Journal of Technology and Educational Innovation Vol. 10. No. 2. December 2024 - pp. 163-178 - ISSN: 2444-2925

DOI: https://doi.org/10.24310/ijtei.102.2024.20202

Evaluation of a scale on ICT knowledge applied to educational inclusion: A Graded Response Model approach

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

1. INTRODUCTION

The need to adapt to a constantly changing world, in which Information and Communication Technologies (ICT) play a prominent role, is one of the main goals of today’s society. Educational digital tools can be configured to provide learning resources at different levels of difficulty and in various formats (audio, text, video, etc.), ensuring accessibility and adaptability so that each student can access the content in a way that is understandable and manageable, in line with the Universal Design for Learning approach (Parody-García et al., 2022; Vigo, 2021). In addition, technology plays a fundamental role in the development of digital and communication skills for students with functional diversity, while favoring their autonomy, motivation, academic performance and integral development.

The integration of ICT in education has meant the reformulation of teaching approaches, establishing the updating of teaching and the modification of the curricula of future teachers as elementary factors to ensure that technologies have a positive impact on the teaching-learning processes of all learners, including students with functional diversity (Moriña, 2020; Parra & Agudelo, 2020; Vigo, 2021). In this context, the United Nations Educational, Scientific and Cultural Organization [UNESCO] (2019) has presented a reference framework for teacher professional development with digital competences standards that allow countries to offer a comprehensive view of ICT in education, addressing them from six dimensions (understanding the role of ICT in education policy, curriculum and assessment, pedagogy, digital competences, organization and management, and teacher professional learning) in three progressive stages (knowledge acquisition, knowledge deepening and knowledge creation). These competences can be described as the combination of knowledge, skills and attitudes towards technologies that teachers must acquire and put into practice in order to optimize their professional work from a critical, creative, innovative and inclusive paradigm (National Institute of Educational Technologies and Teacher Training [INTEF], 2022).

The development of technological skills in teachers should be promoted from their initial training, considering that this training should focus on an epistemological, theoretical and practical foundation that also contemplates the acquisition of attitudinal and procedural competences focused on achieving quality inclusive education (Kerexeta-Brazal et al., 2022; Recio-Muñoz et al., 2020; Ripoll-Rivaldo, 2021). These competences, according to Almerich et al. (2020), are classified into technological competences, referring to the skills that enable the mastery of digital tools; pedagogical competences, which are related to the use of technological resources to carry out training or academic tasks; and ethical competences, linked to the appropriate use of ICT.

The process of pedagogical innovation must transcend from the promotion of basic digital skills to the development of specific technological competences that favor the creation of optimal learning environments adapted to the needs, characteristics and interests of all students. Along these lines, Cabero-Almenara and Martínez (2019) point out that ICT training is gradual and takes time to consolidate the knowledge and skills needed to carry out innovative educational practices.

Tejedor et al. (2009) argue that teachers can show different attitudes towards the use of ICT: interest in technology (technophilia) or, on the contrary, rejection of its use (technophobia). Hence the need to make teachers aware, from their initial training, of the importance of using ICT for didactic and inclusive purposes.

Several studies related to the integration of ICT in initial teacher education examine how content and training in digital competence are being addressed, share enriching experiences for the transformation of training practices and reflect on key changes to improve teacher education (Ari et al., 2022; Pinto-Santos et al., 2023).

Following an extensive review of the literature, a number of models on the development of teachers’ digital competences have been analyzed which emphasize that it is a progressive and multifaceted process. The most prominent of these are:

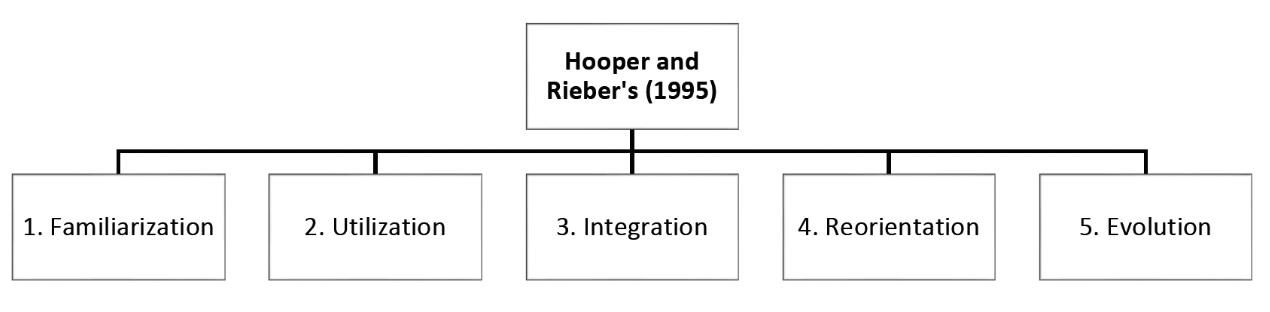

- Hooper and Rieber’s (1995) model: it envisages five phases in the process (familiarization as the initial stage of learning ICT outside the classroom, incorporation of what has been learned into the school context, integration as decision-making for technology-mediated activities, reconsideration of teaching praxis in terms of ICT possibilities and students’ needs/characteristics and, finally, continuous familiarization, which is based on the recognition that there are always new ICT solutions and the adoption of new decisions).

DIAGRAM 1. Hooper and Rieber’s model (1995)

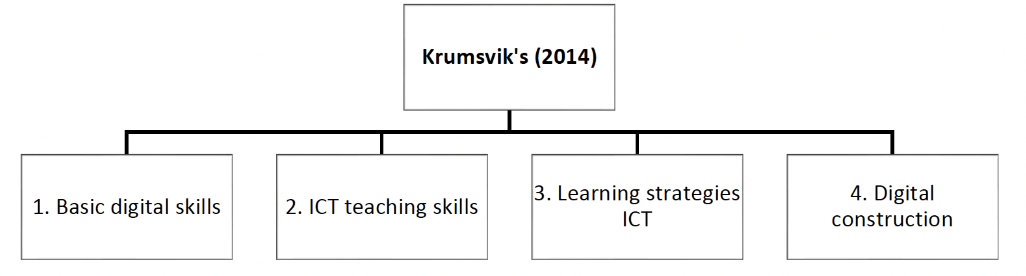

- Krumsvik’s model (2014): determines four stages to achieve optimal digital competence (basic digital skills, ICT teaching skills, ICT learning strategies and digital construction).

DIAGRAM 2. Krumsvik’s model (2014)

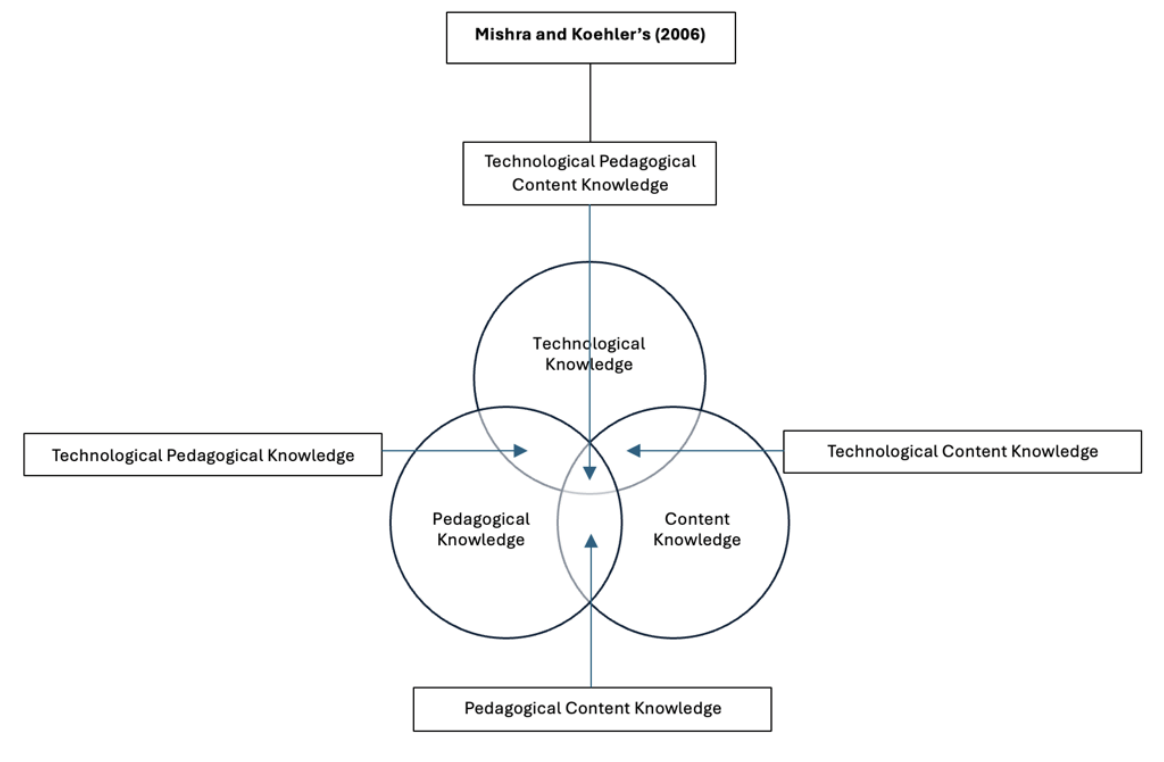

- Mishra and Koehler’s model (2006): this is a model that has gained some traction in recent years, known as Technological Pedagogical Content Knowledge (TPACK). It is based on the acquisition of three types of basic knowledge (technological, pedagogical and content knowledge). This approach argues that such knowledge should be acquired in combination (pedagogical content knowledge, knowledge of the use of technologies, technological pedagogical knowledge, and technological, pedagogical and content knowledge).

DIAGRAM 3. Mishra and Koehler’s model (2006)

Taking the aforementioned models as a reference, Cabero-Almenara and Martínez (2019) mention the following aspects to bear in mind in ICT teacher training: training actions should not be limited to traditional approaches or focus solely on technological aspects, so they should be approached from different and transversal perspectives to be more effective; training should consider various dimensions (instrumental, semiological/aesthetic, curricular, pragmatic, psychological, producer/designer, selector/evaluator, critic, organizer, attitudinal, and researcher) and reflection on professional performance should be encouraged.

The teacher training framework should therefore include a series of competences that can be summarized as follows: a predisposition towards cooperative work and curricular flexibility, technical and didactic management of ICT, attention to diversity, organizational skills and the ability to adapt to change (Almerich et al., 2020; Kerexeta-Brazal et al., 2022; Laitón et al., 2017). While it is true that teacher training in the knowledge and appropriate use of ICT is essential, the importance of the provision of technological resources by educational institutions in order to offer innovative and inclusive education should not be overlooked (Gallardo-Montes et al., 2023; Pegalajar, 2017).

The aim of this study is to evaluate an instrument (scale) to measure prospective teachers’ knowledge of ICT applied to educational inclusion using the Graded Response Model (GRM). More specifically, we set out the following specific objectives: O1) to evaluate the psychometric properties of the scale; and O2) to determine the effectiveness of the items in discriminating between different levels of knowledge.

This study is part of a larger research project analyzing teacher training in the development of digital competences applied to inclusive education (Parody-García, in press).

2. MATERIAL AND METHOD

A research process has been carried out, sometimes considered within analytical designs (Colás-Bravo & Buendía-Eisman, 1998) although it undoubtedly corresponds to a psychometric study (Romero-Martínez & Ordóñez-Camacho, 2015) on a non-probabilistic sample of 684 university students of the Degree in Primary Education from different Andalusian public universities (UMA, UGR, UAL, UJA, UCA, UCO, US, UHU). Table 1 shows the socio-demographic characteristics of the sample.

TABLE 1. Sample characteristics

| Characteristic | Value |
| --- | --- |
| Age | Mean = 20.39 (SD = 4.285) |
| Sex | Male: 176; Female: 508 |
| University of origin | U. Granada: 161; U. Málaga: 476; Other Andalusian universities: 47 |

Acronyms: U. = University; SD = Standard Deviation

Data collection was carried out during the 2022-2023 academic year, specifically from January to June 2023. To this end, university teachers from different Andalusian public universities who teach different courses and groups of the Primary Education Degree were contacted by e-mail. A letter of introduction was sent to them detailing the purpose of the study and requesting their collaboration in distributing the questionnaire among their students (in the case of the University of Malaga, we attended the classes of the lecturers who showed interest in the study and allowed us to use part of their session for the dissemination and completion of the questionnaire by the students). It should be noted that the informed consent document, which sets out the ethical principles and confidentiality of the research as well as the rights of the participants, was provided at the same link as the questionnaire.

The instrument used was a reduced version of the ICT Knowledge Scale applied to people with functional diversity by Cabero-Almenara et al. (2016), the purpose of which was to find out the level of training and knowledge of students studying for a Primary Education Degree in Spain on this subject. Specifically, the original scale was applied to a non-probabilistic sample of 533 university students from several Spanish universities (Universities of the Basque Country, Cantabria, Cordoba, Huelva, Alicante, Murcia, Malaga, Balearic Islands, Santiago de Compostela, Jaen and Seville). The authors elaborated a Likert-type scale of 73 items, of which 18 refer to the technical-didactic mastery of different technologies and 55 are based on the assessment of the use of ICT for people with functional diversity. In this article, we focus on the 12 general items of the latter group (see Table 2).

In that study, the authors obtained a Cronbach’s alpha of 0.992 and identified 6 subscales that together explain 78.073% of the variance: general scale, visual scale, auditory scale, cognitive scale, motor scale and accessibility scale. These values can be consulted in the articles published by the authors (Cabero-Almenara et al., 2016), where they explain in detail the construction and validation process, including the goodness-of-fit indices of the models (e.g. KMO and similar) as well as the different analytical procedures from Classical Test Theory (CTT).

Only the general scale has been used for this study because: a) it includes substantially fewer items, b) it provides a general measure of knowledge, allowing it to be used as a screening test for an initial assessment, and c) the scale items are considered to be unidimensional (see Table 2).

TABLE 2. General scale items

| Item | Statement |
| --- | --- |
| V15 | I have general knowledge about the possibilities that ICTs offer to people with disabilities. |
| V16 | I can select specific ICTs according to the physical, sensory and cognitive characteristics of different people. |
| V17 | I am able to provide information on the possibilities of ICT for the labor market integration of people with different types of disabilities. |
| V18 | I am aware of different books that are specifically dedicated to the analysis of the possibilities of ICTs for people with different types of disabilities. |
| V19 | I am aware of different educational experiences of applying ICT for people with different types of disabilities. |
| V20 | I am familiar with mobile applications in relation to subjects with special educational needs. |
| V21 | I am aware of the main limitations that may condition the use of ICT by learners with disabilities. |
| V22 | I know different places on the Internet where I can find educational materials for people with special educational needs. |
| V23 | In general, I feel prepared to help the student with certain disabilities in the use of technical aids and the use of ICT. |
| V24 | I can design activities with generalized educational software for learners with special educational needs. |
| V76 | I am aware of the problems and the importance of different types of disabilities for the use of ICT. |
| V77 | I consider myself competent in locating educational materials for learners with specific educational support needs on the web. |

To achieve the proposed objectives, a psychometric analysis was carried out from the perspective of Item Response Theory or IRT (Baker & Kim, 2004; Van der Linden & Hambleton, 1997). This approach encompasses a series of models designed to explain the connection between an unobservable skill, trait or competence, such as domain knowledge, and its observable indicators, the responses given to a set of items. Unlike CTT, which focuses on composite scores and linear regression, IRT focuses on response patterns and considers them in probabilistic terms. This approach takes into account:

- Item discrimination: the ability of an item to distinguish between individuals with different levels of knowledge.

- Item difficulty: the level of knowledge at which 50% of respondents are expected to answer an item correctly, indicating the probability of a correct response.

- Additional parameters: depending on the specific IRT model, parameters such as guessing probability may also be considered.
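As an illustration of how discrimination and difficulty act together, the following minimal sketch uses the two-parameter logistic model for a dichotomous item. It is not part of the study's analysis: the function name `p_correct` and the parameter values are hypothetical and chosen only to show that the probability of a correct response is 0.5 when the knowledge level equals the difficulty, and that a larger discrimination makes the curve steeper around that point.

```python
import math


def p_correct(theta: float, a: float, b: float) -> float:
    """Two-parameter logistic item response function.

    theta: latent knowledge level, a: discrimination, b: difficulty.
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


# Hypothetical item with difficulty b = 0.5 on the latent scale.
b = 0.5
for a in (0.8, 2.5):  # illustrative low and high discrimination values
    below = p_correct(b - 1.0, a, b)
    at = p_correct(b, a, b)
    above = p_correct(b + 1.0, a, b)
    print(f"a={a}: P(b-1)={below:.3f}  P(b)={at:.3f}  P(b+1)={above:.3f}")
```

With the higher discrimination, the probabilities one unit below and one unit above the difficulty lie much further apart, which is what allows an item to separate respondents with different levels of knowledge.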

Assessing item difficulties is crucial to align the test with the knowledge levels of the target population and to ensure full coverage of the knowledge range. IRT models therefore offer several advantages over CTT models, such as allowing the construction of scales that differentiate optimally between individuals with high and low levels of the trait, while allowing scales to have fewer items than other psychometric approaches. Although IRT was developed on tests of dichotomous items, a generalization of the procedure for polytomous items is available as the Graded Response Model (GRM), which was developed by Samejima (1969). Specifically, the following analyses have been carried out in this study using this GRM approach:

- Analysis of the unidimensionality of the scale: this is a preliminary step in the application of IRT models. This can be done in many different ways: exploratory factor analysis, principal component analysis, correspondence analysis, or even simply inter-item correlation (Rizopoulos, 2006). Among the different ways of analyzing unidimensionality, Principal Component Analysis was chosen (Chou & Wang, 2010; Wismeijer et al., 2008). For the assessment of model fit, the following were taken into account: the Root Mean Square of Residuals (RMSR), for which a reference value of 0.05 is used regardless of the estimated model and solution (Harman, 1976); the Tucker-Lewis Index (TLI), where values above 0.95 indicate a good fit (Bentler, 1990); and the root mean square error of approximation (RMSEA), where values less than 0.05 indicate a good model fit and values up to 0.08 represent a reasonable error of approximation to the population (Browne & Cudeck, 1993). For a detailed discussion of these issues, see Ferrando et al. (2022). These values should be interpreted in context with the nature of the data, being considered as a whole and in relation to the construct or theoretical model (Lorenzo-Seva et al., 2011). In this way, the cut-off points established by the authors are benchmarks that provide guidance on goodness of fit and are interpreted flexibly (Lai & Green, 2016).

- Parameter estimation for each item: discrimination and difficulty parameters were estimated for each item, including response thresholds to determine the corresponding proficiency levels.

- Assessment of model goodness-of-fit: model fit was verified using indices such as log-likelihood, Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC), ensuring model fit to the data.

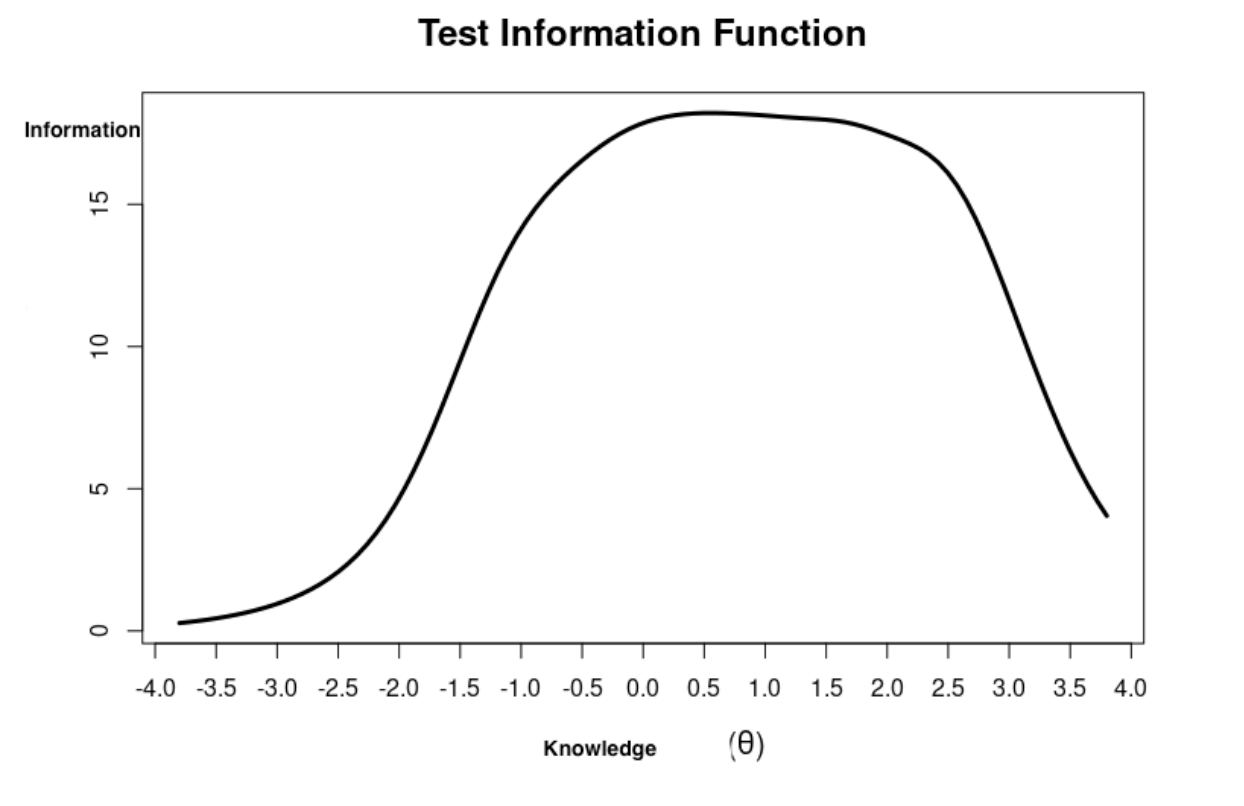

- Information function analysis: the information function of the test was analyzed to assess the precision of the estimates along the latent trait continuum, identifying the areas of highest precision.

- Review of bivariate margins: the fit on the bivariate (two-way) margins was reviewed to identify problems of fit between pairs of items, highlighting those with a significant lack of fit.

It should be noted that the ltm package (Rizopoulos, 2006) implemented in R (R Core Team, 2022) was used for the entire analysis.
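For reference, the GRM can be written in its standard textbook form as follows. This is a sketch under stated assumptions: the symbols are chosen here for illustration ($a_i$ for the discrimination of item $i$, $b_{ik}$ for its $k$-th threshold, $\theta$ for the latent knowledge level, $K$ for the number of response categories), and the form is equivalent to, but not necessarily identical with, the parameterization used internally by the software.

```latex
P^{*}_{ik}(\theta) = P(X_i > k \mid \theta)
  = \frac{1}{1 + \exp\left[-a_i\,(\theta - b_{ik})\right]},
  \qquad k = 1, \dots, K-1,

P^{*}_{i0}(\theta) = 1, \qquad P^{*}_{iK}(\theta) = 0,

P(X_i = k \mid \theta) = P^{*}_{i,k-1}(\theta) - P^{*}_{ik}(\theta),
  \qquad k = 1, \dots, K.
```

Under this form, each item with $K$ response categories has one discrimination parameter and $K-1$ ordered thresholds, and each threshold $b_{ik}$ is the knowledge level at which a response above category $k$ has probability 0.5.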

3. RESULTS

In the following sub-sections, the results are organised on the basis of the objectives defined in this study.

3.1. To assess the psychometric properties of the scale

As noted above, a preliminary step in applying IRT is the verification of the assumption of unidimensionality. This phase began with a principal component analysis (PCA) followed by an exploratory factor analysis (EFA). The suitability of the data set for these procedures was assessed by means of the Kaiser-Meyer-Olkin (KMO) test (which was 0.94) and Bartlett’s test of sphericity (which was statistically significant: χ² = 5249.695 with 66 degrees of freedom, p < .001), thus supporting the application of these analyses. With the sample under analysis, the PCA results show that the first principal component (PC1) explains 57% of the total variance of the items. The items have standardized loadings on the first component ranging from 0.58 to 0.83, suggesting a good contribution of most of the items to the unidimensional factor. The root mean square of residuals (RMSR) was 0.08, with a chi-square of 517.92 and p < .001, indicating that a one-component model provides a basic but reasonably adequate fit.

To complement the PCA, an exploratory factor analysis (EFA) was conducted using the least squares method. The results of the EFA indicate that the first factor explains 53% of the total variance, with a sum of squared loadings of 6.41. The factor loadings of the items on the first factor vary between 0.54 and 0.82, which confirms a good contribution of the items to the unidimensional factor. The RMSR was 0.06, with an empirical chi-square of 380.16 and p < .001, while the Tucker-Lewis Index (TLI) was 0.853 and the RMSEA was 0.13, with a 90% confidence interval between 0.121 and 0.139. These results suggest that the fit is mediocre. However, the model is considered useful insofar as the 2-component model presented a worse fit, the deviations from the optimal cut-off points are not extreme and the model is consistent with the theoretical validity of the original instrument.
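Two of the figures reported above can be checked with simple arithmetic, shown here as a brief sketch. It assumes the 12 items were analyzed as standardized variables, so that the total variance equals the number of items; the variable names are illustrative.

```python
n_items = 12  # items V15-V24, V76 and V77

# Bartlett's test of sphericity has p(p - 1) / 2 degrees of freedom,
# the number of distinct off-diagonal correlations among p items.
bartlett_df = n_items * (n_items - 1) // 2

# Share of variance explained by the first EFA factor: its sum of
# squared loadings divided by the total variance (assumed equal to p).
efa_share = 6.41 / n_items

print(bartlett_df)          # 66
print(round(efa_share, 3))  # 0.534
```

Both values agree with the 66 degrees of freedom and the 53% of explained variance given in the text.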

3.2. To determine the effectiveness of the items in discriminating between different levels of knowledge

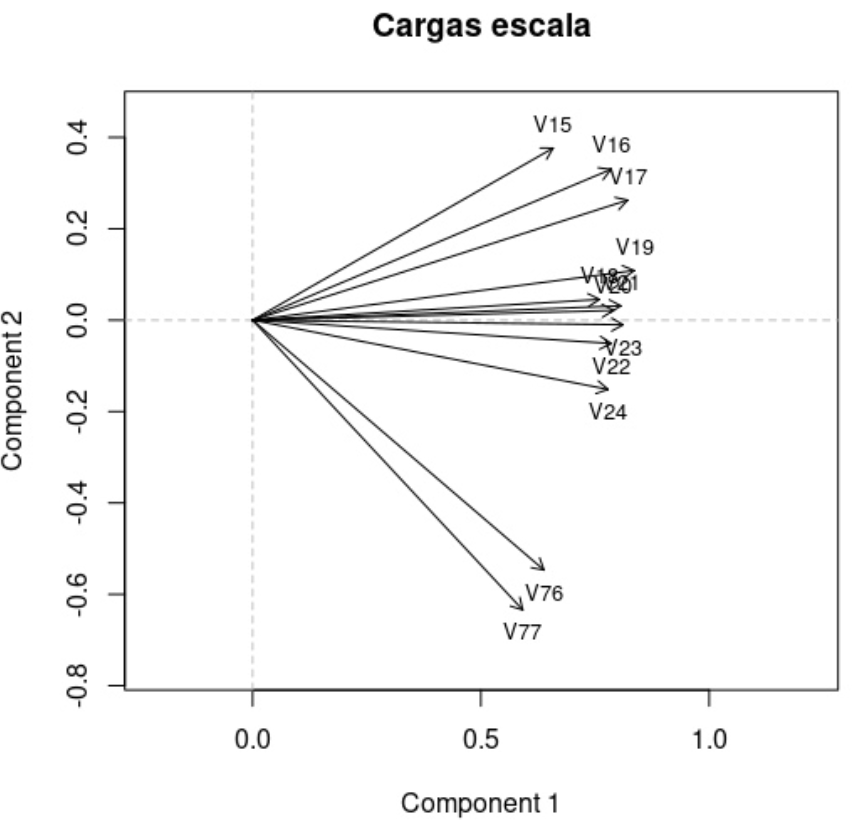

In addition, a categorical principal components analysis (Princals) was performed to examine the structure of the items. The results show that the first eigenvalue is 6.91, confirming the existence of a dominant factor that explains a significant part of the variance. The loss value was 0.668 after 27 iterations, indicating good convergence of the model. However, it can be observed in Figure 1 that two variables (V76 and V77) move away from the rest, which could suggest that these items contribute to the less-than-optimal model fit.

FIGURE 1. Categorical principal components

In any case, this evidence indicates that unidimensionality of the scale cannot be ruled out and the use of Item Response Theory (IRT) models is justified.

The Graded Response Model (GRM) was fitted to the data to assess the psychometric properties of the scale. The log-likelihood (logLik = -11699.62; df = 84), AIC (23567.24) and BIC (23947.59) indicate that the model fits the data adequately, maintaining a balance between accuracy and model complexity.
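The reported information criteria can be reproduced from the log-likelihood using the usual definitions, AIC = -2·logLik + 2p and BIC = -2·logLik + p·ln(n). The sketch below assumes that the 84 reported degrees of freedom are the number of estimated parameters (12 items, each with one discrimination and six thresholds) and that n is the sample size of 684; the variable names are illustrative.

```python
import math

log_lik = -11699.62  # reported log-likelihood of the fitted GRM
n_params = 12 * (1 + 6)  # 12 items x (1 discrimination + 6 thresholds) = 84
n_obs = 684  # sample size

aic = -2.0 * log_lik + 2.0 * n_params
bic = -2.0 * log_lik + n_params * math.log(n_obs)

print(f"AIC = {aic:.2f}")  # AIC = 23567.24
print(f"BIC = {bic:.2f}")  # BIC = 23947.59
```

Both values match those reported. Since AIC and BIC are relative measures, they are most informative when compared across competing models fitted to the same data.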

Table 3 shows the estimated parameters for each item, including discrimination values (Dscrmn) and response category thresholds (Extrmt).

TABLE 3. Parameters of GRM items

| Item | Discrimination | Threshold 1 | Threshold 2 | Threshold 3 | Threshold 4 | Threshold 5 | Threshold 6 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| V15 | 1.633 | -2.213 | -1.179 | -0.383 | 0.457 | 1.398 | 2.420 |
| V16 | 2.432 | -1.302 | -0.481 | 0.109 | 0.813 | 1.639 | 2.345 |
| V17 | 2.668 | -1.198 | -0.407 | 0.274 | 0.857 | 1.566 | 2.420 |
| V18 | 2.216 | -0.021 | 0.610 | 1.063 | 1.660 | 2.252 | 2.947 |
| V19 | 2.756 | -0.765 | -0.035 | 0.506 | 1.139 | 1.749 | 2.720 |
| V20 | 2.287 | -0.692 | 0.026 | 0.510 | 1.126 | 1.845 | 2.776 |
| V21 | 2.469 | -1.153 | -0.287 | 0.338 | 0.955 | 1.628 | 2.370 |
| V22 | 2.254 | -1.012 | -0.173 | 0.418 | 1.060 | 1.676 | 2.419 |
| V23 | 2.519 | -0.958 | -0.124 | 0.503 | 1.133 | 1.758 | 2.360 |
| V24 | 2.146 | -0.203 | 0.523 | 1.062 | 1.662 | 2.340 | 3.213 |
| V77 | 1.180 | -0.675 | 0.295 | 1.022 | 1.795 | 2.678 | 3.659 |
| V76 | 1.354 | -0.406 | 0.444 | 1.177 | 1.862 | 2.546 | 3.330 |

It can be observed that most of the items have high discrimination values; items such as V16 (2.432), V17 (2.668) and V19 (2.756) stand out for their ability to differentiate between people with high and low levels of knowledge.

With respect to the item thresholds, it is observed that they are well distributed along the latent trait (knowledge) continuum, indicating that the items can capture a wide range of proficiency levels. For example, item V15 has thresholds ranging from -2.213 to 2.420, covering a wide range of difficulty.
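To make the role of the thresholds concrete, the following minimal sketch computes the probability of each of the seven response categories for item V15 at three knowledge levels, using the parameters reported in Table 3. It assumes the standard logistic form of the GRM; the function name `grm_category_probs` is illustrative and is not part of the ltm package.

```python
import math


def grm_category_probs(theta, a, thresholds):
    """Category probabilities under the graded response model."""
    # Cumulative probabilities P(X >= k) for k = 2..K, padded with
    # P(X >= 1) = 1 at the start and P(X >= K + 1) = 0 at the end.
    cum = [1.0]
    cum += [1.0 / (1.0 + math.exp(-a * (theta - b))) for b in thresholds]
    cum += [0.0]
    # Each category probability is the difference of adjacent cumulatives.
    return [cum[k] - cum[k + 1] for k in range(len(cum) - 1)]


# Item V15 as reported in Table 3.
a_v15 = 1.633
b_v15 = [-2.213, -1.179, -0.383, 0.457, 1.398, 2.420]

for theta in (-2.0, 0.0, 2.0):
    probs = grm_category_probs(theta, a_v15, b_v15)
    most_likely = probs.index(max(probs)) + 1  # categories numbered from 1
    print(theta, [round(p, 3) for p in probs], "most likely:", most_likely)
```

As the knowledge level increases, the most probable response moves towards the higher categories, and at a level equal to a given threshold the probability of responding above that threshold is exactly 0.5.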

An analysis of the information function of the model was then carried out to assess the accuracy of the scale at different levels of competence in order to get an overview of how much information the items provide in the various parts of the knowledge continuum of the participants.

The total information provided by the scale is 88.98. Of this, 86.89 information units, representing 97.65% of the total, are in the -4 to 4 range on the latent trait continuum. The fact that almost all the information is concentrated in the -4 to 4 range indicates that the scale is extremely accurate in measuring knowledge within this interval.
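The way such totals are obtained can be sketched by integrating the test information function numerically with the item parameters from Table 3. This is a sketch under stated assumptions, not the routine used by ltm: it takes the standard GRM item information (the sum over categories of the squared derivative of the category probability divided by that probability), a trapezoidal rule and integration limits of -10 to 10, all of which are choices made here. Whether the output reproduces the reported 88.98 and 97.65% exactly has not been verified.

```python
import math

# Discrimination and thresholds copied from Table 3.
ITEMS = {
    "V15": (1.633, [-2.213, -1.179, -0.383, 0.457, 1.398, 2.420]),
    "V16": (2.432, [-1.302, -0.481, 0.109, 0.813, 1.639, 2.345]),
    "V17": (2.668, [-1.198, -0.407, 0.274, 0.857, 1.566, 2.420]),
    "V18": (2.216, [-0.021, 0.610, 1.063, 1.660, 2.252, 2.947]),
    "V19": (2.756, [-0.765, -0.035, 0.506, 1.139, 1.749, 2.720]),
    "V20": (2.287, [-0.692, 0.026, 0.510, 1.126, 1.845, 2.776]),
    "V21": (2.469, [-1.153, -0.287, 0.338, 0.955, 1.628, 2.370]),
    "V22": (2.254, [-1.012, -0.173, 0.418, 1.060, 1.676, 2.419]),
    "V23": (2.519, [-0.958, -0.124, 0.503, 1.133, 1.758, 2.360]),
    "V24": (2.146, [-0.203, 0.523, 1.062, 1.662, 2.340, 3.213]),
    "V77": (1.180, [-0.675, 0.295, 1.022, 1.795, 2.678, 3.659]),
    "V76": (1.354, [-0.406, 0.444, 1.177, 1.862, 2.546, 3.330]),
}


def item_information(theta, a, thresholds):
    """GRM item information: sum over categories of (dP_k/dtheta)^2 / P_k."""
    cum = [1.0]
    cum += [1.0 / (1.0 + math.exp(-a * (theta - b))) for b in thresholds]
    cum += [0.0]
    slope = [a * c * (1.0 - c) for c in cum]  # derivative of each cumulative
    info = 0.0
    for k in range(len(cum) - 1):
        p_k = cum[k] - cum[k + 1]
        if p_k > 1e-12:  # skip categories with negligible probability
            info += (slope[k] - slope[k + 1]) ** 2 / p_k
    return info


def scale_information(theta):
    """Test information: sum of the item information functions."""
    return sum(item_information(theta, a, b) for a, b in ITEMS.values())


def area(lo, hi, steps=4000):
    """Trapezoidal area under the test information curve on [lo, hi]."""
    h = (hi - lo) / steps
    total = 0.5 * (scale_information(lo) + scale_information(hi))
    total += sum(scale_information(lo + i * h) for i in range(1, steps))
    return total * h


total = area(-10.0, 10.0)
central = area(-4.0, 4.0)
print(f"area on [-10, 10]: {total:.2f}")
print(f"area on [-4, 4]:   {central:.2f} ({100 * central / total:.2f}% of total)")
```

The share of the area falling inside the -4 to 4 range is what the percentage above expresses: the larger it is, the more of the scale's measurement precision is located at knowledge levels that respondents are likely to have.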

To visualise the accuracy of the test at different proficiency levels, the graph (Figure 2) of the information function of the Graded Response Model (GRM) was generated. This graph shows how the amount of information provided by the items varies along the knowledge continuum. The peaks in the graph indicate where the scale is most informative and therefore most accurate. This means that the scale has a high ability to discriminate between individuals in those specific ranges. Furthermore, the high amount of information in the central range suggests that the scale’s estimates of participants’ knowledge will be accurate and reliable for most participants.

FIGURE 2. Graph of the information function of scale

The analysis of the factor scores and item responses provides a comprehensive picture of the level of knowledge about ICT used with people with functional diversity in the sample studied. The mean latent score is approximately -0.021, suggesting that, on average, participants have a level of knowledge close to the mean of the latent trait. The latent scores range from -2.32 to 3.68, indicating a wide dispersion in knowledge levels among participants.

The mean standard error is 0.236, suggesting high precision in the estimation of latent scores. Standard errors range from 0.061 to 0.496, indicating that most estimates of latent scores are fairly accurate. A low standard error is necessary to ensure the reliability of the measurements, as it suggests that the estimates of the latent scores are consistent and replicable.

These results reflect a significant diversity in the participants’ knowledge of ICT applied to people with functional diversity. The wide variation in latent scores may imply the need to generate and/or design training plans that address digital teaching competences for educational inclusion. Furthermore, the accuracy in estimating latent scores supports the validity of the measurement instrument, indicating that it is adequate to capture the subtleties and nuances of knowledge in this specific area.

4. DISCUSSION

A psychometric analysis based on IRT has been carried out that resolves some of the limitations of Classical Test Theory when validating instruments. In addition, it is necessary to take into account some limitations of the validation process in the original publication. Thus, the very high internal consistency of the original scale (Cabero-Almenara et al., 2016) may indicate a redundancy problem in the measure (Panayides, 2013). The latent structure was identified from a Principal Component Analysis with Varimax rotation, a classical approach to the psychometric problem that has been superseded in recent decades (Widaman, 2007). In this study, only one subscale has been used, considerably reducing its length and facilitating its use as a screening test. The information function of the scale indicates that it provides very reliable estimates for most of the people assessed.

Overall, most of the fit values between pairs of items are within an acceptable range, indicating that the GRM adequately captures the relationships between most of the items. However, they also suggest areas where the model may be less accurate due to fit problems with the V77-V76 pair, an issue that is graphically observed in the categorical principal components analysis (Figure 1).

The results show that the scale used is not only effective in measuring the average knowledge of the participants, but it is also able to detect significant variations between individuals, which is essential for designing more effective educational interventions and training programmes. The accuracy of the estimates reinforces confidence in the results obtained (López-Falcón, 2021), providing a solid basis for future research and practical applications in the field of ICT education and teacher training for people with functional diversity (Ari et al., 2022; Blasco-Serrano et al., 2022; Kerexeta-Brazal et al., 2022).

These results indicate that the University of Granada provides more effective ICT training, reflected in the higher and less dispersed scores of its students. In contrast, students from “Other universities” and the University of Malaga have lower levels of ICT knowledge than the overall average, with greater diversity in proficiency levels within the “Other universities” group. These findings highlight the importance of institutional context in ICT training, suggesting the need to adapt educational programmes to address differences in knowledge levels (Cabero-Almenara et al., 2022; Díaz-García et al., 2020).

Research by Masoumi (2021), Pinto-Santos et al. (2023), Recio-Muñoz et al. (2020) and Silva et al. (2018), for example, argues that comprehensive training in the promotion of digital skills is essential, as teachers are at a basic level in terms of ICT knowledge and management, i.e. they know basic technological tools, but find it more complex to perform advanced tasks such as creating content or activities using technology. Likewise, the results of a study carried out by Guillén-Gámez et al. (2020) corroborate that future teachers have a low level of ICT use in the classroom and show that the degree of digital competence and motivation to use ICT are variables that are positively correlated.

The study underlines the importance of socio-demographic variables in the level of ICT knowledge. Men, students from certain universities, and those who have received ICT training show higher levels of knowledge, which we value from the point of view of critical enquiry and the potential of the scale used for the improvement of initial teacher training in educational innovation and inclusion. Furthermore, these findings highlight the need to consider these differences when designing educational and training programmes, with the aim of addressing knowledge gaps and promoting equitable and effective training in educational technology. This same idea is highlighted in a study by Nieto-Isidro et al. (2022) on the relationship between information literacy and socio-demographic variables in teachers and future teachers in compulsory education.

5. CONCLUSIONS

Educational inclusion requires changes in teaching-learning methods and ICT offers the necessary tools to make these adjustments efficiently, becoming a fundamental resource for the promotion of equity and quality in education. ICT not only facilitates access to learning and communication, but also fosters collaboration, autonomy and active participation of students with functional diversity in pedagogical processes. These resources need to be appropriately integrated to ensure that all learners have the opportunity to reach their full potential, regardless of their individual abilities.

The fit of the GRM to the data was satisfactory, as indicated by the fit indices (log-likelihood, AIC, BIC). The discrimination parameters suggest that most of the items are effective in differentiating levels of competence, and the well-distributed thresholds ensure a wide coverage of the range of competence of the latent trait. These results support the validity of using the GRM to assess prospective teachers’ knowledge of ICT for diversity.

The analysis of the information function confirms that the scale is highly effective in measuring prospective teachers’ knowledge of ICT for diversity. On the other hand, the interpretation of the latent scores suggests that the sample has a distribution of ICT for diversity knowledge centred close to the mean.

The implementation of this scale not only makes it possible to assess the digital and inclusive competences of future teachers, but also to raise awareness and advocate for the need to reformulate teacher training plans to meet the demands of a highly heterogeneous society. It therefore serves as a predictive tool to identify training gaps and develop training programmes that respond to these needs. This represents a step forward in generating scientific knowledge within the paradigm of inclusive education, which needs resources and instruments to move towards a true educational transformation grounded in fully reliable and valid evidence, rather than in para-scientific criteria or ad hoc ideas, for a democratic, rational and human rights-based pedagogical construction.

The evolution of ICT and diversity in the classroom require continuous teacher training and the design and/or revision of scales to assess digital competences for inclusive education. These measurement tools facilitate the understanding of the level of knowledge of future teachers and improve both teacher training and the quality of education. The aim is to continue transforming education based on scientific-technical criteria that enable the understanding of a reality that is key to educational quality: the challenge of educational inclusion. Teachers need this type of instrument, which can and should be shared, to increase their commitment to diversity and to digital competences applied to pedagogical-inclusive responses that bring more revitalising, dynamic, innovative and creative meanings to the new school scenarios.

5.1. Limitations and future lines of research

A significant fit problem was detected involving items V76 and V77. This finding indicates the need to revise these items to improve the validity and reliability of the scale.

Overall, the results support the use of the Graded Response Model, but also highlight areas for improvement in the wording and evaluation of some specific items.

Future research could design a scale on digital teaching competences for inclusion that covers emerging ICT competences such as artificial intelligence, big data analysis and cybersecurity, among others. These emerging areas are key to addressing the complexity of a school that increasingly has to respond to cultural and technological challenges in a hybrid, interconnected and networked world.

6. ACKNOWLEDGMENTS

Lucía María Parody García thanks the Spanish Ministry of Universities for the FPU grant (FPU20/00049).

7. REFERENCES

Almerich, G., Suárez-Rodríguez, J., Díaz-García, I., & Orellana, N. (2020). Structure of 21st century competencies in students in the educational field. Influential personal factors. Educación XX1, 23 (1), 45-74. https://doi.org/10.5944/educXX1.23853

Ari, R., Altinay, Z., Altinay, F., Dagli, G., & Ari, E. (2022). Sustainable management and policies: the roles of stakeholders in the practice of inclusive education in digital transformation. Electronics, 11 (4), 1-16. https://doi.org/10.3390/electronics11040585

Baker, F., & Kim, S.H. (2004). Item Response Theory. Marcel Dekker.

Bentler, P. M. (1990). Comparative fit indexes in structural models. Psychological Bulletin, 107 (2), 238-246. https://doi.org/10.1037/0033-2909.107.2.238

Blasco-Serrano, A. C., Bitrián-González, I., & Coma-Roselló, T. (2022). Incorporation of ICT in initial teacher training through Flipped Classroom to promote inclusive education. EDUTEC, (79), 9-29. https://doi.org/10.21556/edutec.2022.79.2393

Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models (pp. 136-162). Sage.

Cabero-Almenara, J., Fernández-Batanero, J. M., & Córdoba-Pérez, M. (2016). Knowledge of ICT applied to people with disabilities. Construction of a diagnostic instrument. Magis, International Journal of Research in Education, 8 (17), 157-176. https://doi.org/10.11144/Javeriana.m8-17.ctap

Cabero-Almenara, J., Guillén-Gámez, F. D., Ruiz-Palmero, J., & Palacios-Rodríguez, A. (2022). Teachers’ digital competence to assist students with functional diversity: Identification of factors through logistic regression methods. British Journal of Educational Technology, 53 (1), 41-57. https://doi.org/10.1111/bjet.13151

Cabero-Almenara, J., & Martínez, A. (2019). Information and communication technologies and initial teacher education: digital models and competences. Profesorado, Revista de Currículum y Formación de Profesorado, 23 (3), 247-268. https://doi.org/10.30827/profesorado.v23i3.9421

Chou, Y.T., & Wang, W.C. (2010). Checking Dimensionality in Item Response Models With Principal Component Analysis on Standardized Residuals. Educational and Psychological Measurement, 70 (5), 717-731. https://doi.org/10.1177/0013164410379322

Colás-Bravo, M. P., & Buendía-Eisman, L. (1998). Educational research (3rd ed.). Alfar.

Díaz-García, I., Almerich-Cerveró, G., Suárez-Rodríguez, J., & Orellana-Alonso, N. (2020). The relationship between ICT competences, ICT use and learning approaches in university education students. Journal of Educational Research, 38 (2), 549-566. https://doi.org/10.6018/rie.409371

Ferrando, P.J., Lorenzo-Seva, U., Hernández-Dorado, A., & Muñiz, J. (2022). Decalogue for the Factorial Analysis of Test Items. Psicothema, 34 (1), 7-17. https://doi.org/10.7334/psicothema2021.456

Gallardo-Montes, C. P., Caurcel-Cara, M. J., Crisol-Moya, E., & Peregrina-Nievas, P. (2023). ICT Training Perception of Professionals in Functional Diversity in Granada. International Journal of Environmental Research and Public Health, 20 (3), 2064. https://doi.org/10.3390/ijerph20032064

Guillén-Gámez, F. D., Mayorga-Fernández, M. J., & Álvarez-García, F. J. (2020). A Study on the Actual Use of Digital Competence in the Practicum of Education Degree. Technology, Knowledge and Learning, 25, 667-684. https://doi.org/10.1007/s10758-018-9390-z

Harman, H. H. (1976). Modern factor analysis (3rd ed.). University of Chicago Press.

Hooper, S., & Rieber, L. P. (1995). Teaching with technology. In A. C. Ornstein (Ed.), Teaching: Theory into practice (pp. 154-170). Allyn and Bacon.

Instituto Nacional de Tecnologías del Aprendizaje y de Formación del Profesorado [INTEF] (2022). Marco de Referencia de la Competencia Digital Docente. INTEF. http://aprende.intef.es/mccdd

Kerexeta-Brazal, I., Darretxe-Urrutxi, L., & Martínez-Monje, P. M. (2022). Digital competence in teaching and educational inclusion at school. A systematic review. Campus Virtuales, 11 (2), 63-73. https://doi.org/10.54988/cv.2022.2.885

Krumsvik, R. J. (2014). Teacher educators’ digital competence. Scandinavian Journal of Educational Research, 58 (3), 269-280. https://doi.org/10.1080/00313831.2012.726273

Lai, K., & Green, S. B. (2016). The Problem with Having Two Watches: Assessment of Fit When RMSEA and CFI Disagree. Multivariate Behavioral Research, 51 (2-3), 220-239. https://doi.org/10.1080/00273171.2015.1134306

Laitón, E.V., Gómez, S.E., Sarmiento, R.E., & Mejía, C. (2017). Inclusive practice competence: ICT and inclusive education in teacher professional development. Sophia, 13 (2), 82-95. https://doi.org/10.18634/sophiaj.13v.2i.502

López-Falcón, A. (2021). The types of research results in educational sciences. Revista Conrado, 17 (S3), 53-61.

Lorenzo-Seva, U., Timmerman, M. E., & Kiers, H. A. L. (2011). The Hull method for selecting the number of common factors. Multivariate Behavioral Research, 46 (2), 340-364. https://doi.org/10.1080/00273171.2011.564527

Masoumi, D. (2021). Situating ICT in early childhood teacher education. Education and Information Technologies, 26, 3009-3026. https://doi.org/10.1007/s10639-020-10399-7

Mishra, P., & Koehler, M.J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. The Teachers College Record, 108 (6), 1017-1054. https://doi.org/10.1111/j.1467-9620.2006.00684.x

Moriña, A. (2020). Faculty members who engage in inclusive pedagogy: Methodological and effective strategies for teaching. Teaching in Higher Education, 27 (3), 1-16. https://doi.org/10.1080/13562517.2020.1724938

Nieto-Isidro, S., Martínez-Abad, F., & Rodríguez-Conde, M. J. (2022). Observed and Self-perceived Informational Competence in teachers and future teachers and its relationship with socio-demographic variables. Revista de Educación, (396), 35-64. https://doi.org/10.4438/1988-592X-RE-2022-396-529

Panayides, P. (2013). Coefficient Alpha: Interpret With Caution. Europe’s Journal of Psychology, 9 (4), 687-696. https://doi.org/10.5964/ejop.v9i4.653

Parody-García, L. M. (in press). New trends in initial and in-service teacher training for the development of digital competences applied to inclusive education. Pedagogical realities and challenges. [Doctoral thesis]. University of Malaga.

Parody-García, L.M., Leiva-Olivencia, J.J., & Santos-Villalba, M.J. (2022). Universal Design for Learning in Digital Teacher Training from an Inclusive Pedagogical View. Revista Latinoamericana de Educación Inclusiva, 16 (2), 109-123. https://dx.doi.org/10.4067/S0718-73782022000200109

Parra, L., & Agudelo, A. (2020). Innovation in ICT-mediated pedagogical practices. In R. Canales & C. Herrera (Eds.), Access, democracy and virtual communities (pp. 51-64). CLACSO.

Pegalajar, M. C. (2017). Future teachers and the use of ICT for inclusive education. Digital Education Review, (31), 131-148.

Pinto-Santos, A. R., Pérez-Garcias, A., & Darder-Mesquida, A. (2023). Digital competence training for teachers: functional validation of the TEP model. Innoeduca. International Journal of Technology and Educational Innovation, 9 (1), 39-52. https://doi.org/10.24310/innoeduca.2023.v9i1.15191

R Core Team (2022). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Recio-Muñoz, F., Silva-Quiroz, J., & Abricot-Marchant, N. (2020). Analysis of digital competence in the initial training of university students: A meta-analysis study in the Web of Science. Pixel-Bit. Journal of Media and Education, (59), 125-146. https://doi.org/10.12795/pixelbit.77759

Ripoll-Rivaldo, M. (2021). Pedagogical practices in teacher training: from the didactic axis. Telos: Revista de Estudios Interdisciplinarios en Ciencias Sociales, 23 (2), 286-304. https://doi.org/10.36390/telos232.06

Rizopoulos, D. (2006). ltm: An R Package for Latent Variable Modeling and Item Response Analysis. Journal of Statistical Software, 17 (5), 1-25. https://doi.org/10.18637/jss.v017.i05

Romero-Martínez, S. J., & Ordóñez-Camacho, X. G. (2015). Psychometrics. Centro de Estudios Financieros.

Samejima, F. (1969). Estimation of Latent Ability using a Response Pattern of Graded Scores. Psychometrika Monograph Supplement.

Silva, J., Lázaro, J. L., Miranda, P., & Canales, R. (2018). The development of teachers’ digital competence during teacher training. Opción, 34 (86), 423-449.

Tejedor, F. J., García-Valcárcel, A., & Prada, S. (2009). Measuring university teaching staff attitudes towards ICT integration. Comunicar, 17 (33), 115-124. https://doi.org/10.3916/c33-2009-03-002

United Nations Educational, Scientific and Cultural Organization [UNESCO] (2019). ICT Competency Framework for Teachers. UNESCO. https://unesdoc.unesco.org/ark:/48223/pf0000371024

Van der Linden, W.J., & Hambleton, R.K. (1997). Handbook of Modern Item Response Theory. Springer.

Vigo, M.B. (2021). Development of creative and inclusive teaching practices with digital media. In C. Latorre & A. Quintas (Coords.), Inclusión educativa y tecnologías para el aprendizaje (pp. 129-144). Octaedro.

Widaman, K. F. (2007). Common factors versus components: Principals and principles, errors, and misconceptions. In R. Cudeck & R. C. MacCallum (Eds.), Factor analysis at 100: Historical developments and future directions (pp. 177-203). Lawrence Erlbaum Associates.

Wismeijer, A. A. A. J., Sijtsma, K., van Assen, M. A. L. M., & Vingerhoets, A. J. J. J. M. (2008). A Comparative Study of the Dimensionality of the Self-Concealment Scale Using Principal Components Analysis and Mokken Scale Analysis. Journal of Personality Assessment, 90 (4), 323-334. https://doi.org/10.1080/00223890802107875