Journal of Technology and Educational Innovation Vol. 10. No. 2. December 2024 - pp. 141-162 - ISSN: 2444-2925

DOI: https://doi.org/10.24310/ijtei.102.2024.20132

The dynamics of disposition: introducing a new scale for evaluating middle school attitudes towards blended learning

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International license.

1. INTRODUCTION

Blended learning, combining face-to-face instruction with web-assisted learning, has become a transformative educational approach for the information age (Dangwal, 2017). Blended learning includes virtual classrooms, personalized training, collaborative learning, and multimedia resources, creating a rich learning environment (Ashraf et al., 2021). It enhances accessibility and flexibility, catering to diverse learning preferences and paces (Chaw & Tang, 2023). Virtual classrooms allow engagement from anywhere, while personalized training lets learners progress at their own pace with tailored support (Kilag et al., 2023). Online platforms foster teamwork and communication skills essential for the modern workforce (Bizami et al., 2023). Multimedia resources such as videos and interactive simulations cater to various learning styles (Akram et al., 2023). Despite the advantages of online learning, face-to-face interaction remains crucial for feedback, hands-on activities, and interpersonal skills development (Bizami et al., 2023). This combination makes blended learning effective for modern education, addressing limitations of purely online or traditional settings (Smith & Hill, 2018). Adaptability and inclusivity of blended learning meet the evolving needs of students, leveraging technology to enhance traditional practices and develop critical 21st-century skills (Dakhi et al., 2020). It prepares students to thrive in a digital world, fulfilling education’s goal of equipping individuals with essential skills for the information society.

Understanding students’ attitudes towards blended learning is crucial, as these attitudes significantly influence educational outcomes (Cao, 2023). Positive attitudes lead to better engagement and results, while negative attitudes can hinder progress (Zhang et al., 2020). Educators must measure and analyze these attitudes to fine-tune educational strategies and align them with learner preferences (Chiu, 2021). This approach ensures that blended learning techniques enhance the learning experience, supporting the goal of equipping students with skills for the digital age. The Community of Inquiry (CoI) framework supports this understanding by conceptualizing the online educational experience through social, cognitive, and teaching presences, which are critical to creating a deep and meaningful learning experience. Incorporating technology in education offers advantages like enhanced social development, independent learning, better motivation, and increased network literacy (Kikalishvili, 2023; Stec et al., 2020). However, effective education requires purposeful and strategic application of technology, involving educators, students, families, and administrators (Blau & Hameiri, 2017). Teachers must integrate technology into their strategies, and students should take responsibility for their learning (ElSayary, 2023). Support from families and administrators is also crucial (Khlaif et al., 2023).

Despite the growing importance of blended learning, there remains a significant gap in the research regarding robust measurement tools specifically designed to assess middle school students’ attitudes towards blended learning methods. While numerous studies have explored the general perceptions within blended learning environments, they have largely overlooked the critical dimension of student attitudes (Banihashem et al., 2023; Niu et al., 2023; Olpak & Ateş, 2018; Peng et al., 2023). The attitudes of students towards blended learning are pivotal as they directly impact their engagement, motivation, and overall success in these environments (Ateş & Garzon, 2022, 2023). Positive attitudes are linked to enhanced learning experiences and outcomes, while negative attitudes can significantly hinder educational progress (Cao, 2023). However, existing studies typically rely on subjective evaluations rather than rigorously validated measurement tools, resulting in inconsistent and unreliable data. This deficiency underscores the need for methodically developed tools that can provide accurate and actionable insights into students’ attitudes. Such tools are essential for educators to effectively tailor and optimize blended learning environments, ensuring they cater to the diverse needs and preferences of students.

The study aims to address this gap by developing and validating an attitude scale specifically for middle school students engaged in blended learning. This scale is intended to measure students’ perceptions accurately, thereby informing the adaptation and enhancement of teaching strategies to improve educational outcomes. Providing educators with a reliable tool to assess attitudes towards blended learning is critical for integrating educational technology more effectively and for advancing pedagogical practices.

Research Questions:

- How reliable is the newly developed attitude scale for measuring middle school students’ perceptions of blended learning?

This question is crucial as reliability determines the consistency of the scale across different contexts and samples. Establishing reliability is fundamental to ensuring that the scale produces stable and repeatable results, which is essential for its application in diverse educational settings.

- Does the attitude scale exhibit valid construct representation for attitudes toward blended learning among middle school students?

This question seeks to validate the scale’s effectiveness in capturing the complex attitudes students hold towards blended learning. Validity is key to confirming that the scale accurately measures the constructs it purports to measure, thereby providing meaningful and trustworthy data that can guide educational decisions and strategies.

2. LITERATURE REVIEW

Blended learning, also known as hybrid learning, is an educational approach that seamlessly integrates traditional face-to-face classroom instruction with online learning components to create a balanced educational experience (Dangwal, 2017). This method combines the engaging and personalized aspects of in-person teaching with the flexibility and accessibility of online formats (Singh et al., 2021). Key aspects of blended learning include direct student-instructor interactions essential for engagement and feedback; online learning materials such as multimedia lectures, interactive simulations, and digital textbooks that students can access at their convenience; interactive technologies like discussion forums, blogs, and collaborative platforms that foster active learning and teamwork; and a variety of assessment methods that provide both traditional and immediate digital feedback (Armellini et al., 2021; Ateş, 2024; López-Pellisa et al., 2021). This holistic approach ensures that blended learning adapts to diverse learning styles and needs, enhancing both the effectiveness and reach of educational programs.

In the evolving landscape of blended learning, a variety of studies have enriched our understanding of the factors that influence its effectiveness and acceptance, guiding the development of comprehensive items for a new scale intended to measure these elements. Akkoyunlu and Yılmaz-Soylu (2008) developed a refined scale consisting of 50 items, revealing two principal components that elucidate learners’ views on blended learning and its implementation. This scale underscores the complexity of the learning process and the nuanced views of learners towards blended modalities, setting a precedent for comprehensive scale development in this educational context. Building on these insights, Bervell et al. (2021) constructed the Blended Learning Acceptance Scale (BLAS), which integrates perceptions of both LMS-based online learning and face-to-face components. Their research highlights the need for a holistic approach to measuring blended learning acceptance, reflecting both digital and traditional educational experiences. Furthermore, Bhagat et al. (2023) employed exploratory and confirmatory factor analysis to develop a scale that captures three dimensions of blended learning experiences among students in Malaysia: course design, learning experience, and personal factors. This validation ensures that the scale reliably reflects varied aspects of student interaction with blended learning environments. In a similar vein, Lazar et al. (2020) introduced a multidimensional scale focusing on the acceptance of digital technology in blended learning contexts. Their work emphasizes the role of familiarity with digital tools, identifying it as a significant factor influencing learners’ engagement with technology in blended settings. Han and Ellis (2020) highlighted the importance of understanding student perceptions in blended learning environments. They developed the Perceptions of the Blended Learning Environment Questionnaire (PBLEQ), which is distinguished by its bifactor model assessing integration between different learning modalities and the specific contributions of online components. Lastly, Çemçem et al. (2024) addressed the need for assessing teachers’ readiness for blended learning. Their scale, derived from exploratory and confirmatory factor analysis, reflects a nuanced understanding of the pedagogical, technological, and adaptive skills required for effective blended teaching. These studies collectively underscore the multifaceted nature of blended learning environments. They reveal that effective assessment tools must address not only the technological aspects but also the pedagogical and interpersonal dynamics that influence both learners’ and instructors’ experiences. The comprehensive scales developed in these studies provide a robust framework for evaluating the effectiveness of blended learning implementations and offer insights that could guide future enhancements in this educational paradigm.

To integrate the findings of these studies into a coherent framework for item formation in a new blended learning scale, Table 1 aligns specific research findings with the corresponding scale items.

TABLE 1. Systematic Alignment of Research Insights with Scale Item Development for Blended Learning

| STUDY AUTHORS | KEY FINDINGS | INFLUENCED SCALE ITEMS |
|---|---|---|
| Akkoyunlu & Yılmaz-Soylu (2008) | Identified two principal components crucial for understanding learners’ views on blended learning. | Items to assess learners’ perceptions of the complexity and effectiveness of blended learning integration. |
| Bervell et al. (2021) | Developed BLAS to combine both LMS-based and face-to-face learning acceptance. | Items that measure acceptance and adaptability to both online platforms and traditional classroom settings. |
| Bhagat et al. (2023) | Explored three dimensions: course design, learning experience, and personal factors affecting blended learning. | Items covering course structure, interactive elements, and personal engagement with blended learning courses. |
| Lazar et al. (2020) | Extended Technology Acceptance Model to include familiarity with digital tools and their impact on blended learning acceptance. | Items to evaluate familiarity with and attitudes towards various digital tools used in blended learning. |
| Han & Ellis (2020) | Developed PBLEQ focusing on integration between learning modalities and the contributions of online components. | Items assessing the integration effectiveness and student perceptions of online contributions to learning outcomes. |
| Çemçem et al. (2024) | Assessed teachers’ readiness for blended learning, emphasizing pedagogical, technological, and adaptive skills. | Items designed to gauge teacher preparedness and competency in managing blended learning environments. |

3. MATERIAL AND METHOD

3.1. Study Group

The study sample comprised 259 seventh-grade students enrolled in a public school in Izmir, Turkey, during the 2018-2019 academic year. These participants were chosen through convenience sampling, a technique favored for its efficiency and practicality. This method enables researchers to quickly gather data from a readily available subset of the population, thereby facilitating the timely progression of the study without compromising the validity of the results (Çobanoğlu & Demir, 2023).

3.2. Scale Development Process

This research aimed to accurately gauge middle school students’ attitudes towards blended learning methods through a meticulously crafted scale developed in five comprehensive phases.

3.2.1. Item Formation Phase

The item formation phase initiated this process by conducting an extensive review of the literature on blended learning methods to establish a solid theoretical foundation. Central to this foundation was the Community of Inquiry (CoI) framework, which identifies three pivotal elements—social presence, cognitive presence, and teaching presence—as essential to fostering a meaningful and effective educational experience in blended learning environments. The application of the CoI framework guided the development of the scale’s items. For social presence, the scale included questions designed to assess students’ perceptions of their connectedness and social integration within the blended learning environment. These items explored aspects such as the sense of community, ease of interaction with peers, and students’ comfort levels in expressing themselves in virtual settings. In assessing cognitive presence, the scale focused on how students construct and confirm meaning through reflection and discourse. Items were crafted to measure the depth of engagement with the content, the quality of critical thinking displayed, and the ability to integrate and apply the knowledge gained in a blended setting. Teaching presence was evaluated through items that examined the design, organization, facilitation, and direction of the educational activities and content delivery. This included assessing the effectiveness of instructional methods and the level of educator support provided in both online and face-to-face components of blended learning. The insights gained from these theoretical and practical considerations were transformed into a preliminary set of 42 distinct items. These were designed to capture a broad spectrum of student attitudes towards blended learning methods, incorporating both positively and negatively framed items to ensure a balanced representation of student perspectives. 
This comprehensive approach ensures that the developed scale robustly addresses the multifaceted nature of blended learning as outlined by the CoI framework, providing a powerful tool for assessing the efficacy of blended learning environments in supporting effective educational experiences for middle school students.

3.2.2. Expert Opinion and Item Refinement

The development of the attitude scale commenced with an extensive review by a panel of experts across fields such as science education, measurement and evaluation, and linguistics. This critical phase was designed to ensure the content validity of the initial 42-item draft, aligning each item with the specific requirements of assessing attitudes within blended learning contexts. Experts conducted a thorough analysis of each item, focusing on their relevance, clarity, and alignment with the overarching goals of the study. This rigorous review process led to the refinement of the scale by removing six items that were deemed redundant or not adequately aligned with the scale’s objectives. The remaining 36 items were structured into a 5-point Likert scale, ranging from “strongly agree” to “strongly disagree,” which is a widely recognized method for measuring attitudes. This format allows for a nuanced capture of responses, facilitating a detailed analysis of students’ attitudes towards blended learning. This revised scale provides a robust tool for accurately gauging and interpreting diverse educational outcomes in blended learning settings.
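The scoring of a 5-point Likert scale that mixes positively and negatively worded items can be sketched as follows. This is a minimal illustration and not the authors’ documented procedure: the text does not state how responses were coded, so the numeric mapping, the label of the middle option ("undecided"), and the reverse-coding of negative items (6 minus the raw score) are assumptions based on common practice. The function names are hypothetical.

```python
# Assumed mapping of response labels to scores; the source names only the
# two end points, so the three middle labels are illustrative.
LIKERT_SCORES = {
    "strongly agree": 5,
    "agree": 4,
    "undecided": 3,
    "disagree": 2,
    "strongly disagree": 1,
}


def score_response(label, negatively_worded=False):
    """Convert one response label to a score, reverse-coding negative items."""
    raw = LIKERT_SCORES[label.strip().lower()]
    # On a 1-5 scale, reverse coding maps 5->1, 4->2, 3->3, 2->4, 1->5.
    return 6 - raw if negatively_worded else raw


def total_score(responses, negative_items):
    """Sum the scores of one student.

    responses: dict mapping item number -> response label
    negative_items: set of item numbers that are negatively worded
    """
    return sum(
        score_response(label, item in negative_items)
        for item, label in responses.items()
    )
```

Under this convention a higher total always indicates a more favorable attitude, regardless of how an individual item is worded.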

3.2.3. Pre-Application and Scale Testing

Subsequently, the refined scale underwent a pre-application phase where it was administered to a select group of 14 middle school students. This phase was crucial for initial real-world testing of the scale’s practical application, ensuring the items were understandable and relevant to the target demographic. The feedback received was instrumental in making final adjustments to the scale, optimizing it for broader application.

3.2.4. Comprehensive Application and Data Collection

The scale was then administered to a larger cohort of 267 students, yielding data from the 259 participants who formed the study sample. This phase was critical for assessing the scale’s effectiveness in a real educational setting. The importance of the study was emphasized to students in order to encourage sincere and thoughtful responses. Such extensive data collection reinforced not only the scale’s practical utility but also its capacity to capture a wide array of attitudes towards blended learning.

3.2.5. Analysis of Data

The data analysis process was structured to assess and establish the construct validity of the newly developed attitude scale through factor analysis. Factor analysis is a statistical method used to identify underlying relationships between measured variables (Kline, 2014). It reduces a large number of variables to a smaller number of factors. Factors are essentially latent variables that represent clusters of related items within the dataset. These factors help in understanding the structure of the data and in identifying patterns that are not immediately apparent (Bartholomew et al., 2011).

Initially, the suitability of the data for factor analysis was confirmed by the Kaiser-Meyer-Olkin (KMO) measure, which yielded a coefficient suggesting excellent sampling adequacy, and Bartlett’s Test of Sphericity, which indicated significant correlations among the items. This preliminary analysis set the stage for a more detailed exploration using Principal Component Analysis (PCA) with varimax rotation. Varimax rotation simplifies the interpretation of factor analysis results by maximizing the variance of the squared loadings of each factor across variables, making the structure clearer and more interpretable (Abdi, 2003). This step was crucial to discerning the underlying structures within the data, culminating in the identification of a two-factor structure that delineated the dimensions of students’ attitudes towards blended learning. The factors extracted during this phase were validated to ensure their relevance and reliability. The internal consistency of each factor was supported by high Cronbach’s alpha values of .967 for the first factor and .923 for the second, indicating excellent reliability. These factors were further scrutinized through item-total correlation and item discrimination analyses, which evaluate how well each item contributes to the overarching construct measured by the scale. The examination of these values reinforced not only the scale’s reliability but also its validity in capturing nuanced aspects of students’ perceptions and attitudes. This approach to data analysis ensured that the scale provides reliable, valid insights for educators and researchers who aim to tailor and enhance blended learning strategies. The integration of these analytical methods underscores the robustness of the scale, offering a dependable tool for assessing middle school students’ attitudes towards blended learning and informing the development of more effective educational practices.
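For readers unfamiliar with the reliability coefficient reported above, Cronbach’s alpha for a set of k items is k/(k-1) multiplied by one minus the ratio of the summed item variances to the variance of the total scores. The sketch below computes it in plain Python. The function name and the toy data in the usage note are illustrative assumptions and are not the study’s data or software.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents-by-items table of scores.

    rows: list of lists, one inner list of item scores per respondent.
    Uses sample variances (n - 1 in the denominator).
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Variance of each item across respondents.
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    # Variance of the respondents' total scores.
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)
```

As a usage example, `cronbach_alpha([[1, 2], [2, 1], [3, 4], [4, 3]])` gives 0.75, while a table in which every respondent gives the same answer to all items (with answers differing between respondents) gives 1.0.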

3.3. Compliance with Ethical Rules

Ethical principles and rules were followed at all stages of this research. The Manisa Celal Bayar University Science Research Ethics Committee approved the study as ethically appropriate under protocol no. 07/11/2018-E.95399. The ethics committee approval document is presented in the Appendix.

4. RESULTS

4.1. Item Analysis

The item analysis was conducted to ensure that each item on the scale effectively discriminates between respondents with high and low attitudes toward blended learning. This is crucial for validating the scale’s effectiveness in capturing the nuanced perceptions of middle school students regarding blended learning. Following a method recommended by Tavşancıl (2006), we compared the average scores assigned to each item by the top 27% and the bottom 27% of respondents. Specifically, the highest scoring 70 students (approximately 27% of the 259 participants) were compared with the lowest scoring 70 students. This technique helps determine if the items are sensitive enough to capture variations in student attitudes, a pivotal aspect of the scale’s utility. To achieve this, the independent groups t-test was used due to the statistical independence between the upper and lower scoring groups, allowing for a clear assessment of differences in responses. As shown in Table 2, all items on the scale exhibited significant levels of discrimination, indicating that they effectively distinguish between high and low scorers. This high level of item discrimination is essential for confirming the scale’s reliability and validity, ensuring it accurately measures students’ attitudes toward blended learning. By validating the effectiveness of each item, this analysis directly supports the first research question regarding the reliability of the newly developed attitude scale. The consistent high discrimination of items demonstrates the scale’s capability to reliably differentiate between varying levels of student attitudes, ensuring robust measurement. The second research question, which concerns the scale’s validity in representing attitudes toward blended learning, is addressed through the comprehensive item analysis combined with factor analysis. 
The significant discrimination levels observed for each item ensure that the scale accurately captures the intended constructs, providing a valid measure of students’ attitudes toward blended learning.
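The upper-lower 27% comparison described above can be sketched as follows. This is a minimal illustration under stated assumptions: respondents are ranked by total scale score, the group size is the rounded 27% of the sample, and the pooled-variance (Student) form of the independent-samples t statistic is used. The text does not specify which t-test variant or software was applied, and the function names are hypothetical.

```python
import math
from statistics import mean, stdev


def extreme_groups(total_scores, fraction=0.27):
    """Return index lists of the lowest- and highest-scoring respondents."""
    n_group = round(len(total_scores) * fraction)
    if n_group < 2:
        raise ValueError("sample too small for an extreme-groups comparison")
    order = sorted(range(len(total_scores)), key=lambda i: total_scores[i])
    return order[:n_group], order[-n_group:]


def pooled_t(lower, upper):
    """Independent-samples t statistic with pooled variance (upper - lower)."""
    n1, n2 = len(lower), len(upper)
    pooled_var = ((n1 - 1) * stdev(lower) ** 2 + (n2 - 1) * stdev(upper) ** 2) / (
        n1 + n2 - 2
    )
    return (mean(upper) - mean(lower)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))


def item_discrimination(item_scores, total_scores):
    """t statistic comparing one item's scores in the two extreme groups."""
    low_idx, high_idx = extreme_groups(total_scores)
    return pooled_t(
        [item_scores[i] for i in low_idx], [item_scores[i] for i in high_idx]
    )
```

With 259 respondents, `extreme_groups` returns two groups of 70, matching the group size stated in the text. A large positive t for an item indicates that high scorers on the whole scale also score higher on that item.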

TABLE 2. Results of item analysis regarding the blended learning methods

| Item | Lower group M | Lower group SD | Upper group M | Upper group SD | t (lower vs. upper 27%) |
|---|---|---|---|---|---|
| Item 1 | 3.558 | .936 | 4.985 | .121 | 12.456 |
| Item 3 | 3.088 | .973 | 4.970 | .170 | 15.710 |
| Item 4 | 2.647 | 1.075 | 4.926 | .314 | 16.771 |
| Item 6 | 1.176 | .621 | 2.695 | .944 | 11.107 |
| Item 7 | 3.250 | .853 | 4.970 | .170 | 16.312 |
| Item 8 | 3.470 | 1.071 | 4.941 | .293 | 10.915 |
| Item 10 | 3.044 | .904 | 4.838 | .535 | 14.069 |
| Item 11 | 3.250 | 1.070 | 5.000 | .000 | 13.483 |
| Item 12 | 3.044 | .921 | 4.970 | .170 | 16.956 |
| Item 15 | 2.882 | .970 | 4.867 | .341 | 15.918 |
| Item 16 | 2.941 | .861 | 4.897 | .391 | 17.037 |
| Item 20 | 2.867 | 1.063 | 4.911 | .333 | 15.119 |
| Item 22 | 3.088 | .988 | 4.985 | .121 | 15.707 |
| Item 23 | 3.323 | .761 | 4.926 | .262 | 16.402 |
| Item 25 | 2.794 | 1.030 | 4.926 | .262 | 16.537 |
| Item 26 | 2.764 | .899 | 4.882 | .406 | 17.689 |
| Item 28 | 2.970 | .913 | 4.985 | .121 | 18.024 |
| Item 29 | 2.926 | .966 | 4.985 | .121 | 17.423 |
| Item 31 | 3.411 | 1.025 | 4.970 | .170 | 12.365 |
| Item 34 | 2.808 | 1.011 | 4.941 | .293 | 16.700 |
| Item 35 | 2.985 | 1.085 | 4.970 | .170 | 14.896 |
| Item 36 | 3.161 | 1.153 | 4.985 | .121 | 12.959 |
| Item 2 | 3.970 | .845 | 4.705 | .490 | 6.201 |
| Item 5 | 2.205 | .955 | 1.455 | .656 | 5.337 |
| Item 9 | 3.514 | 1.139 | 4.750 | .436 | 8.349 |
| Item 13 | 3.779 | .990 | 4.794 | .407 | 7.815 |
| Item 14 | 3.779 | .959 | 4.794 | .407 | 8.027 |
| Item 17 | 3.589 | 1.271 | 4.475 | 1.23 | 5.603 |
| Item 18 | 3.948 | .981 | 4.776 | .674 | 6.272 |
| Item 19 | 3.573 | 1.012 | 4.735 | .613 | 8.094 |
| Item 21 | 3.808 | .950 | 4.661 | .682 | 6.011 |
| Item 24 | 3.691 | .981 | 4.705 | .520 | 7.535 |
| Item 27 | 3.948 | 1.121 | 4.734 | .608 | 7.624 |
| Item 30 | 3.529 | 1.085 | 4.764 | .427 | 8.732 |
| Item 32 | 3.470 | 1.177 | 4.794 | .407 | 8.758 |
| Item 33 | 3.426 | 1.200 | 4.735 | .613 | 8.003 |

M: Mean, SD: Standard Deviation, Significance Level: p<.05

4.2. Exploratory Factor Analysis (EFA)

EFA was used to reassess and refine the structure of our scale by examining the interrelationships among the scale items. To verify the appropriateness of conducting an EFA, the KMO measure and Bartlett’s Test of Sphericity were employed. These tests assess the adequacy of the sample and the suitability of the data for factor analysis. The KMO test, which measures sampling adequacy, returned a value of 0.943, suggesting an excellent fit for factor analysis, as values closer to 1 indicate data more suitable for structure detection. Typically, a KMO value above 0.90 is considered excellent, while values below 0.50 are deemed unacceptable for a reliable factor analysis. Furthermore, Bartlett’s Test of Sphericity, which tests the null hypothesis that the variables are uncorrelated in the population (that is, that the correlation matrix is an identity matrix), confirmed that the variables are sufficiently correlated for EFA. The chi-square statistic from this test was significant (χ2 = 1693.582, p < .001), so the null hypothesis of uncorrelated variables was rejected.
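For reference, the two suitability statistics discussed above are conventionally defined as follows. The notation is an assumption introduced here for illustration: $r_{ij}$ denotes the correlation between items $i$ and $j$, $a_{ij}$ their partial correlation given all other items, $R$ the item correlation matrix, $n$ the number of respondents, and $p$ the number of items.

```latex
% Kaiser-Meyer-Olkin measure of sampling adequacy
\mathrm{KMO} = \frac{\sum_{i \neq j} r_{ij}^{2}}
                    {\sum_{i \neq j} r_{ij}^{2} + \sum_{i \neq j} a_{ij}^{2}}

% Bartlett's test of sphericity (H_0: R is an identity matrix)
\chi^{2} = -\left( n - 1 - \frac{2p + 5}{6} \right) \ln \lvert R \rvert ,
\qquad df = \frac{p(p - 1)}{2}
```

KMO approaches 1 when the partial correlations are small relative to the ordinary correlations, which is the situation in which shared factors can account for the relationships among the items.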

Upon confirming data suitability, principal components analysis was conducted, utilizing the varimax rotation. The rotation clarified the factor structure, enabling us to isolate and interpret the primary dimensions represented by the scale items. This methodological approach ensured that the derived factors were both statistically robust and meaningful, reflecting coherent underlying constructs that the scale aims to measure (see Table 3).
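The objective that varimax rotation maximizes can be written compactly. The expression below is the raw (unnormalized) varimax criterion; statistical packages commonly apply Kaiser normalization first, and the text does not state which variant was used. The symbols are assumptions introduced for illustration: $\lambda_{ij}$ is the rotated loading of item $i$ on factor $j$, $p$ the number of items, and $m$ the number of factors.

```latex
V = \sum_{j=1}^{m} \left[ \frac{1}{p} \sum_{i=1}^{p} \lambda_{ij}^{4}
    - \left( \frac{1}{p} \sum_{i=1}^{p} \lambda_{ij}^{2} \right)^{2} \right]
```

Each bracketed term is the variance of the squared loadings within one factor, so the criterion is largest when every factor has a few loadings near 1 in absolute value and the rest near 0.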

Following the execution of the factor analysis, two distinct factors emerged, each with eigenvalues exceeding 1. According to established analytical standards, the presence of factors that cumulatively explain at least two-thirds of the total variance in the data is indicative of their significance within the model. This threshold is crucial as it helps identify the most impactful factors that encapsulate the core dimensions being measured by the scale. The eigenvalues of these identified factors, alongside their respective contributions to the explained variance, effectively delineate the underlying constructs captured by the scale (see Table 4).

TABLE 3. KMO and Bartlett’s sphericity (BS) test results for the blended learning methods attitude scale

| KMO value | BS test χ2 | BS test df | BS test p |
|---|---|---|---|
| .943 | 1693.582 | 593 | .000* |

TABLE 4. Characteristics of factors

| Factor | Eigenvalue | Variance explained | Total variance explained |
|---|---|---|---|
| Factor 1 | 16.736 | 46.488% | 57.035% |
| Factor 2 | 3.641 | 10.704% | |

The strength of the factor structure of the scale is directly proportional to the size of the variance ratios derived from the analysis. A robust factor structure is indicated by higher variance ratios, which demonstrate that the factors identified capture a significant proportion of the total variance in the dataset. Generally, a variance ratio falling within the range of 40% to 60% is deemed sufficient. This range suggests that the factors adequately represent the underlying constructs without overfitting the data, thereby ensuring that the scale is both effective and efficient in measuring the intended attributes.
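The link between eigenvalues and explained variance can be sketched as follows. When principal components are extracted from a correlation matrix, each standardized item contributes one unit of variance, so a factor’s share of the total variance is its eigenvalue divided by the number of items. The sketch assumes a correlation-matrix analysis and the eigenvalue-greater-than-one retention rule mentioned in the text. The function name and the eigenvalues in the usage note are hypothetical and are not the study’s values.

```python
def explained_variance(eigenvalues, n_items, threshold=1.0):
    """Retain factors by the eigenvalue > threshold rule and report variance shares.

    eigenvalues: eigenvalues of the item correlation matrix
    n_items: number of items (equals the total variance for standardized items)
    Returns (retained eigenvalues, percent variance per factor, cumulative percent).
    """
    retained = [ev for ev in eigenvalues if ev > threshold]
    shares = [100.0 * ev / n_items for ev in retained]
    return retained, shares, sum(shares)
```

For a hypothetical 10-item scale with eigenvalues `[5.0, 2.0, 0.9, 0.7, 0.5, 0.4, 0.2, 0.15, 0.1, 0.05]`, two factors are retained, explaining 50% and 20% of the variance, or 70% cumulatively.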

TABLE 5. Factor loadings of scale items

| SCALE ITEMS | FACTOR 1 LOADING | FACTOR 2 LOADING |
|---|---|---|
| Item 1 | 0.759 | |
| Item 3 | 0.818 | |
| Item 4 | 0.785 | |
| Item 6 | 0.762 | |
| Item 7 | 0.789 | |
| Item 8 | 0.602 | |
| Item 10 | 0.629 | |
| Item 11 | 0.759 | |
| Item 12 | 0.807 | |
| Item 15 | 0.723 | |
| Item 16 | 0.782 | |
| Item 20 | 0.773 | |
| Item 22 | 0.701 | |
| Item 23 | 0.701 | |
| Item 25 | 0.825 | |
| Item 26 | 0.789 | |
| Item 28 | 0.856 | |
| Item 29 | 0.847 | |
| Item 31 | 0.647 | |
| Item 34 | 0.746 | |
| Item 35 | 0.776 | |
| Item 36 | 0.790 | |
| Item 2 | | 0.563 |
| Item 5 | | 0.483 |
| Item 9 | | 0.785 |
| Item 13 | | 0.787 |
| Item 14 | | 0.740 |
| Item 17 | | 0.834 |
| Item 18 | | 0.748 |
| Item 19 | | 0.711 |
| Item 21 | | 0.664 |
| Item 24 | | 0.734 |
| Item 27 | | 0.855 |
| Item 30 | | 0.699 |
| Item 32 | | 0.808 |
| Item 33 | | 0.773 |

As detailed in Table 5, the scale comprises 36 items, with 22 categorized as positive and 14 as negative. The factors have been named according to the predominant sentiment of the items they include, which simplifies the interpretation and discussion of the scale’s structure. Specifically, the first factor is labeled “Positive” and includes items 1, 3, 4, 6, 7, 8, 10, 11, 12, 15, 16, 20, 22, 23, 25, 26, 28, 29, 31, 34, 35, and 36. The second factor, labeled “Negative,” encompasses items 2, 5, 9, 13, 14, 17, 18, 19, 21, 24, 27, 30, 32, and 33.

To further enhance the clarity and utility of the item categorization, the scale items have been grouped into three key dimensions: “Engagement,” “Usefulness,” and “Ease of Use.” These dimensions were chosen to represent the primary areas of interest in evaluating students’ attitudes towards blended learning. Engagement assesses how blended learning environments affect students’ involvement and interaction in the learning process. Items in this category measure aspects such as student participation, motivation, and the extent to which blended learning fosters active learning. Positive engagement items include statements like “I greatly enjoy studying the lesson with the blended learning methods” and “My desire to learn increases in the lesson taught with the blended learning methods.” Negative engagement items include statements like “I am afraid of failing the lesson taught with the blended learning methods” and “I find the teaching of the lesson with the blended learning methods boring.” Usefulness evaluates the perceived benefits and effectiveness of blended learning methods in enhancing educational outcomes. This includes how well blended learning supports academic achievement, facilitates understanding of course material, and contributes to skill development. Positive usefulness items include statements like “I think the lesson taught with the blended learning methods is useful” and “I think that the information I learned in the lesson taught with the blended learning methods will last permanently.” Negative usefulness items include statements like “I have difficulty in understanding the lesson taught with the blended learning methods” and “I do not think that the lesson taught with the blended learning methods is useful.” Ease of Use captures students’ perceptions of how user-friendly and accessible the blended learning tools and platforms are. 
Items in this category address the technological aspects, such as the ease of navigating online resources and the overall usability of the blended learning system. Positive ease of use items include statements like “I think that the lesson taught with the blended learning methods is understandable” and “Teaching the lesson with blended learning methods increases my motivation.” Negative ease of use items include statements like “I find it difficult to follow the lesson taught with the blended learning methods” and “I find it difficult to communicate with my friends in the lesson taught with the blended learning methods.”

The examination of factor loadings elucidates the variability in the correlation of scale items with the identified factors, providing a foundational assessment for optimizing blended learning strategies. Items with higher loadings, such as Item 28 (0.856) and Item 29 (0.847), demonstrate robust correlations with positive perceptions toward blended learning. This correlation aligns with educational frameworks like the Community of Inquiry, which emphasizes the importance of cognitive presence for meaningful learning experiences. Conversely, items exhibiting the lowest loadings, such as Item 5 (0.483) and Item 2 (0.563), may indicate aspects that are perceived as less central to, or less effectively captured within, student perceptions of blended learning. For instance, the lower loadings of items related to technical ease of use suggest that these elements, while important, may not directly impact students’ overall attitudes as prominently as other dimensions. This variance underscores potential areas for refining the scale, particularly in terms of improving how these items are formulated or contextualized to better resonate with core educational constructs. Statistically, the disparity in loadings, ranging from very high to moderately low, supports a robust factor structure of the scale, affirming its capacity to differentiate between the influential and less impactful aspects of blended learning experiences. This statistical validation is further enhanced by theoretical underpinnings, providing a nuanced understanding that not only corroborates the scale’s construct but also aligns closely with blended learning theories that advocate for a balanced integration of online and face-to-face educational components.

4.3. Confirmatory Factor Analysis

The outcomes of Confirmatory Factor Analysis (CFA) provided a robust examination of the model’s structure through the modification indices, which suggest possible adjustments for improving model fit. Additionally, the compatibility of the model with the empirical data was quantified through various fit indices detailed in Table 6.

TABLE 6. Findings related to CFA

| INDEX | PERFECT FIT CRITERIA | ACCEPTABLE FIT CRITERIA | RESEARCH FINDING | RESULT * |
| --- | --- | --- | --- | --- |
| χ²/df | 0 ≤ χ²/df ≤ 3 | 3 ≤ χ²/df ≤ 5 | 2.84 | Perfect Fit |
| GFI | .95 ≤ GFI ≤ 1.00 | .90 ≤ GFI ≤ .95 | 0.91 | Acceptable Fit |
| CFI | .95 ≤ CFI ≤ 1.00 | .90 ≤ CFI ≤ .95 | 0.93 | Acceptable Fit |
| NFI | .95 ≤ NFI ≤ 1.00 | .90 ≤ NFI ≤ .95 | 0.92 | Acceptable Fit |
| AGFI | .90 ≤ AGFI ≤ 1.00 | .85 ≤ AGFI ≤ .90 | 0.87 | Acceptable Fit |
| RMSEA | .00 ≤ RMSEA ≤ .05 | .05 ≤ RMSEA ≤ .10 | 0.086 | Acceptable Fit |
| SRMR | .00 ≤ SRMR ≤ .05 | .05 ≤ SRMR ≤ .08 | 0.076 | Acceptable Fit |

Note. *Baumgartner & Homburg (1996); Bentler (1980); Kline (2023); Hu & Bentler (1999)

In this study, the chi-square test yielded p < .001, indicating a significant difference between the expected and observed covariance matrices. Because the chi-square test is sensitive to sample size, additional fit indices for the Confirmatory Factor Analysis (CFA) were evaluated to assess the model’s validity. The ratio of chi-square to degrees of freedom (χ²/df) was 2.84, indicating a perfect fit, as values up to 3 indicate a perfect fit and values between 3 and 5 an acceptable fit (Kline, 2023). The Goodness of Fit Index (GFI) was 0.91, indicating an acceptable fit, as values between 0.90 and 0.95 are considered acceptable. The Comparative Fit Index (CFI) value of 0.93 indicated an acceptable fit, with values between 0.90 and 0.95 considered acceptable (Hu & Bentler, 1999; Tabachnick & Fidell, 2001). Similarly, the Normed Fit Index (NFI) was 0.92, which also signifies an acceptable fit, as values between 0.90 and 0.95 are acceptable (Kline, 2005). The Standardized Root Mean Square Residual (SRMR) value was 0.076, showing an acceptable fit, as values between 0.05 and 0.08 are acceptable. The Adjusted Goodness of Fit Index (AGFI) was 0.87, indicating an acceptable fit, as values between 0.85 and 0.90 are acceptable. Lastly, the Root Mean Square Error of Approximation (RMSEA) value was 0.086, demonstrating an acceptable fit, with values between 0.05 and 0.10 being acceptable (Hu & Bentler, 1995; Kline, 2014). Taken together, these results indicate that the model fits the data adequately, with every index falling within the perfect or acceptable range listed in Table 6.
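
The classification reported in Table 6 amounts to a threshold lookup, which can be sketched as follows. This is an illustrative sketch only: the names `FIT_CRITERIA`, `OBSERVED`, and `classify_fit`, and the fallback label "Poor Fit", are assumptions introduced here, whereas the cut-offs and observed values are copied from Table 6.

```python
# Cut-offs copied from Table 6 as (lower, upper) bounds.
# FIT_CRITERIA, OBSERVED and classify_fit are hypothetical names used for illustration.
FIT_CRITERIA = {
    "chi2/df": {"perfect": (0.00, 3.00), "acceptable": (3.00, 5.00)},
    "GFI":     {"perfect": (0.95, 1.00), "acceptable": (0.90, 0.95)},
    "CFI":     {"perfect": (0.95, 1.00), "acceptable": (0.90, 0.95)},
    "NFI":     {"perfect": (0.95, 1.00), "acceptable": (0.90, 0.95)},
    "AGFI":    {"perfect": (0.90, 1.00), "acceptable": (0.85, 0.90)},
    "RMSEA":   {"perfect": (0.00, 0.05), "acceptable": (0.05, 0.10)},
    "SRMR":    {"perfect": (0.00, 0.05), "acceptable": (0.05, 0.08)},
}

# Observed values copied from the "Research finding" column of Table 6.
OBSERVED = {
    "chi2/df": 2.84, "GFI": 0.91, "CFI": 0.93, "NFI": 0.92,
    "AGFI": 0.87, "RMSEA": 0.086, "SRMR": 0.076,
}


def classify_fit(index, value):
    """Return the fit label for one index, checking the perfect range first."""
    criteria = FIT_CRITERIA[index]
    low, high = criteria["perfect"]
    if low <= value <= high:
        return "Perfect Fit"
    low, high = criteria["acceptable"]
    if low <= value <= high:
        return "Acceptable Fit"
    # Label for values outside both ranges (an assumption; Table 6 does not name it).
    return "Poor Fit"


results = {name: classify_fit(name, value) for name, value in OBSERVED.items()}
for name, label in results.items():
    print(f"{name}: {OBSERVED[name]} -> {label}")
```

Checking the perfect range before the acceptable range resolves the shared boundary values in Table 6 (for example, a GFI of exactly .95) in favour of the stricter label.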

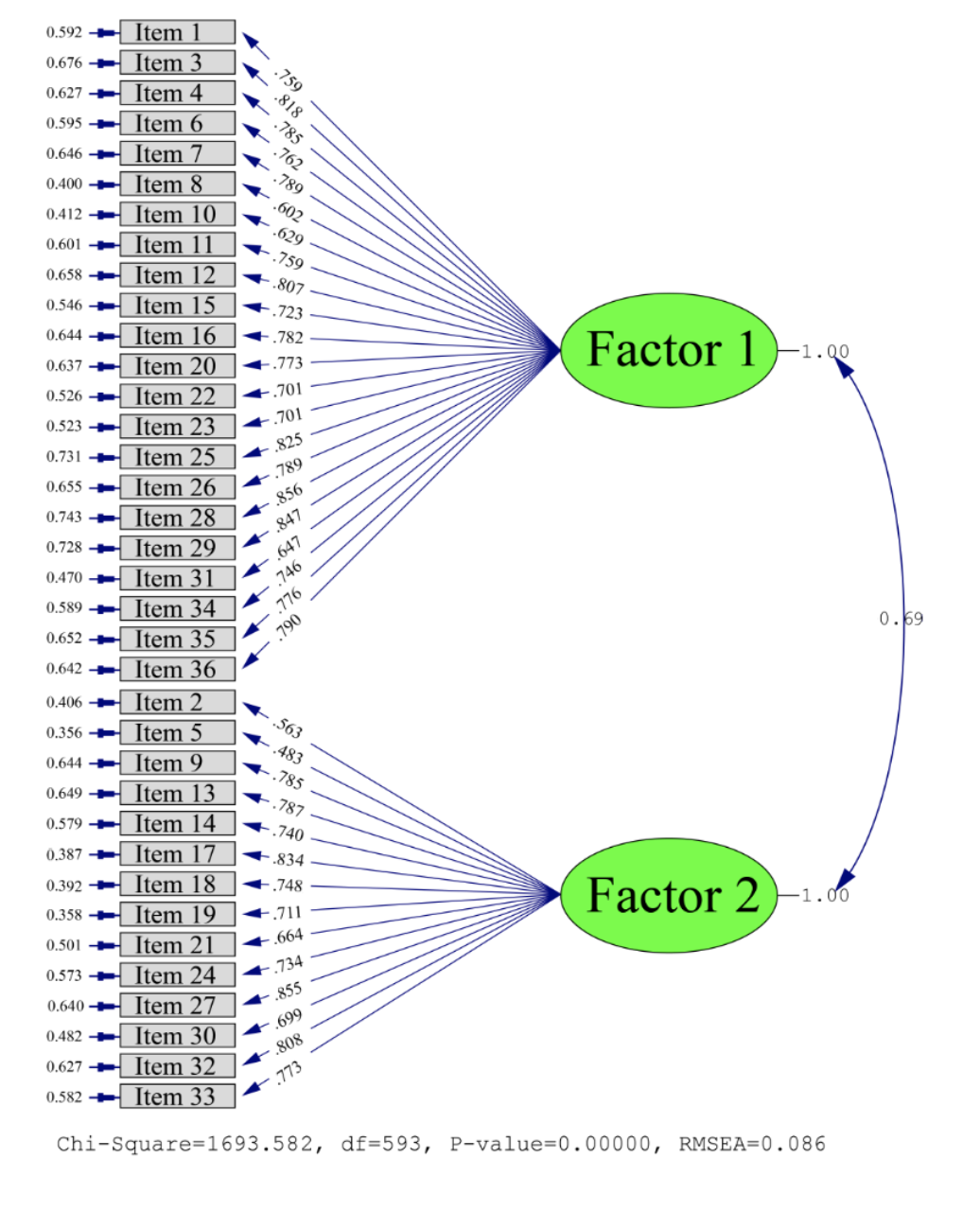

The path diagram for the blended learning methods attitude scale in the CFA model is shown in Figure 3. This diagram illustrates the model’s structure, including factor loadings and common factor variances for each item. The strong factor loadings indicate a robust goodness of fit, suggesting the items effectively measure the intended constructs. The diagram visually reinforces the analytical findings, providing a clear overview of the scale’s structural validity and cohesive factor associations.

FIGURE 3. Path diagram of the blended learning methods attitude scale for the CFA model

4.4. Reliability Analysis

4.4.1. Internal consistency reliability-Cronbach’s α coefficient

The resulting Cronbach’s α values were analyzed for both the individual subscales and the entire scale. These values have been systematically tabulated and are detailed in Table 7. The presentation of these values allows for a nuanced understanding of the reliability of each component of the scale as well as the scale as a whole, highlighting the scale’s overall ability to provide consistent and dependable results across various dimensions of the blended learning attitude construct.

TABLE 7. Reliability analysis results of blended learning methods attitude scale (Cronbach’s α)

| FACTOR | Items | Cronbach’s α |
| --- | --- | --- |
| Factor 1 | 1, 3, 4, 6, 7, 8, 10, 11, 12, 15, 16, 20, 22, 23, 25, 26, 28, 29, 31, 34, 35, 36 | .967 |
| Factor 2 | 2, 5, 9, 13, 14, 17, 18, 19, 21, 24, 27, 30, 32, 33 | .923 |
| Total | | .847 |

A Cronbach’s α value of 0.70 or higher is generally considered indicative of satisfactory reliability for scale scores (Cohen et al., 2007). The values in Table 7 (.967 for Factor 1, .923 for Factor 2, and .847 for the total scale) all exceed this threshold, confirming that the scale, along with its sub-dimensions, possesses robust internal reliability. Meeting this benchmark indicates that the items within the scale consistently measure the same underlying attributes, providing a reliable and stable gauge of the constructs intended to be assessed. It also signals that the scale is dependable for educational and psychological assessments, reflecting a high degree of internal consistency among the items.
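
For readers who wish to reproduce this kind of reliability check, Cronbach’s α can be computed from item-level responses with the standard formula α = k/(k−1) · (1 − Σ item variances / variance of total scores). The sketch below is a minimal illustration: the function name `cronbach_alpha` and the responses in `demo_items` are invented for demonstration and are not the study’s data.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items, each a list with one score per respondent."""
    k = len(item_scores)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Total score of each respondent across all items.
    totals = [sum(responses) for responses in zip(*item_scores)]
    sum_item_variances = sum(variance(item) for item in item_scores)
    return (k / (k - 1)) * (1 - sum_item_variances / variance(totals))


# Synthetic 5-point responses from six hypothetical respondents to three items.
demo_items = [
    [4, 5, 3, 2, 4, 1],
    [5, 5, 3, 1, 4, 2],
    [4, 4, 2, 2, 5, 1],
]

alpha = cronbach_alpha(demo_items)
print(f"alpha = {alpha:.3f}; meets the 0.70 threshold: {alpha >= 0.70}")
```

The same function could be applied separately to the items of each factor and to all items together, mirroring the three rows of Table 7.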

4.4.2. Consistency of the scale using Pearson correlation coefficients (r)

Pearson correlation coefficients (r) were calculated between the two factor scores and the total scale score. All of these relationships were statistically significant, with p values less than 0.05. These findings, detailed in Table 8, indicate that the factors are significantly correlated with each other and with the total score, supporting the scale’s conceptual coherence and the interdependence of its elements.

TABLE 8. Pearson correlation of the relationships between factors and scale scores

| FACTORS | FACTOR 1 | FACTOR 2 | TOTAL |
| --- | --- | --- | --- |
| Factor-1 | 1 | -.603** | .868** |
| Factor-2 | -.603** | 1 | -.132* |
| Total | .868** | -.132* | 1 |

Note. Cell values are Pearson correlation coefficients (r). ** p < .01; * p < .05

As detailed in Table 8, there is a moderate negative correlation (r = -.603) between the two sub-factors, significant at the p < .01 level. This indicates that as scores on one factor increase, scores on the other factor tend to decrease, suggesting a divergent relationship between the constructs measured by these factors. Conversely, a high positive correlation (r = .868) is observed between the first factor (Factor 1) and the overall scale score, also significant at the p < .01 level. This strong positive relationship indicates that higher scores on Factor 1 are closely associated with higher overall scores on the scale, affirming Factor 1’s substantial influence on the scale’s composite score. Additionally, a weak but significant negative correlation (r = -.132) exists between the second factor (Factor 2) and the total scale score, significant at the p < .05 level. This suggests that Factor 2 has a slight inverse relationship with the overall scale score, though the association is small.
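
One way to make the relative size of these coefficients concrete is to square each r, which gives the proportion of variance two scores share. The sketch below applies this to the three coefficients reported in Table 8; the names `REPORTED_R` and `shared_variance` are hypothetical and introduced only for illustration.

```python
# Correlation coefficients copied from Table 8.
REPORTED_R = {
    ("Factor 1", "Factor 2"): -0.603,
    ("Factor 1", "Total"): 0.868,
    ("Factor 2", "Total"): -0.132,
}


def shared_variance(r):
    """Proportion of variance shared by two variables with Pearson correlation r."""
    return r * r


for (first, second), r in REPORTED_R.items():
    share = shared_variance(r)
    print(f"{first} vs {second}: r = {r:+.3f}, shared variance = {share:.1%}")
```

Under this reading, Factor 1 and the total score share roughly three quarters of their variance, the two factors share roughly a third, and Factor 2 and the total score share under 2%, which is consistent with the description of the last relationship as minimal.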

5. DISCUSSION

This research significantly enhances our understanding of the blended learning methods by showcasing the diverse impacts of blended learning environments on student attitudes. The newly developed scale not only corroborates but also expands upon previous theoretical assertions, such as those proposed by Lazar et al. (2020) and Tzafilkou et al. (2021). It does so by meticulously quantifying the influence of specific pedagogical approaches within blended learning on the attitudinal dimensions of middle school students. The results reveal that perceptions of blended learning are multifaceted and considerably varied, highlighting the coexistence of both positive and negative attitudes towards these learning environments. This complexity is crucial for extending theoretical frameworks and provides a nuanced view of how blended learning affects student engagement and learning outcomes (Bouilheres et al., 2020; Chiu, 2021; Fisher et al., 2021), suggesting that the educational impact of blended learning is not uniformly positive but rather dependent on a variety of interrelated factors.

Building on the nuanced understanding of student attitudes revealed in the previous analysis, these findings significantly inform the evolution of theoretical frameworks within the field of educational technology and pedagogy (Fawns, 2022). The discovery of a two-factor structure encompassing both positive and negative attitudes toward blended learning environments underscores the need for future theoretical models to incorporate these dual dimensions. By acknowledging the complexity of student attitudes, educators and researchers can better predict and enhance student outcomes (Cao, 2023; Yu et al., 2022). This understanding could facilitate the design of targeted interventions aimed at amplifying positive attitudes and alleviating negative ones (Olpak & Ateş, 2018). Such strategic interventions are pivotal for cultivating more effective and adaptive learning environments that respond dynamically to the varied needs and perceptions of students. This approach not only complements the findings that attitudes towards blended learning are varied and complex but also leverages this insight to propose practical solutions aimed at optimizing educational outcomes (Ateş & Garzon, 2022).

Furthermore, the findings of this study provide concrete insights into the design and implementation of blended learning environments. By identifying key factors that influence student attitudes towards blended learning, such as engagement levels and the effectiveness of digital tools, we can directly inform the instructional design processes. This approach ensures that blended learning techniques are not only aligned with educational outcomes but are also responsive to the diverse needs of students. The development of the attitude scale, validated through this research, enables educators to fine-tune these environments, ensuring they are conducive to learning and growth. Thus, by integrating our findings with existing blended learning strategies, we can enhance the practical application of these instructional methodologies, fostering environments that support both student engagement and academic success (Cigdem & Oncu, 2024).

Expanding on these practical insights, the research has incorporated specific attitudinal factors such as social interaction, technological ease of use, and pedagogical effectiveness into the attitude scale. This integration ensures that the scale accurately captures the essential elements that define the student experience in blended learning environments (Al-Maroof et al., 2022; Ohanu et al., 2023). These factors underscore the complexity of how students interact with and respond to blended environments, integrating both emotional and cognitive responses with their social and technological contexts (Bizami et al., 2023). By exploring these deeper layers of influence, this study not only enhances our understanding of blended learning dynamics but also enriches the theoretical models used to interpret these phenomena. This refined understanding provides a foundation for designing more effective blended learning strategies that are comprehensively responsive to all dimensions of student experience, thus aligning closely with the practical applications discussed earlier and extending their impact on educational practice.

Building upon the concept of “attitudinal duality” and the complexities it introduces, the collective insights from this study enhance our understanding of the dynamic impacts of blended learning. They lay a robust foundation for refining educational theories to more accurately reflect the intricacies of contemporary educational environments. This research challenges the current theoretical landscape by illustrating that the true impact of blended learning is not singular, but rather multifaceted and influenced by a constellation of interrelated factors. These include the balance of pedagogical approaches, the integration of technology, and the psychological well-being of students. By acknowledging these diverse and interconnected elements, this study enriches existing theories, prompting a reevaluation of how blended learning environments are designed, implemented, and studied. This approach not only responds to the identified complexities but also suggests a pathway for future research and practice that is more aligned with the real-world experiences of learners in digitally enhanced educational settings.

6. CONCLUSIONS

This study has significantly enhanced our understanding of middle school students’ attitudes towards blended learning by developing and validating a comprehensive attitude scale. The findings reveal the complexity of student perceptions, encompassing both positive and negative attitudes, and emphasize the need for educational strategies that address these diverse views. The research contributes to both theoretical and practical aspects of blended learning, offering detailed insights into how such educational methods impact student attitudes and suggesting ways to improve learning outcomes. The integration of educational psychology and instructional design principles provides a robust framework for future educational interventions and supports the ongoing evolution of blended learning practices. The study’s implications for educational policy and practice are clear: tailored educational interventions must consider both the psychological and pedagogical aspects of student learning. This includes professional development for teachers, effective feedback mechanisms, and psychological support for students in navigating the challenges of blended learning environments.

6.1. Limitations and future lines of research

This study provides valuable insights into middle school students’ attitudes towards blended learning, but it has certain limitations that future research should address. One major limitation is its reliance on a single educational context, which may not represent all middle school environments or student populations. Future studies should broaden the geographical scope and include diverse educational settings to see if findings are consistent across different cultures and systems. The study’s cross-sectional design captures attitudes at a specific point in time but does not account for how these attitudes might change as students and educational technologies evolve. Longitudinal studies could offer a more dynamic understanding of how attitudes towards blended learning develop over time, especially as students become more familiar with these practices. Potential bias in self-reported data is another limitation, as such data can sometimes reflect aspirational attitudes or be influenced by social desirability bias. Future research should use a mix of qualitative and quantitative methods, such as interviews or observations, to gain a deeper and more nuanced understanding of student attitudes and the factors influencing them. This study focused mainly on the cognitive and affective dimensions of student attitudes, without delving deeply into the behavioral aspect—how students actually engage with blended learning environments. Future research should explore this dimension to provide a comprehensive view of how attitudes align with actual behavior in blended learning contexts. Additionally, while the study identified key factors influencing student attitudes, it did not extensively examine the role of individual differences such as personal motivation, learning styles, and prior technological experience. These factors could significantly affect how students perceive and interact with blended learning environments. 
Future research should consider these personal attributes to tailor educational strategies that are not only effective but also personalized to meet the unique needs of each student.

7. FUNDING

This work was supported by Manisa Celal Bayar University Scientific Research Project (Grant number 2018-216).

8. REFERENCES

Abdi, H. (2003). Factor rotations in factor analyses. In M. Lewis-Beck, A. Bryman, & T. Futing (Eds.), Encyclopedia for Research Methods for the Social Sciences (pp. 792-795). Sage.

Ateş, H. (2024). Designing a self-regulated flipped learning approach to promote students’ science learning performance. Educational Technology & Society, 27 (1), 65-83.

Akkoyunlu, B., & Yılmaz-Soylu, M. (2008). Development of a scale on learners’ views on blended learning and its implementation process. The Internet and Higher Education, 11 (1), 26-32.

Akram, M., Iqbal, M. W., Ashraf, M. U., Arif, E., Alsubhi, K., & Aljahdali, H. M. (2023). Optimization of Interactive Videos Empowered the Experience of Learning Management System. Computer Systems Science & Engineering, 46 (1), 1021-1038. https://doi.org/10.32604/csse.2023.034085

Al-Maroof, R., Al-Qaysi, N., Salloum, S. A., & Al-Emran, M. (2022). Blended learning acceptance: A systematic review of information systems models. Technology, Knowledge and Learning, 1-36. https://doi.org/10.1007/s10758-021-09519-0

Armellini, A., Teixeira Antunes, V., & Howe, R. (2021). Student perspectives on learning experiences in a higher education active blended learning context. TechTrends, 65 (4), 433-443. https://doi.org/10.1007/s11528-021-00593-w

Ashraf, M. A., Yang, M., Zhang, Y., Denden, M., Tlili, A., Liu, J., Huang, R., & Burgos, D. (2021). A systematic review of systematic reviews on blended learning: trends, gaps and future directions. Psychology Research and Behavior Management, 14, 1525-1541. https://doi.org/10.2147/PRBM.S331741

Ateş, H., & Garzon, J. (2022). Drivers of teachers’ intentions to use mobile applications to teach science. Education and Information Technologies, 27 (2), 2521-2542. https://doi.org/10.1007/s10639-021-10671-4

Ateş, H., & Garzon, J. (2023). An integrated model for examining teachers’ intentions to use augmented reality in science courses. Education and Information Technologies, 28, 1299-1321. https://doi.org/10.1007/s10639-022-11239-6

Banihashem, S. K., Noroozi, O., den Brok, P., Biemans, H. J., & Kerman, N. T. (2023). Modeling teachers’ and students’ attitudes, emotions, and perceptions in blended education: Towards post-pandemic education. The International Journal of Management Education, 21 (2), 100803. https://doi.org/10.1016/j.ijme.2023.100803

Bartholomew, D. J., Knott, M., & Moustaki, I. (2011). Latent variable models and factor analysis: A unified approach. John Wiley & Sons.

Baumgartner, H., & Homburg, C. (1996). Applications of structural equation modeling in marketing and consumer research: A review. International Journal of Research in Marketing, 13 (2), 139-161. https://doi.org/10.1016/0167-8116(95)00038-0

Bentler, P. M. (1980). Multivariate analysis with latent variables: Causal modeling. Annual Review of Psychology, 31 (1), 419-456. https://doi.org/10.1146/annurev.ps.31.020180.002223

Bervell, B., Umar, I. N., Kumar, J. A., Asante Somuah, B., & Arkorful, V. (2021). Blended learning acceptance scale (BLAS) in distance higher education: toward an initial development and validation. Sage Open, 11 (3), 21582440211040073. https://doi.org/10.1177/21582440211040073

Bhagat, K. K., Cheng, C. H., Koneru, I., Fook, F. S., & Chang, C. Y. (2023). Students’ blended learning course experience scale (BLCES): Development and validation. Interactive Learning Environments, 31 (6), 3971-3981. https://doi.org/10.1080/10494820.2021.1946566

Bizami, N. A., Tasir, Z., & Kew, S. N. (2023). Innovative pedagogical principles and technological tools capabilities for immersive blended learning: a systematic literature review. Education and Information Technologies, 28 (2), 1373-1425. https://doi.org/10.1007/s10639-022-11243-w

Blau, I., & Hameiri, M. (2017). Ubiquitous mobile educational data management by teachers, students and parents: Does technology change school-family communication and parental involvement? Education and Information Technologies, 22, 1231-1247. https://doi.org/10.1007/s10639-016-9487-8

Bouilheres, F., Le, L. T. V. H., McDonald, S., Nkhoma, C., & Jandug-Montera, L. (2020). Defining student learning experience through blended learning. Education and Information Technologies, 25 (4), 3049-3069. https://doi.org/10.1007/s10639-020-10100-y

Cao, W. (2023). A meta-analysis of effects of blended learning on performance, attitude, achievement, and engagement across different countries. Frontiers in Psychology, 14, 1212056. https://doi.org/10.3389/fpsyg.2023.1212056

Chaw, L. Y., & Tang, C. M. (2023). Exploring the role of learner characteristics in learners’ learning environment preferences. International Journal of Educational Management, 37 (1), 37-54. https://doi.org/10.1108/IJEM-05-2022-0205

Chiu, T. K. (2021). Digital support for student engagement in blended learning based on self-determination theory. Computers in Human Behavior, 124, 106909. https://doi.org/10.1016/j.chb.2021.106909

Cigdem, H., & Oncu, S. (2024). Understanding the Role of Self-Regulated Learning in academic success: a blended learning perspective in vocational education. Innoeduca: International Journal of Technology and Educational Innovation, 10 (1), 45-64. https://doi.org/10.24310/ijtei.101.2024.17432

Cohen, L., Manion, L., & Morrison, K. (2007). Research methods in education. New York: Routledge.

Çemçem, G. D., Korkmaz, Ö., & Kukul, V. (2024). Readiness of teachers for blended learning: A scale development study. Education and Information Technologies, 1-25. https://doi.org/10.1007/s10639-024-12777-x

Çobanoğlu, N., & Demir, S. (2023). Investigation of preschool the approaches of teachers towards inclusion, inclusion competencies and classroom management skills. International Online Journal of Education and Teaching (IOJET), 10 (3), 1868-1885.

Dakhi, O., Jama, J., & Irfan, D. (2020). Blended learning: a 21st century learning model at college. International Journal of Multi Science, 1 (08), 50-65.

Dangwal, K. L. (2017). Blended learning: An innovative approach. Universal Journal of Educational Research, 5 (1), 129-136. https://doi.org/10.13189/ujer.2017.050116

ElSayary, A. (2023). The impact of a professional upskilling training programme on developing teachers’ digital competence. Journal of Computer Assisted Learning, 39 (4), 1154-1166. https://doi.org/10.1111/jcal.12788

Fawns, T. (2022). An entangled pedagogy: Looking beyond the pedagogy—technology dichotomy. Postdigital Science and Education , 4 (3), 711-728. https://doi.org/10.1007/s42438-022-00302-7

Fisher, R., Perényi, A., & Birdthistle, N. (2021). The positive relationship between flipped and blended learning and student engagement, performance and satisfaction. Active Learning in Higher Education, 22 (2), 97-113. https://doi.org/10.1177/1469787418801702

Han, F., & Ellis, R. A. (2020). Initial development and validation of the perceptions of the blended learning environment questionnaire. Journal of Psychoeducational Assessment, 38 (2), 168-181. https://doi.org/10.1177/0734282919834091

Hu, L. T., & Bentler, P. M. (1995). Evaluating model fit. In R. H. Hoyle (Ed.), Structural Equation Modeling: Concepts, Issues, and Applications (pp. 76-99). Sage.

Hu, L. T., & Bentler, P. M. (1999). Cutoff Criteria for Fit Indexes in Covariance Structure Analysis: Conventional Criteria versus New Alternatives. Structural Equation Modeling, 6 (1), 1-55. https://doi.org/10.1080/10705519909540118

Khlaif, Z. N., Sanmugam, M., Joma, A. I., Odeh, A., & Barham, K. (2023). Factors influencing teacher’s technostress experienced in using emerging technology: A qualitative study. Technology, Knowledge and Learning, 28 (2), 865-899. https://doi.org/10.1007/s10758-022-09607-9

Kikalishvili, S. (2023). Unlocking the potential of GPT-3 in education: Opportunities, limitations, and recommendations for effective integration. Interactive Learning Environments, 1-13. https://doi.org/10.1080/10494820.2023.2220401

Kilag, O. K., Obaner, E., Vidal, E., Castañares, J., Dumdum, J. N., & Hermosa, T. J. (2023). Optimizing Education: Building Blended Learning Curricula with LMS. Excellencia: International Multi-disciplinary Journal of Education (2994-9521), 1 (4), 238-250.

Kline, P. (2014). An easy guide to factor analysis. Routledge.

Kline, R. B. (2023). Principles and practice of structural equation modeling (5th edition). Guilford Press.

Kline, T. (2005). Psychological testing: A practical approach to design and evaluation. Sage.

Lazar, I. M., Panisoara, G., & Panisoara, I. O. (2020). Digital technology adoption scale in the blended learning context in higher education: Development, validation and testing of a specific tool. PLoS ONE, 15 (7), e0235957. https://doi.org/10.1371/journal.pone.0235957

López-Pellisa, T., Rotger, N., & Rodríguez-Gallego, F. (2021). Collaborative writing at work: Peer feedback in a blended learning environment. Education and Information Technologies, 26 (1), 1293-1310. https://doi.org/10.1007/s10639-020-10312-2

Niu, Y., Xi, H., Liu, J., Sui, X., Li, F., Xu, H., ... & Guo, L. (2023). Effects of blended learning on undergraduate nursing students’ knowledge, skill, critical thinking ability and mental health: a systematic review and meta-analysis. Nurse Education in Practice, 103786. https://doi.org/10.1016/j.nepr.2023.103786

Ohanu, I. B., Shodipe, T. O., Ohanu, C. M., & Anene-Okeakwa, J. E. (2023). System quality, technology acceptance model and theory of planned behaviour models: Agents for adopting blended learning tools. E-Learning and Digital Media, 20 (3), 255-281. https://doi.org/10.1177/20427530221108031

Olpak, Y. Z., & Ateş, H. (2018). Pre-Service science teachers’ perceptions toward additional instructional strategies in biology laboratory applications: Blended learning. Science Education International, 29 (2), 88-95. https://doi.org/10.33828/sei.v29.i2.3

Peng, Y., Wang, Y., & Hu, J. (2023). Examining ICT attitudes, use and support in blended learning settings for students’ reading performance: Approaches of artificial intelligence and multilevel model. Computers & Education, 203, 104846. https://doi.org/10.1016/j.compedu.2023.104846

Singh, J., Steele, K., & Singh, L. (2021). Combining the best of online and face-to-face learning: Hybrid and blended learning approach for COVID-19, post vaccine, & post-pandemic world. Journal of Educational Technology Systems, 50 (2), 140-171. https://doi.org/10.1177/00472395211047865

Smith, K., & Hill, J. (2018). Defining the nature of blended learning through its depiction in current research. Higher Education Research and Development, 38 (2), 383-397. https://doi.org/10.1080/07294360.2018.1517732

Stec, M., Smith, C., & Jacox, E. (2020). Technology enhanced teaching and learning: Exploration of faculty adaptation to iPad delivered curriculum. Technology, Knowledge and Learning, 25 (3), 651-665. https://doi.org/10.1007/s10758-019-09401-0

Tabachnick, B. G., & Fidell, L. S. (2001). Using Multivariate Statistics (4th edition). MA: Allyn & Bacon, Inc.

Tavşancıl, E. (2006). Measurement of attitudes and data analysis with SPSS (3rd edition). Nobel Publishing.

Tzafilkou, K., Perifanou, M., & Economides, A. A. (2021). Development and validation of a students’ remote learning attitude scale (RLAS) in higher education. Education and Information Technologies, 26 (6), 7279-7305. https://doi.org/10.1007/s10639-021-10586-0

Weng, C. H., & Tang, Y. (2014). The relationship between technology leadership strategies and effectiveness of school administration: An empirical study. Computers & Education, 76, 91-107. https://doi.org/10.1016/j.compedu.2014.03.010

Yu, Z., Xu, W., & Sukjairungwattana, P. (2022). Meta-analyses of differences in blended and traditional learning outcomes and students’ attitudes. Frontiers in Psychology, 13, 926947. https://doi.org/10.3389/fpsyg.2022.926947

Zhang, Z., Cao, T., Shu, J., & Liu, H. (2020). Identifying key factors affecting college students’ adoption of the e-learning system in mandatory blended learning environments. Interactive Learning Environments, 30 (8), 1388-1401. https://doi.org/10.1080/10494820.2020.1723113

APPENDIX: The developed scale

| Item No | STATEMENTS | Strongly agree | Agree | Undecided | Disagree | Strongly disagree |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | I think the lesson taught with the blended learning methods is useful. | | | | | |
| 2 | I have difficulty in understanding the lesson taught with the blended learning methods. | | | | | |
| 3 | I greatly enjoy studying the lesson with the blended learning methods. | | | | | |
| 4 | I can not wait to go to the lesson taught with the blended learning methods. | | | | | |
| 5 | I am afraid of failing the lesson taught with the blended learning methods. | | | | | |
| 6 | Teaching the lesson with the blended learning methods allows me to learn faster. | | | | | |
| 7 | I think that the lesson taught with the blended learning methods is understandable. | | | | | |
| 8 | I think the lesson taught with the blended learning methods is fun. | | | | | |
| 9 | I find the teaching of the lesson with the blended learning methods boring. | | | | | |
| 10 | I like to share the information I learned in the lesson taught with the blended learning methods with others. | | | | | |
| 11 | I like the lesson taught with the blended learning methods. | | | | | |
| 12 | My desire to learn increases in the lesson taught with the blended learning methods. | | | | | |
| 13 | I get restless when the lesson taught with the blended learning methods. | | | | | |
| 14 | Teaching the lesson with the blended learning methods reduces my interest in the lesson. | | | | | |
| 15 | I do not notice time passing in the lesson taught with the blended learning methods. | | | | | |
| 16 | Teaching the lesson with blended learning methods encourages me to do research. | | | | | |
| 17 | I do not think that the blended learning methods is suitable for other lessons. | | | | | |
| 18 | I find it difficult to communicate with my friends in the lesson taught with the blended learning methods. | | | | | |
| 19 | I do not like that the lesson is taught by BL. | | | | | |
| 20 | The lesson taught with the blended learning methods allows me to demonstrate my own ability. | | | | | |
| 21 | I am irritated that the lesson is taught with the blended learning methods. | | | | | |
| 22 | I think I got the best out of the lesson taught with the blended learning methods. | | | | | |
| 23 | I think that the information I learned in the lesson taught with the blended learning methods will last permanently. | | | | | |
| 24 | I find it difficult to follow the lesson taught with the blended learning methods. | | | | | |
| 25 | Teaching the lesson with blended learning methods increases my creativity. | | | | | |
| 26 | My self-confidence increases in the lesson taught with the blended learning methods. | | | | | |
| 27 | I am afraid of making mistakes in the lesson taught with the blended learning methods. | | | | | |
| 28 | Teaching the lesson with blended learning methods increases my motivation. | | | | | |
| 29 | My interest increases in the lesson taught with the blended learning methods. | | | | | |
| 30 | I am not motivated in the lesson taught with the blended learning methods. | | | | | |
| 31 | I think I will be successful in the lesson taught with the blended learning methods. | | | | | |
| 32 | I do not think that the lesson taught with the blended learning methods is useful. | | | | | |
| 33 | I think that the lesson taught with the blended learning methods is a waste of time. | | | | | |
| 34 | I think that the lesson taught with the blended learning methods helped me develop socially. | | | | | |
| 35 | I think I will get better grades in the lesson taught with the blended learning methods. | | | | | |
| 36 | The lesson taught with the blended learning methods increases my curiosity. | | | | | |
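
A possible way to turn responses on this scale into scores is sketched below. The item-to-factor assignment is copied from Table 7, but everything else is an assumption made for illustration: the numeric coding (5 = "Strongly agree" down to 1 = "Strongly disagree"), the optional reverse-coding of Factor 2 items in the total, and the names `FACTOR_1`, `FACTOR_2`, and `score_scale`. The scoring procedure actually used in the study may differ.

```python
# Item-to-factor assignment copied from Table 7.
FACTOR_1 = [1, 3, 4, 6, 7, 8, 10, 11, 12, 15, 16, 20,
            22, 23, 25, 26, 28, 29, 31, 34, 35, 36]
FACTOR_2 = [2, 5, 9, 13, 14, 17, 18, 19, 21, 24, 27, 30, 32, 33]

# ASSUMPTION: 5 = "Strongly agree" ... 1 = "Strongly disagree".
MAX_CODE, MIN_CODE = 5, 1


def score_scale(responses, reverse_negative=True):
    """Score one respondent; `responses` maps item number (1-36) to a code 1-5.

    Returns raw sums for both factors and a total. With reverse_negative=True
    (an assumed convention), Factor 2 items are reverse-coded in the total.
    """
    missing = [item for item in FACTOR_1 + FACTOR_2 if item not in responses]
    if missing:
        raise ValueError(f"missing responses for items: {missing}")
    factor_1 = sum(responses[item] for item in FACTOR_1)
    factor_2 = sum(responses[item] for item in FACTOR_2)
    if reverse_negative:
        reversed_f2 = sum(MAX_CODE + MIN_CODE - responses[item] for item in FACTOR_2)
        total = factor_1 + reversed_f2
    else:
        total = factor_1 + factor_2
    return {"factor_1": factor_1, "factor_2": factor_2, "total": total}


# Hypothetical respondent: strongly agrees with every Factor 1 item and
# strongly disagrees with every Factor 2 item.
example = {item: 5 for item in FACTOR_1}
example.update({item: 1 for item in FACTOR_2})
print(score_scale(example))
```

Keeping the raw factor sums separate from the total makes it possible to report both sub-dimensions alongside a single overall attitude score, whichever total-score convention is adopted.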