of Technology and Educational Innovation

Vol. 10. No. 1. June 2024 - pp. 144-165 - ISSN: 2444-2925

DOI: https://doi.org/10.24310/ijtei.101.2024.17813

Higher Tertiary Education Perspectives: Evaluating the Electronic Assessment Techniques of the Blackboard Platform for Fairness and Reliability

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International license.

1. INTRODUCTION

Amidst a backdrop of rapidly evolving technological paradigms, the global educational milieu has witnessed a transformative reorientation. In order to optimize processes and improve the quality of their offerings, academic institutions have increasingly anchored their operations in digital innovations. The COVID-19 pandemic accelerated this technological trajectory, which was already in motion. The crisis rendered conventional educational architectures insufficient, necessitating a rapid transition to virtual pedagogic platforms (Ahmed et al., 2023; Iqbal et al., 2022). Previously viewed as a supplement to traditional education, e-learning has become crucial in maintaining the continuity of academic pursuits (Therisa Beena, & Sony, 2022).

Electronic assessment is a central component of this digital education revolution. E-learning solutions, exemplified by platforms like the Blackboard system, have assumed a central role in assessing student aptitude and performance (Hezam, & Mahyoub, 2022). Nonetheless, despite these digital tools’ unrivalled flexibility and convenience, there has been an explosion of discussion regarding their efficacy, dependability, and objectivity. How do the primary stakeholders, the students, interpret and place their trust in these digital assessment techniques?

The significance of this study is substantial. The trajectory of contemporary education depends on the symbiotic convergence of technology and pedagogical principles. Should electronic assessment mechanisms, which are fundamental to this convergence, be perceived as compromised or biased, the structural integrity of contemporary pedagogical paradigms could be jeopardized. In recognition of this significance, the present study seeks to examine the dependability and impartiality of electronic assessment modalities, with a particular emphasis on the Blackboard ecosystem, through the lens of its most affected demographic: students.

This study aims to elucidate university students’ perceptions regarding electronic assessment techniques within the Blackboard Learning Management System. This study is intended to focus on broader concerns regarding the veracity and fairness of such digital evaluation techniques in a constantly evolving educational environment. Given this, the subsequent research questions are scrutinized:

- How do students perceive the electronic assessment techniques employed by faculty within the Blackboard platform at tertiary institutions?

- What is the level of student receptivity towards the electronic assessment approaches utilized within the Blackboard platform?

- What are students’ views on the fairness and reliability of the electronic assessment strategies facilitated through the Blackboard platform?

- How do variables such as gender and academic discipline influence students’ perceptions of the reliability and fairness of electronic evaluations conducted within the Blackboard system?

2. LITERATURE REVIEW

2.1. Evolution of E-learning and Digital Pedagogy

The digital era brought about profound changes in numerous fields, but perhaps none more so than in education. Late in the 20th century, primitive computer-based training systems laid the groundwork for what we now refer to as ‘e-learning’ (Martin, & Bolliger, 2018; Padilla-Hernández et al., 2019). Although limited in scope and interactivity, these early systems departed from traditional educational methods by providing self-paced learning modules primarily utilizing digital technology as a content delivery mechanism (Agung et al., 2020). As the years progressed, the convergence of advancing technologies, particularly the introduction of the ‘World Wide Web’, propelled e-learning from these fundamental computer-mediated instructions to the dynamic, immersive, and collaborative virtual classrooms we are familiar with today (Al-khresheh, 2022a; Azizan et al., 2020). This shift did not merely reflect technological advancement; it also highlighted a complex interplay between pedagogical innovations and technological affordances, constantly reshaping digital education’s contours (Gonzales Tito et al., 2023; Meirbekov et al., 2022). Initially, e-learning was predominantly tied to specific locations, such as computer laboratories, where students interacted with static content, and the emphasis was primarily placed on self-directed, individualized learning. Modern e-learning environments, by contrast, are characterized by their emphasis on collaboration (Munir et al., 2022). With the advent of the Internet in the late 1990s and early 2000s, e-learning began to realize its full potential. ‘Web-based platforms’ enabled dynamic content delivery, interactive multimedia, and, most significantly, real-time communication between students and instructors (Alenezi, 2023).

With the widespread adoption of Learning Management Systems (LMS), e-learning underwent a period of transformation. At the turn of the 21st century, platforms such as Moodle, Canvas, and Blackboard rose to prominence, presenting themselves as unified centres that combined curriculum design, content dissemination, student evaluation, and collaboration facilitation (Veluvali, & Surisetti, 2021). Among them, Blackboard, distinguished by its user-friendly interface and robust structure, has solidified its position as a top-tier option for educational institutions, demonstrating its adaptability to various educational strategies (Al-khresheh, 2021; Almoeather, 2020). However, these platforms’ effects extended beyond the confines of content organization. They played a pivotal role in making education more accessible, removing geographical barriers, and ensuring all learners had access to high-quality content (Saadati et al., 2023). In addition, LMSs introduced a novel level of personalized learning experiences. Informed by data-driven insights, academic institutions began designing courses that resonated with the profiles of individual students, departing from the historically prevalent generic pedagogical models (Guoyan et al., 2023). This paradigm shift, ushered in by the capabilities of LMSs, exemplified the promise of technology: a force capable of not only replicating but also amplifying and redefining conventional educational pathways.

Alongside the rapid advances in technological innovation, educational theories and pedagogical practices evolved to exploit the full potential of these digital platforms. The constructivist and connectivist educational paradigms stood out among these. Constructivism, which is rooted in the notion that learning is an active, constructive process, emphasizes the role of learners as the primary architects of their knowledge (Bizami et al., 2022; Ratten, 2023). Similarly, connectivism, introduced in the digital era, asserts that learning occurs within networks, advocating for technology integration and acknowledging the significance of social and cultural contexts in knowledge acquisition (Dziubaniuk et al., 2023). These theories highlighted the significance of learner autonomy, peer collaboration, and the establishment of dynamic knowledge ecosystems, particularly in the digital domain (Mampota et al., 2023). This pedagogical transformation was crucial. It ensured that emerging technological tools and platforms did not simply imitate traditional teaching paradigms. Instead, they ushered in a new era of education that emphasized a holistic, interconnected, and student-centred learning environment. By combining technology and these evolved teaching philosophies, a more engaging and responsive educational environment was created that was better suited to the requirements and opportunities of the digital age.

The landscape of digital education has witnessed a paradigmatic shift with the emergence of m-learning (mobile learning), a natural progression from e-learning spurred by the ubiquity of mobile technologies and pervasive Internet connectivity. This transition has revolutionized the educational sphere, placing learning resources directly at students’ fingertips, and effectively dissolving the traditional constraints of geography and time. Such a transformation necessitates learning methodologies that are not only flexible and adaptable but also aligned with the dynamic digital context (Alenezi, 2023; Onyekwere, & Enamul Hoque, 2023).

However, alongside the myriad advantages of e-learning, it also introduces complex challenges, particularly in assessing learner performance within these digital realms. The diversification in content delivery modes, coupled with the trend towards individualized learning trajectories, has highlighted the limitations and inadequacies of conventional assessment methods. These traditional approaches often fail to address the unique needs and goals of e-learning environments, prompting a reevaluation of assessment strategies (Davidova, 2023). The expansion of e-learning platforms has, in turn, amplified the demand for innovative assessment methodologies. These methodologies need to be dependable and fair and tailored to the nuances of digital learning environments. Such assessment strategies are pivotal in validating the effectiveness of digital pedagogy and have garnered significant attention in recent scholarly discourse. The subsequent section aims to explore this critical area, scrutinizing the evolution, current challenges, and prospective advancements in electronic assessment techniques within the e-learning ecosystem.

2.2. Electronic Assessment in E-Learning: Advancements and Challenges

Electronic assessment, also known as e-assessment, has become integral to modern e-learning, reshaping how institutions evaluate students’ comprehension, skills, and competencies. Both technological capabilities and changing pedagogical approaches have contributed to this transformation.

The digital revolution of the past two decades has been instrumental in advancing and diversifying e-assessment methods. The voyage commenced with digitizing traditional paper-based tests, such as multiple-choice quizzes, transforming them into platforms that provide immediate feedback and automated grading (Bender, 2023). In the wake of technological advancements, electronic assessments have evolved in complexity. The introduction of adaptive testing systems allows for questions to be adjusted dynamically based on a student’s real-time performance, fostering a more tailored assessment experience (Malik et al., 2019). Moreover, incorporating artificial intelligence (AI) into these systems has ushered in an era of timely and detailed feedback, guiding students to areas for improvement while reinforcing their understanding of well-understood topics (Huang et al., 2021).

Incorporating multimedia elements into e-assessments has markedly broadened their scope and application. Integrating video resources, sophisticated simulations, and immersive virtual reality environments is increasingly becoming a staple in modern assessment methodologies (AL-Qadri, & Zhao, 2021; Challa et al., 2010). These cutting-edge tools offer a replication of real-life scenarios, thereby providing a platform for students to exhibit not just their theoretical knowledge but also their practical skills, analytical prowess, and decision-making capabilities within complex, real-world contexts (Liu et al., 2020).

For example, in medical education, virtual surgery simulations have been instrumental in assessing the competencies of medical students, allowing them to navigate intricate surgical procedures in a controlled, risk-free environment (Lai, & Bower, 2019). Similarly, in architectural education, advanced 3D modelling tools enable aspiring architects to design and present their spatial concepts in detailed virtual environments. These immersive assessment tools not only evaluate the students’ technical skills but also their creativity and problem-solving abilities in a more holistic manner. Such advancements underscore the evolution of assessment strategies, moving beyond traditional pen-and-paper tests to encompass dynamic, interactive, and highly engaging evaluation methods.

Increasingly, e-assessments have incorporated universal design principles in line with the global effort to promote inclusivity in the educational domain. This commitment ensures that assessment platforms remain accessible to learners with physical, cognitive, and sensory disabilities (Kiryakova, 2021). Modern e-assessment tools now include resizable text, image descriptors, voice-over explanations, and compatibility with screen-reading technologies, ensuring each student receives a fair assessment experience (Tang et al., 2022). This shift towards inclusivity not only exemplifies the moral imperatives of today’s educational ethos but also highlights the capacity of technology to overcome traditional educational barriers (Al-Azawei et al., 2019).

Even though the road to wholly digitalized assessments has been paved with innovations, there have been obstacles. The issue of academic integrity ranks first among these concerns. The rise of remote e-assessments has brought to light issues such as plagiarism, student impersonation, and unauthorized assistance during online evaluations, raising concerns about the reliability of digital examination formats (Guangul et al., 2020). In addition, the persistent problem of the ‘digital divide’ adds complexity. Despite being adaptable and dynamic, e-assessments rely on dependable internet connections and functional devices. Access to these technological amenities nonetheless remains inequitable, potentially generating disparities in student experiences and outcomes (Al-Maqbali & Raha Hussain, 2022; Kashyap et al., 2021).

Educational assessment is undergoing a significant transformation with AI and machine learning innovations. This notable shift is acutely observed in educational digital platforms, such as Blackboard, which progressively integrate AI-enhanced tools to innovate traditional assessment methods (Al-khresheh, 2021). The ability of AI to process and analyze extensive data sets facilitates the creation of tailored and adaptive assessment frameworks, thus accommodating diverse learning trajectories (Guangul et al., 2020). Furthermore, AI-powered algorithms are pivotal in streamlining the grading process, delivering immediate feedback to learners, and pinpointing improvement areas (Kiryakova, 2021). Despite these advancements, the employment of AI in assessments brings crucial ethical concerns and questions of fairness to the fore. Issues such as the risk of algorithmic bias, the safeguarding of data privacy, and the imperative for human intervention in AI-driven assessments are central themes in recent academic discourse (Fauzani et al., 2021).

The shift towards digital assessments represents both an opportunity and a challenge for teachers. While e-assessments provide opportunities for innovative teaching and testing methods, they also require teachers to be proficient in both the nuances of pedagogy and the technicalities of digital platforms (Fauzani et al., 2021; Rajesh, & Sethuraman, 2020). Such multifaceted knowledge necessitates consistent training and skill development, highlighting the intertwined nature of pedagogical insight and technological proficiency in the modern education sector (Garg, & Goel, 2022). In addition, as institutions navigate this digital transition, it is imperative that the fundamental principles of assessment, such as impartiality, transparency, and validity, remain intact, emphasising the delicate balance that must be achieved in the digital age of education.

In conclusion, electronic assessment in e-learning offers numerous advancements that align with contemporary pedagogical approaches and provide greater flexibility and adaptability. Nonetheless, educational institutions must confront inherent challenges. As we transition to investigating the role of LMSs such as Blackboard in e-assessment, these broader trends and challenges provide the context for a more in-depth examination of student perceptions and experiences within these platforms.

2.3. Student Perceptions and Experiences with E-learning Platforms: A Focus on Blackboard

The world of e-assessments, catalyzed by technological advancements, hinges significantly on the acceptance and trust of its primary stakeholders: students. Their perceptions and comfort levels are crucial in determining the durability and success of these evaluation instruments. The quality and immediacy of feedback from digital platforms have contributed significantly to these perceptions. The ability of educational systems to provide immediate feedback has always been a significant factor in their popularity. The research of Habib et al. (2020) confirms this, demonstrating how real-time feedback not only accelerates the understanding of concepts but also revitalizes student motivation and enhances confidence. Based on the principles of formative assessment, this immediate feedback creates an interactive cycle that enables students to identify their strengths and improvement areas quickly. Enriched by technological advancements, the breadth and quality of this feedback provide students with a comprehensive analysis of their performance, frequently coupled with additional resources to clarify concepts further (Rakha, 2023). Nonetheless, this digital boon comes with a caveat. The speed must be accompanied by pedagogical rigour. If a swift response is devoid of educational content, it can inadvertently result in student misunderstandings and a decline in motivation (Haleem et al., 2022).

Despite these technological marvels, e-assessments are not without their detractors. The impersonal nature of digital evaluations is one of the student concerns most frequently voiced. As articulated by Al-Maqbali and Raha Hussain (2022), while algorithms may evaluate responses quickly, they may overlook the depth and nuances that a human assessor would capture. This is amplified in high-stakes exams, where the inflexibility of digital systems and the fear of potential technical glitches can substantially heighten student anxiety (Aburumman, 2021; Fauzani et al., 2021; Marevci, & Salihu, 2023). As we transition to the topic of e-assessment impartiality, there is an inevitable overlap with potential biases. The underlying assumption, that every student is on an equal digital footing, often fails to account for the realities of diverse socioeconomic backgrounds, technological literacy levels, and infrastructural disparities (García-Morales et al., 2021; Hosseini et al., 2021). Addressing these disparities requires recognizing and acting on the diverse requirements of students, particularly those with disabilities (Dahlstrom-Hakki et al., 2020).

The rise of Blackboard as a leading platform in e-assessment has catalyzed a plethora of scholarly investigations, delving into its multifaceted characteristics and implications in digital education (Baron, 2023). Over recent years, the academic focus has increasingly turned towards evaluating Blackboard’s user experience, seamless integration capabilities, and adaptability, especially in the rapidly changing landscape of online education. This scrutiny has become particularly pertinent in the wake of global crises, such as the COVID-19 pandemic, which have necessitated a swift and comprehensive shift to digital learning modalities (Alam et al., 2023; Alblaihed, 2023; Al-khresheh, 2022a; Rakha, 2023).

Significant research has explored how Blackboard facilitates a user-friendly interface that enhances teaching and learning experiences. Studies have emphasized its role in simplifying the transition to online platforms for educators and students, mitigating potential disruptions in educational continuity (Alam et al., 2023). Moreover, Blackboard’s capacity for integrating various digital tools and resources has been a subject of considerable interest, illustrating its effectiveness in creating a cohesive and interactive learning environment (Al-khresheh, 2022a). The platform’s adaptability, particularly in rapidly evolving scenarios like the pandemic, has also been extensively examined. Researchers have noted how Blackboard has evolved to meet educational institutions’ diverse and changing needs, ensuring uninterrupted learning processes and facilitating the implementation of innovative pedagogical strategies (Alblaihed, 2023; Rakha, 2023).

Nonetheless, a nuanced and largely unexplored area of interest remains beneath the breadth of general assessments. Specifically, while numerous studies have cast light on the overall user experience with Blackboard (Almufarreh et al., 2021; AlTameemy et al., 2020; Alyadumi & Falcioglu, 2023; Baig et al., 2020), little attention has been paid to student perceptions of the platform’s e-assessment techniques, particularly regarding their fairness and dependability. Although broad feedback may indicate overall platform contentment, it is essential to dig deeper. Students may have reservations about certain e-assessment features, questioning whether these features accurately reflect their competencies and reliably assess their knowledge. Some preliminary research, such as the insights provided by Tseng (2020), has hinted at these complexities, suggesting that students’ perceptions may vary based on demographic or technological factors. However, these preliminary analyses leave vast areas of this topic largely unexplored. As academic institutions rely more and more on platforms like Blackboard for comprehensive assessment, it becomes crucial to understand these complex student perspectives. Beyond its academic ramifications, such understanding is essential to ensuring that the evolution of digital education remains equitable and comprehensive. This noticeable research gap necessitates a more in-depth and targeted investigation into the nuances of student experiences and students’ evaluations of e-assessment techniques on platforms such as Blackboard.

3. MATERIAL AND METHOD

3.1. Research design

The study employs a descriptive quantitative design that has been carefully chosen for its capacity to capture and quantify the depth and breadth of students’ perspectives on the electronic assessment techniques of the Blackboard platform, particularly concerning impartiality and reliability. This method utilizes the power of numerical data to provide precise, quantifiable insights, thereby assuring both precision and clarity (Slattery et al., 2011). When dealing with larger sample sizes, such as the 400 undergraduates in this study, the richness of this design is further accentuated, as it provides statistical robustness and meaningful insights across a diverse student population. The non-interventionist nature of this design is one of its chief advantages. Not manipulating variables guarantees genuine, unadulterated responses that accurately reflect the respondents’ sentiments. This guarantees the integrity of the collected data, rendering it a representative snapshot of prevalent perceptions and attitudes, thereby enhancing the overall dependability and credibility of the research.

3.2. Participants

The study involved a diverse group of 400 undergraduate university students, selected using a random sampling technique to ensure a representative sample of the entire student body. The gender distribution was reasonably balanced, with 215 females representing 53.8% of the sample and 185 males representing 46.3%. In addition, the participants’ academic backgrounds were almost evenly distributed between the two main faculties. Students from scientific faculties, which typically emphasize systematic and data-driven learning approaches, accounted for 48% of the sample (192 participants). Students from humanistic faculties, known for cultivating interpretive and critical thinking skills, comprised a slight majority of 52% (208 participants). This balanced academic and gender distribution supports a comprehensive reflection of diverse student perspectives, thereby enhancing the validity of the research outcomes. Table 1 below displays their characteristics:

TABLE 1. Characteristics of the study participants.

| Variables | Category | Frequency | Percentage |
|---|---|---|---|
| Gender | Female | 215 | 53.8% |
| | Male | 185 | 46.3% |
| Faculty | Scientific | 192 | 48.0% |
| | Humanistic | 208 | 52.0% |

3.3. Instrumentation

Questionnaires stand as one of the quintessential tools in research, especially when delving into perceptions, attitudes, and experiences (Ball, 2019). Their structured format and ability to reach a broad audience make them an invaluable asset for obtaining reliable and scalable data. In line with this rationale, a comprehensive questionnaire was crafted for this study to probe into the university students’ perceptions concerning the reliability and fairness of Blackboard’s electronic evaluation mechanisms.

Drawing inspiration from an exhaustive review of related literature and relevant studies (Almufarreh et al., 2021; AlTameemy et al., 2020; Baig et al., 2020), this instrument encompassed a total of 20 insightful items strategically delineated across three pivotal dimensions:

- Exploration of the electronic evaluation techniques via Blackboard that faculty members deploy, with responses anchored to a straightforward binary scale (yes or no). This dimension includes seven items.

- The degree of students’ receptivity towards these electronic assessment paradigms, measured through a five-point Likert scale from 5 (signifying strong agreement) to 1 (indicating strong disagreement). This dimension comprises seven items.

- In-depth assessment of students’ perceptions of the robustness (reliability) and equity (fairness) of the Blackboard’s electronic evaluations, solicited via a similarly structured five-point Likert scale. Six items are involved.

3.4. Data Collection and Analysis

Utilizing the availability and pervasiveness of online platforms, the survey was distributed via Google Forms. This platform was chosen due to its popularity among students and its streamlined design, which ensures usability and quick response acquisition. Moreover, for the academic year 2022-2023, it was essential to capture data digitally to maintain efficiency and ensure a broad reach.

After the data were collected, the analysis was conducted in SPSS version 26, a statistical package widely used in social research. Frequency and percentage analyses illuminated general trends, while arithmetic means and standard deviations described the central tendency and dispersion of the responses. A two-way ANOVA was then used to test for significant differences between categories, such as gender and faculty type. Using this layered analytic approach, the research intended to provide stakeholders with actionable insights regarding students’ perceptions of the Blackboard platform.
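The descriptive step of this analysis (frequencies, percentages, means, and standard deviations) can be illustrated with a minimal Python sketch. The study itself used SPSS; the response values and the helper `frequency_table` below are hypothetical and serve only to show the calculations, not to reproduce the study's data.

```python
from collections import Counter
from statistics import mean, stdev

# Hypothetical five-point Likert responses for a single item
# (illustrative values only, not taken from the study).
responses = [5, 4, 4, 3, 2, 4, 5, 3, 3, 4]


def frequency_table(values):
    """Return {score: (count, percentage)} for each observed score."""
    counts = Counter(values)
    total = len(values)
    return {
        score: (n, round(100 * n / total, 1))
        for score, n in sorted(counts.items())
    }


table = frequency_table(responses)
item_mean = mean(responses)
item_sd = stdev(responses)  # sample standard deviation (n - 1 denominator)

for score, (n, pct) in table.items():
    print(f"score {score}: n={n}, {pct}%")
print(f"mean={item_mean:.2f}, sd={item_sd:.2f}")
```

The sample standard deviation is used here on the assumption that the respondents are treated as a sample of a wider student population.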

3.5. Instrumentation Validity and Reliability

To ensure the instrument’s validity and resonance with academic standards, a panel of academicians specializing in education and psychology subjected the preliminary version of the questionnaire to rigorous scrutiny. Their invaluable feedback served as a linchpin for refinement. Items that garnered an agreement rate of 90% or more were retained, while others were fine-tuned or jettisoned based on the panel’s recommendations. After an exhaustive review, the initial questionnaire containing 23 items was refined to produce a final instrument consisting of 20 pertinent items.

Ensuring that the instrument was not just valid but also reliable was of paramount importance. An in-depth examination of its internal consistency was conducted by determining the correlation coefficients for each dimension. The values for the first dimension ranged between 0.57 and 0.79, the second dimension between 0.64 and 0.82, and the third dimension from 0.61 to 0.85. The overall correlation of the dimensions with the questionnaire’s total score further accentuated the instrument’s reliability, with values of 0.92, 0.88, and 0.93 for the first, second, and third dimensions, respectively. All correlation coefficients were significant at 0.001, indicating strong internal consistency and affirming the items’ alignment with their respective dimensions.
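The kind of item-to-dimension correlation described above can be sketched as follows. The paper does not state which coefficient was used; this sketch assumes Pearson's r, and both the function `pearson_r` and the scores are hypothetical illustrations.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long numeric sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squares of the deviations.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


# Hypothetical data: one item's scores and the total score of its
# dimension for five respondents (not the study's data).
item_scores = [1, 2, 3, 4, 5]
dimension_totals = [8, 11, 15, 18, 23]

r = pearson_r(item_scores, dimension_totals)
print(f"item-dimension correlation: {r:.2f}")
```

A high positive value indicates that respondents who score high on the item also tend to score high on the dimension as a whole, which is the sense in which the correlations above are read as evidence of internal consistency.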

To ascertain the factorial validity of the instrument, an exploratory factor analysis (EFA) was conducted using the Principal Components extraction method. An orthogonal Varimax rotation was applied to isolate the factors. Items with loadings (saturations) above 0.4 were assigned to the factor on which their post-rotation loading was highest. Several items loaded on more than one factor. The EFA delineated three distinct factors, collectively accounting for 20 items. The cumulative variance explained by these factors amounted to 59.94%. The first factor comprised seven items and had an eigenvalue of 4.20, explaining 25.51% of the total variance. The second factor comprised seven items, with an eigenvalue of 4.15, explaining 18.27% of the total variance. The third factor comprised six items, with an eigenvalue of 3.88, explaining 16.16% of the total variance.
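As a quick arithmetic check, the cumulative variance equals the sum of the three per-factor contributions reported above:

$$
25.51\% + 18.27\% + 16.16\% = 59.94\%
$$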

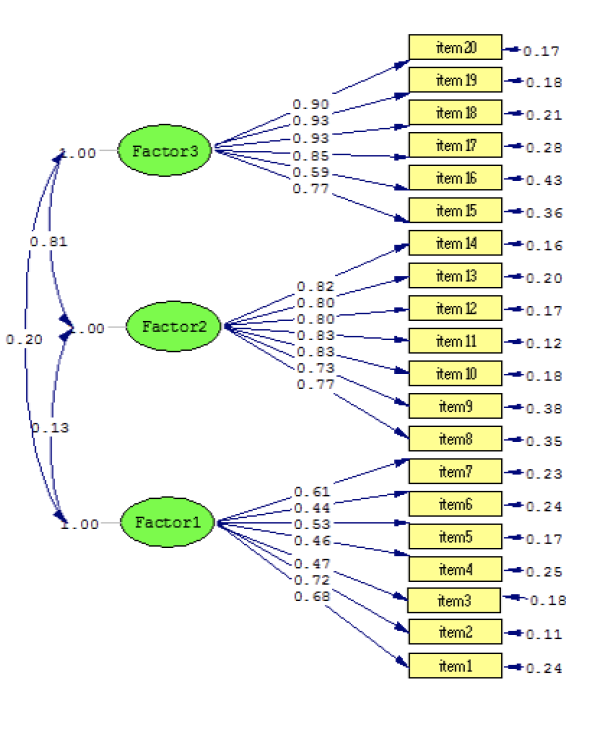

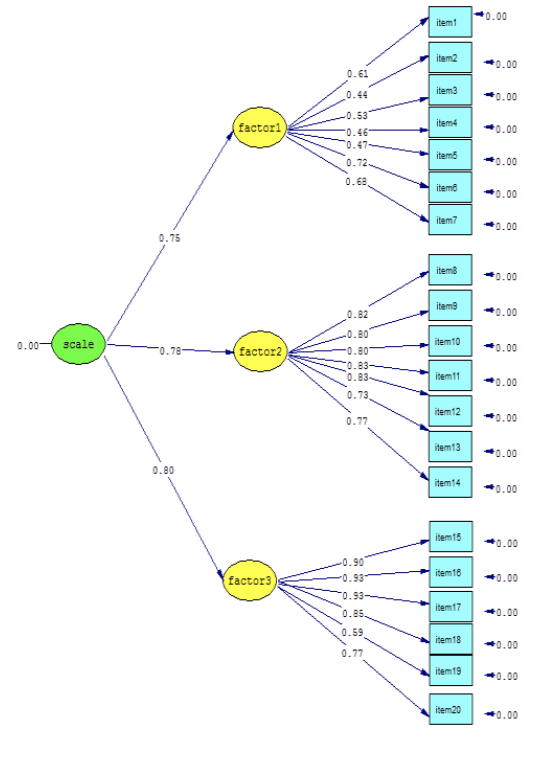

To rigorously assess the hypothesized item-factor loadings, a Confirmatory Factor Analysis (CFA) was executed employing the Maximum Likelihood Method, facilitated by the LISREL software suite. This analysis supported the three-factor structure of the instrument. The path coefficients for the items of the scale ranged between 0.46 and 0.93, all statistically significant at the P≤0.01 level. The chi-square (χ2) statistic was 618.43 with 149 degrees of freedom, significant at P≤0.001. This translates to a χ2/df ratio of 4.15, which lies below the commonly cited upper bound of 5 and indicates an acceptable fit of the conceptual model to the collected data. Table 2 enumerates the goodness-of-fit indices (CFI, GFI, NFI, PGFI, RMSEA), with each metric falling within its acceptable range. This corroborates the model’s alignment with the empirical dataset and attests to the instrument’s factorial integrity. Figure 1 provides a graphical representation of the scale’s confirmatory factor structure, whereas Figure 2 depicts the second-order confirmatory factor analysis of the instrument.
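The reported ratio follows directly from the chi-square value and its degrees of freedom:

$$
\frac{\chi^2}{df} = \frac{618.43}{149} \approx 4.15
$$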

TABLE 2. Goodness-of-Fit Indices for the Model.

| Model | χ2 (df), p-value | χ2/df | CFI | GFI | NFI | PGFI | RMSEA |
|---|---|---|---|---|---|---|---|
| CFA Model | 618.43 (149), p < 0.001 | 4.15 | 0.93 | 0.90 | 0.91 | 0.68 | 0.043 |

FIGURE 1. Confirmatory Factor Analysis Model.

FIGURE 2. Second-Order Latent Factor Model.


The internal consistency of the scale’s dimensions was ascertained using Cronbach’s Alpha. The coefficients were 0.77 for the first dimension, 0.81 for the second dimension, 0.80 for the third dimension, and 0.89 for the total scale score. These values fall within commonly accepted thresholds.
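For readers unfamiliar with the statistic, Cronbach's Alpha for a set of k items is k/(k-1) multiplied by one minus the ratio of the summed item variances to the variance of the total scores. The sketch below applies that standard formula to hypothetical responses; the function name `cronbach_alpha` and the data are illustrative assumptions and do not reproduce the study's SPSS output.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Transpose the rows so that each tuple holds one item's scores.
    items = list(zip(*rows))
    sum_item_variances = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum_item_variances / total_variance)


# Hypothetical responses: five respondents answering three Likert items
# (illustrative values only).
rows = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]

alpha = cronbach_alpha(rows)
print(f"Cronbach's alpha: {alpha:.2f}")
```

Alpha rises towards 1 as the items vary together, which is why it is read as a measure of internal consistency.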

3.6. Ethical Considerations

Central to this research was a steadfast commitment to the highest ethical standards. To ensure transparency, the study’s primary purpose was displayed prominently on the first page of the questionnaire so that participants clearly understood why the research was being conducted. Participants’ anonymity and confidentiality were carefully protected, and their identities remained concealed. Each student was informed of the study’s objectives and that participation was voluntary. In addition, they were informed of their rights, including the right to withdraw at any time. The study maintained a neutral stance, avoiding bias or undue influence, thereby protecting the veracity and credibility of the results.

4. RESULTS

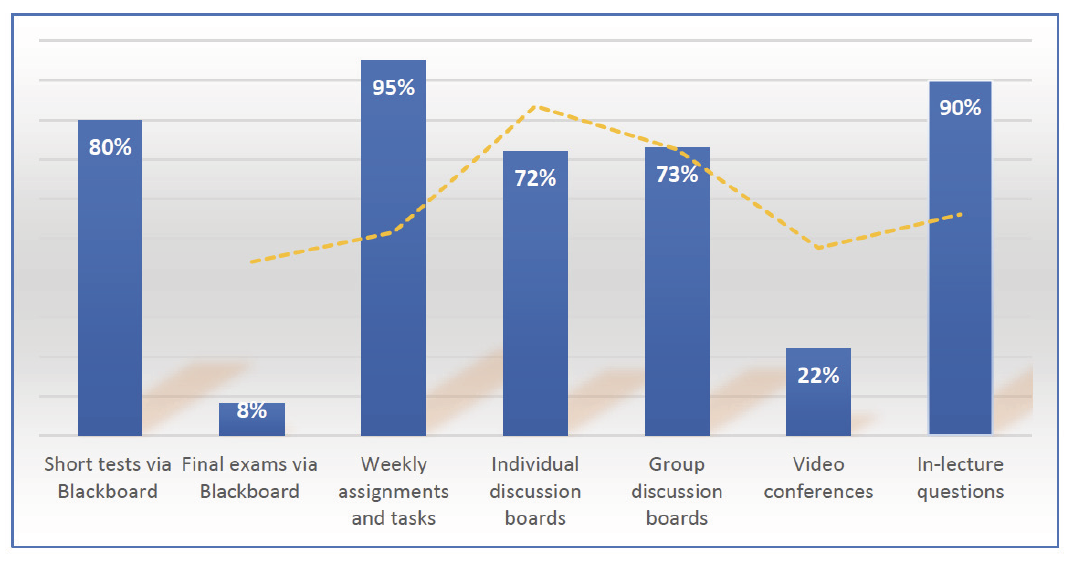

Upon comprehensive collation and examination of the participants’ responses, insights emerged concerning the first dimension, which focused on the predominant electronic evaluation methods employed by university faculty members. As illustrated in Figure 3, the weekly assignment technique emerged as the most frequently utilized approach. This was closely followed by real-time in-lecture queries. Subsequent methods in decreasing order of prevalence included short tests, group discussion forums, individual discussion panels, and video conferencing sessions between teachers and students, culminating with final examinations.

FIGURE 3. Electronic evaluation techniques most commonly employed by teaching staff.

Table 3 presents students’ mean perceptions of the acceptability of Blackboard’s electronic assessment methods. Overall, the results indicate that students favour electronic assessment methods on Blackboard, as shown by the overall mean of 3.42 and standard deviation of 0.77. This reflects both a positive aggregate response and reasonable consistency among student opinions. Among specific items, the statement with the highest mean (3.54) expresses students’ preference for Blackboard-based electronic performance evaluations. This suggests their adaptability to digital platforms and an appreciation of their efficiency and immediacy. Other highly rated items concern the coherence of electronic evaluations with instructional content, the faculty’s initiative in online assessments, and the promptness of feedback, all of which are integral to the educational process. In contrast, the items with slightly lower mean scores concern the faculty’s use of Blackboard to moderate discussions and administer assignments. This may indicate that students rate the platform’s assessment techniques slightly above its course management capabilities.

TABLE 3. Students’ Perceptions on Accepting Blackboard’s Electronic Assessment Methods.

| No | Item | Mean | SD | Acceptance level |
| --- | --- | --- | --- | --- |
| item12 | My preference leans towards undergoing performance evaluations utilizing electronic assessment modalities within the Blackboard platform. | 3.54 | 0.82 | High |
| item13 | I contend that electronic assessment methods on Blackboard align seamlessly with the course’s instructional content. | 3.49 | 0.90 | High |
| item10 | The initiative academic staff took to administer electronic examinations on the Blackboard platform meets with my approval. | 3.47 | 0.90 | High |
| item14 | Timely feedback on electronic assessment outcomes, as provided by faculty via Blackboard, garners my appreciation. | 3.42 | 0.92 | High |
| item11 | The prompt dissemination of our semesterly academic achievements on the Blackboard system by faculty members is commendable. | 3.40 | 0.87 | High |
| item9 | Faculty use of the Blackboard platform for curating course-related discussion forums and panels resonates positively with me. | 3.34 | 0.93 | Moderate |
| item8 | I value the faculty’s diligence in employing the Blackboard platform to submit and rectify course-centric assignments. | 3.30 | 0.94 | Moderate |
| TOTAL | | 3.42 | 0.77 | High |

Students’ perspectives regarding electronic assessment methods enabled by the Blackboard platform are presented in descending order of mean values in Table 4. The overall trend shows that students have a moderately positive attitude towards these methods, as evidenced by a mean of 3.23 and a standard deviation of 0.81. This indicates that opinions continue to vary despite a general consensus regarding the moderate favourability of Blackboard’s electronic assessments. The item on the dependability of video conference evaluations has the highest mean (3.40), which points to students’ confidence in real-time, interactive assessment methods. The subsequent items concern equity and impartiality; the item on prompt assessment feedback has a mean of 3.31, reflecting students’ appreciation of timely communication. Towards the bottom of the table, the mean values decrease slightly. The lowest score, 2.96, concerns the congruence between electronic evaluation scores and students’ actual competencies, which may imply scepticism regarding the veracity of online assessment results. The scores for the other items concerning impartiality, equity, and diverse assessment methods indicate that Blackboard’s efforts in these areas are generally acknowledged but leave room for improvement.

TABLE 4. Students’ Perceptions on Electronic Assessment via Blackboard in Descending Order.

| No | Item | Mean | SD | Acceptance level |
| --- | --- | --- | --- | --- |
| item16 | The electronic evaluation conducted through video conferences between instructors and students exhibits significant reliability. | 3.40 | 0.87 | High |
| item20 | Prompt feedback on electronic assessment outcomes via the Blackboard system is deemed to uphold the principle of equity among students. | 3.31 | 0.97 | Moderate |
| item19 | The varied assessment methodologies implemented on the Blackboard system are believed to accommodate individual student variances. | 3.25 | 0.98 | Moderate |
| item17 | Evaluation techniques utilized within the Blackboard system are thought to epitomize the essence of fairness for all students. | 3.23 | 0.98 | Moderate |
| item18 | The adherence to stringent control measures in electronic evaluations via the Blackboard system is instrumental in ensuring student equity. | 3.20 | 1.02 | Moderate |
| item15 | Scores attained by students in electronic evaluations through the Blackboard system are believed to mirror their actual proficiency authentically. | 2.96 | 0.99 | Moderate |
| TOTAL | | 3.23 | 0.81 | Moderate |
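The TOTAL rows of Tables 3 and 4 can be cross-checked against the item means. The sketch below assumes that each dimension score is the unweighted average of its items and that every respondent answered every item, in which case the dimension mean equals the mean of the item means. That scoring rule is an assumption, since the paper does not spell it out.

```python
from statistics import mean

# Item means copied from Table 3 (acceptance) and Table 4 (fairness/reliability)
table3_item_means = [3.54, 3.49, 3.47, 3.42, 3.40, 3.34, 3.30]
table4_item_means = [3.40, 3.31, 3.25, 3.23, 3.20, 2.96]

# ASSUMPTION: equal item weights and no missing responses
acceptance_total = mean(table3_item_means)
fairness_total = mean(table4_item_means)

print(f"Table 3 mean of item means: {acceptance_total:.3f}")
print(f"Table 4 mean of item means: {fairness_total:.3f}")
```

Both values agree with the reported totals (3.42 and 3.23) to rounding, and the Table 4 figure also agrees with the overall mean of 3.2254 reported for all 400 respondents in Table 5.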

To analyze differences in students’ perceptions of the reliability and fairness of electronic assessment approaches on the Blackboard platform, arithmetic means were computed and broken down by gender and academic discipline, as presented in Table 5.

TABLE 5. Arithmetic Averages Based on Each Tier of the Study Variables.

| Faculty | Gender | Mean | N | Std. Deviation |
| --- | --- | --- | --- | --- |
| Scientific | Female | 3.3044 | 98 | .73803 |
| Scientific | Male | 3.1578 | 94 | .92888 |
| Scientific | Total | 3.2326 | 192 | .83794 |
| Humanistic | Female | 3.2550 | 117 | .75954 |
| Humanistic | Male | 3.1722 | 91 | .79755 |
| Humanistic | Total | 3.2187 | 208 | .77559 |
| Total | Female | 3.2775 | 215 | .74847 |
| Total | Male | 3.1649 | 185 | .86445 |
| Total | Total | 3.2254 | 400 | .80513 |
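The marginal and overall means in Table 5 follow from the four cell means and their sample sizes as sample-size-weighted averages. The short sketch below reproduces them; the helper names `pooled_mean` and `marginal` are illustrative, while the cell values are copied from the table.

```python
# (faculty, gender) -> (cell mean, cell N), copied from Table 5
cells = {
    ("Scientific", "Female"): (3.3044, 98),
    ("Scientific", "Male"): (3.1578, 94),
    ("Humanistic", "Female"): (3.2550, 117),
    ("Humanistic", "Male"): (3.1722, 91),
}


def pooled_mean(selected):
    """Sample-size-weighted mean of (mean, n) pairs."""
    total_n = sum(n for _, n in selected)
    return sum(m * n for m, n in selected) / total_n


def marginal(index, level):
    """Pooled mean over all cells whose key matches `level` at position `index`."""
    return pooled_mean([v for key, v in cells.items() if key[index] == level])


scientific = marginal(0, "Scientific")
humanistic = marginal(0, "Humanistic")
female = marginal(1, "Female")
male = marginal(1, "Male")
overall = pooled_mean(list(cells.values()))

for label, value in [("Scientific", scientific), ("Humanistic", humanistic),
                     ("Female", female), ("Male", male), ("Overall", overall)]:
    print(f"{label}: {value:.4f}")
```

The recomputed values match the Total rows of Table 5 to within rounding of the reported cell means.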

Table 5 provides the mean perception scores and standard deviations by faculty type and gender. In the Scientific faculty, females had a mean score of 3.3044 and males a mean of 3.1578, giving an overall mean of 3.2326 for the faculty. In the Humanistic faculty, females averaged 3.2550 and males averaged 3.1722, giving an overall mean of 3.2187. Across both faculties, female participants had a mean score of 3.2775 and males a mean of 3.1649, with a total mean of 3.2254 for all 400 respondents. There are slight differences in perceptions between genders and faculties, but the overall sentiment is consistent. To determine whether these differences by gender and faculty, and their interaction, are statistically significant, a two-way analysis of variance (two-way ANOVA) was applied, as reported in Table 6.

TABLE 6. Two-Way ANOVA Results on Students’ Perceptions of Electronic Assessment via Blackboard (Gender & Department).

| Source | Type III Sum of Squares | df | Mean Square | F | Sig. |
| --- | --- | --- | --- | --- | --- |
| Gender | 1.304 | 1 | 1.304 | 2.007 | .157 |
| Faculty | .030 | 1 | .030 | .047 | .829 |
| Gender * Faculty | .101 | 1 | .101 | .155 | .694 |
| Error | 257.245 | 396 | .650 | | |
| Total | 4419.972 | 400 | | | |
| Corrected Total | 258.647 | 399 | | | |

Table 6 shows the ANOVA findings for gender and faculty on students’ perceptions of electronic assessment via Blackboard. The p-value for gender is .157, which is above the conventional significance level of .05, showing that gender has no significant influence on students’ viewpoints. Similarly, faculty has a p-value of .829, indicating that it has no significant influence on students’ opinions. The interaction of gender and faculty, labeled “Gender * Faculty” in the table, has a p-value of .694, indicating that the combined factor is not a significant predictor of students’ perceptions. In summary, the ANOVA results in Table 6 show that gender, faculty, and their interaction had no significant effect on participants’ perceptions of Blackboard’s electronic assessment.
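The F statistics in Table 6 can be recomputed from the reported sums of squares and degrees of freedom: each mean square is the sum of squares divided by its degrees of freedom, and each F is the effect mean square divided by the error mean square. The sketch below uses only the rounded values printed in the table, so small discrepancies in the third decimal are expected. It does not compute p-values, which would come from an F distribution with (1, 396) degrees of freedom.

```python
# Sums of squares and degrees of freedom copied from Table 6
effects = {
    "Gender": (1.304, 1),
    "Faculty": (0.030, 1),
    "Gender * Faculty": (0.101, 1),
}
ss_error, df_error = 257.245, 396

ms_error = ss_error / df_error  # error mean square

f_ratios = {}
for name, (ss, df) in effects.items():
    ms_effect = ss / df          # effect mean square
    f_ratios[name] = ms_effect / ms_error

print(f"MS error = {ms_error:.3f}")
for name, f_value in f_ratios.items():
    print(f"{name}: F = {f_value:.3f}")
```

All three recomputed ratios fall well below conventional critical values for 1 and 396 degrees of freedom, which is in line with the non-significant results reported above.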

5. DISCUSSION

To answer the first research question, concerning how students evaluate the electronic assessment techniques used by teachers within the Blackboard Learning Management System at tertiary institutions, the data revealed a diverse assessment environment. Weekly assignments were the most prominent technique, plausibly because faculty members value constant, ongoing contact with students. This technique not only allows teachers to track students’ academic progress continuously but also lets them fine-tune their teaching based on rapid feedback. The second most common strategy was real-time in-lecture questioning, which underlines the importance of live student interaction and comprehension checks during online lectures. Such real-time interactions are invaluable in a virtual classroom, where the usual signs of student confusion or inattention may go unnoticed.

Surprisingly, the study found a lower reliance on final exams in the digital format. This tendency may reflect an emerging educational perspective that is shifting away from high-stakes, end-of-term evaluations, particularly online. Possible reasons include logistical issues, academic integrity concerns in remote settings, and the inherent limits of online exam administration. Instead, there is a clear shift towards more formative, regular assessments that give students continuous feedback and opportunities for progress.

These findings are consistent with established educational theory and with previous studies. Bender (2023) emphasized that continuous feedback supports learning more effectively than episodic feedback. The shift towards active learning approaches, supported by scholars such as Ahmed et al. (2023), enhances student participation, which is especially important in virtual classrooms. Furthermore, the difficulties of preserving academic integrity in online examinations, as investigated by Al-Maqbali and Raja Hussain (2022), may have accelerated the migration to other assessment forms judged less prone to academic misconduct.

To answer the second research question, on students’ receptivity towards the electronic assessment techniques within the Blackboard system, the data are informative. The overall mean score of 3.42 indicates that students have a high preference for electronic assessment procedures. This is reinforced by the mean score of item 12 (3.54), which expresses students’ preference for computerized performance evaluations on the Blackboard platform. A closer look at the students’ responses suggests several explanations for this preference. The efficiency of computerized assessments is at the heart of this attitude. The streamlined nature of online exams, from electronic submission to automated grading for specific question types and the speedy release of results, contrasts sharply with older approaches. Because of this efficiency, students can quickly determine their academic standing and adjust their study habits accordingly. Furthermore, the near-instant feedback that platforms like Blackboard can provide is crucial. Unlike traditional approaches, which may require students to wait weeks for feedback on their performance, electronic evaluations encourage a proactive learning environment by enabling students to identify areas for growth and engage with the material more thoroughly.

Furthermore, the adaptability inherent in computerized examinations is critical. They can readily include various question types, such as multiple-choice and short-answer questions, interactive quizzes, and forum-based debates. This range of evaluation formats helps accommodate different learning styles, fostering a more inclusive academic atmosphere. Such an approach can resonate strongly with students, giving them numerous opportunities to demonstrate their understanding and skill sets. In a broader context, digital integration in modern life has conditioned students not only to adapt to but also to expect technology in their educational experiences. Growing up in a digitally dominated environment suggests that the current tertiary education cohort is well-versed in online tools. This comfort and familiarity with digital platforms may be the key to their acceptance and favourable reception of Blackboard for academic assessment.

When these data are contextualized with previous literature, it is clear that there is an increasing acceptance of digital learning platforms across student groups. For example, Ahmed et al. (2023) found that students perceive online learning environments as engaging and beneficial to their learning objectives. These platforms frequently give individualized and rapid feedback, which has been identified as an essential aspect of improving learning experiences. Furthermore, as Al-khresheh (2022b) emphasizes, the demand for electronic exams on platforms such as Blackboard reflects the trend towards using assessment as a learning tool rather than just a measurement tool. Given these widespread tendencies and supporting data, the current study’s favourable student response coincides with broader worldwide educational changes.

In addressing the third research question, on students’ views of the fairness and reliability of the electronic assessment strategies within the Blackboard platform, the findings offer several layers of understanding. The statistics show that students have a reasonable level of trust in these electronic evaluation approaches: the overall mean score (3.23) indicates a moderate level of perceived dependability. This implies that while students generally trust electronic evaluations, there may be areas of scepticism or concern. A closer look at individual items shows, for example, that students have only a moderate level of confidence that scores from electronic exams reflect their genuine proficiency (item 15, mean 2.96). This indicates a balance in student perceptions: they see the benefits of electronic evaluation but may also be concerned about its drawbacks, such as potential technology glitches or a lack of personal involvement in the assessment process.

Previous research has likewise found mixed student opinions towards online exams. Many students appreciate the flexibility and immediacy of online evaluations (Garg, & Goel, 2022). However, there are legitimate worries regarding the depersonalization of these evaluations, the risk of technological challenges, and the difficulty of guaranteeing equality and fairness in a remote testing context (Guangul et al., 2020; Kashyap et al., 2021). The moderate degree of confidence found in this study is therefore consistent with previous academic findings and reflects the ongoing tension between the benefits of digital innovation and the intricacies of human connection in educational evaluation.

The segmented data were examined to address the final research question, concerning the influence of gender and academic discipline on students’ opinions of the trustworthiness and fairness of electronic evaluations within the Blackboard system. The data show no statistically significant differences in perceptions by gender or faculty type. Male and female students, regardless of whether they were affiliated with scientific or humanistic faculties, expressed comparable views of electronic assessment systems. This could indicate that the Blackboard platform provides a consistent user experience, or it could reflect a broader cultural or institutional environment that fosters shared perspectives across demographic groups. Notably, the lack of a significant interaction between gender and academic discipline shows that these variables do not strongly influence students’ perceptions when combined.

The similarity in perceptions across genders is consistent with previous studies demonstrating the impact of technology on learning for both male and female students (Al-khresheh, 2022). Furthermore, while some research has found differences in perceptions between academic disciplines in traditional learning contexts (Almoeather, 2020; Azizan et al., 2022), the present findings indicate that such distinctions may be less prominent in an online environment. This could highlight the potential of digital learning platforms to provide more consistent access and experiences than traditional classroom settings.

5.1. Implications

This study’s findings provide useful insights for institutions and teachers employing or seeking to implement electronic evaluation methodologies, particularly within the Blackboard Learning Management System. The prevalence of weekly assignments underlines the importance of continuous assessment in contemporary educational settings. Such continuous monitoring provides immediate feedback and may improve student retention and engagement. Moreover, the frequent use of real-time in-lecture questions and discussions points to the value of blended learning. Educational institutions should prioritize faculty training in integrating these real-time interactions with traditional and online teaching methods.

The prevailing sentiment regarding the impartiality and dependability of electronic examinations on Blackboard indicates room for improvement. Institutions of higher education should focus on refining their online assessment protocols, possibly through sophisticated monitoring tools or the creation of assessments explicitly designed for the digital environment, to ensure integrity and fairness. Similarly, the consistent perception across gender and academic disciplines suggests that Blackboard is broadly applicable. Such consistency suggests that platform enhancements or modifications would benefit many students without the need for demographically targeted interventions.

The positive trend towards electronic evaluations within Blackboard allows curriculum designers to investigate this technological direction further. Continuous research and monitoring are required to ensure these innovations remain effective and equitable. Because students are the platform’s primary stakeholders and their acceptance is high, their feedback is invaluable. Institutions should actively engage with students and use their feedback to refine practices so that educational strategies remain adaptable and in step with students’ changing needs and preferences.

6. CONCLUSIONS

This research explored student perceptions regarding electronic assessment techniques within the Blackboard Learning Management System. The main findings revealed a strong preference among students for digital assessment strategies, particularly ongoing assessment mechanisms such as weekly assignments. In addition, students exhibited a high level of receptivity towards the diverse electronic assessment modalities made available through Blackboard, demonstrating the efficiency and adaptability of these assessment tools. Concerning the impartiality and dependability of these digital tools, students’ confidence in the system’s methods was moderate. Intriguingly, when evaluating perceptions based on gender and academic discipline, the results demonstrated uniformity, indicating a unified viewpoint among diverse student demographics. This study highlights a prevalent trend in which digital assessment tools, mainly via Blackboard, are highly regarded and integral, indicating a promising future for continued incorporation into higher education paradigms.

6.1. Limitations and future lines of research

This study, conducted at a single university, makes several noteworthy observations regarding students’ perceptions of Blackboard electronic assessments. However, its scope is limited. The pedagogical approach, technological infrastructure, and demographic nuances of this institution may differ significantly from those of other academic institutions, reducing the generalizability of the findings. Moreover, the self-reported nature of the data may introduce biases, due either to respondents’ propensity to provide socially desirable responses or to potential misinterpretations of the items. The study’s granularity did not extend to examining differences between individual departments or courses, which may employ distinct electronic evaluation strategies.

Given these constraints, future research would benefit significantly from a broader, perhaps inter-institutional or international perspective. This broader scope could provide a more complete comprehension, highlighting best practices and subtle differences in students’ perceptions across diverse educational environments. Incorporating mixed-method research designs combining quantitative and qualitative approaches may yield more profound and nuanced insights. In addition, as educational technologies continue to advance, with the incorporation of tools such as artificial intelligence, virtual reality, and sophisticated analytics, a forward-looking research strategy should be employed to investigate their potential symbiosis with established platforms such as Blackboard, ensuring that evaluations remain relevant and optimized in a rapidly evolving digital educational ecosystem.

7. FUNDING

The paper received no funding.

8. REFERENCES

Aburumman, M. F. (2021). E-assessment of students’ activities during covid-19 pandemic: Challenges, advantages, and disadvantages. International Journal of Contemporary Management and Information Technology, 2(1), 1-7.

Agung, A. S. N., Surtikanti, M. W., & Quinones, C. A. (2020). Students’ perception of online learning during COVID-19 pandemic: A case study on the English students of STKIP Pamane Talino. SOSHUM: Jurnal Sosial Dan Humaniora, 10(2), 225-235. https://doi.org/10.31940/soshum.v10i2.1316

Ahmed, S., Noor, A. S. M., Khan, W. Z., Mehmood, A., Shaheen, R., & Fatima, T. (2023). Students’ perception and acceptance of e-learning and e-evaluation in higher education. Pakistan Journal of Life & Social Sciences, 21(1), 86-95. https://doi.org/10.57239/PJLSS-2023-21.1.007

Alam, I., Qasim, A., Shah, A. H., & Kumar, T. (2023). Blackboard Collaborate: COVID-19 impacts on EFL classroom learning and knowledge on first year university students. International Journal of Knowledge and Learning, 16(3), 221-237. https://doi.org/10.1504/IJKL.2023.132159

Al-Azawei, A., Baiee, W. R., & Mohammed, M. A. (2019). Learners’ Experience Towards E-Assessment Tools: A Comparative Study on Virtual Reality and Moodle Quiz. International Journal of Emerging Technologies in Learning, 14(05), 34-50. https://doi.org/10.3991/ijet.v14i05.9998

Alblaihed, M. (2023). Attitudes of faculty members at the University of Hail towards using the blackboard as a distance-learning system. St. Theresa Journal of Humanities and Social Sciences, 9(1), 1-23.

Alenezi, M. (2023). Digital learning and digital institution in higher education. Education Sciences, 13(1), e88. https://doi.org/10.3390/educsci13010088

Al-khresheh, M. (2021). Revisiting the effectiveness of Blackboard learning management system in teaching English in the era of COVID 19. World Journal of English Language, 12(1), 1-14. https://doi.org/10.5430/wjel.v12n1p1

Al-khresheh, M. (2022a). The Impact of COVID-19 pandemic on teachers’ creativity of online teaching classrooms in the Saudi EFL context. Frontiers in Education, 7(1041446), 1-11. https://doi.org/10.3389/feduc.2022.1041446

Al-khresheh, M. (2022b). Teachers’ perceptions of promoting student-centred learning environment: an exploratory study of teachers’ behaviours in the Saudi EFL context. Journal of Language and Education, 8(3), 23-39. https://doi.org/10.17323/jle.2022.11917

Al-Maqbali, A. H., & Raja Hussain, R. M. (2022). The impact of online assessment challenges on assessment principles during COVID-19 in Oman. Journal of University Teaching and Learning Practice, 19(2), 73-92. https://doi.org/10.53761/1.19.2.6

Almoeather, R. (2020). Effectiveness of blackboard and Edmodo in self-regulated learning and educational satisfaction. Turkish Online Journal of Distance Education, 21(2), 126-140. https://doi.org/10.17718/tojde.728140

Almufarreh, A., Arshad, M., & Mohammed, S. H. (2021). An efficient utilization of blackboard ally in higher education institution. Intelligent Automation & Soft Computing, 29(1). https://doi.org/10.32604/iasc.2021.017803

AlTameemy, F. A., Alrefaee, Y., & Alalwi, F. S. (2020). Using blackboard as a tool of e-assessment in testing writing skill in Saudi Arabia. Asian ESP, 16(6.2).

Alyadumi, Y. A. M., & Falcioglu, P. (2023). Satisfaction of higher education students with blackboard learning system during Covid-19. Journal of Management Marketing and Logistics, 10(2), 72-84. https://doi.org/10.17261/pressacademia.2023.1744

AL-Qadri, A. H., & Zhao, W. (2021). Emotional intelligence and students’ academic achievement. Problems of Education in the 21st Century, 79(3), 360. https://doi.org/10.33225/pec/21.79.00

Azizan, S. N., Lee, A. S. H., Crosling, G., Atherton, G., Arulanandam, B. V., Lee, C. E. C., & Abdul Rahim, R. B. (2022). Online learning and covid-19 in higher education: the value of it models in assessing students’ satisfaction. International Journal of Emerging Technologies in Learning, 17(03), 245-278. https://doi.org/10.3991/ijet.v17i03.24871

Baig, M., Gazzaz, Z. J., & Farouq, M. (2020). Blended Learning: The impact of blackboard formative assessment on the final marks and students’ perception of its effectiveness. Pakistan Journal of Medical Sciences, 36(3), 327-332. https://doi.org/10.12669/pjms.36.3.1925

Ball, H. L. (2019). Conducting online surveys. Journal of Human Lactation, 35(3), 413-417.

Baron, J. V. (2023). Blackboard system and students’ academic performance: an experimental study in the Philippines. Journal of Social, Humanity, and Education, 3(3), 173-184. https://doi.org/10.35912/jshe.v3i3.1186

Bender, T. (2023). Discussion-based online teaching to enhance student learning: Theory, practice and assessment. Taylor & Francis.

Bizami, N. A., Tasir, Z., & Kew, S. N. (2022). Innovative pedagogical principles and technological tools capabilities for immersive blended learning: a systematic literature review. Education and Information Technologies, 28(2), 1373–1425. https://doi.org/10.1007/s10639-022-11243-w

Challa, K. T., Sayed, A., & Acharya, Y. (2021). Modern techniques of teaching and learning in medical education: a descriptive literature review. MedEdPublish, 10(18). https://doi.org/10.15694/mep.2021.000018.1

Dahlstrom-Hakki, I., Alstad, Z., & Banerjee, M. (2020). Comparing synchronous and asynchronous online discussions for students with disabilities: The impact of social presence. Computers & Education, 150, 103842. https://doi.org/10.1016/j.compedu.2020.103842

Davidova, D. (2023). Comparative analysis of traditional and new assessment methods in specialized schools. Science and innovation, 2(7), 183-184. https://doi.org/10.5281/zenodo.8185594

Dziubaniuk, O., Ivanova-Gongne, M., & Nyholm, M. (2023). Learning and teaching sustainable business in the digital era: a connectivism theory approach. International Journal of Educational Technology in Higher Education, 20(1), 1-23. https://doi.org/10.1186/s41239-023-00390-w

Fauzani, R. A., Senen, A., & Retnawati, H. (2021). Challenges for elementary school teachers in attitude assessment during online learning. Journal of Education Research and Evaluation, 5(3), 362-372. https://doi.org/10.23887/jere.v5i3.33226

García-Morales, V. J., Garrido-Moreno, A., & Martín-Rojas, R. (2021). The transformation of higher education after the COVID disruption: emerging challenges in an online learning scenario. Frontiers in Psychology, 12, 616059. https://doi.org/10.3389/fpsyg.2021.616059

Garg, M., & Goel, A. (2022). A systematic literature review on online assessment security: Current challenges and integrity strategies. Computers & Security, 113, 102544. https://doi.org/10.1016/j.cose.2021.102544

Gonzales Tito, Y. M., Quintanilla López, L. N., & Pérez Gamboa, A. J. (2023). Metaverse and education: a complex space for the next educational revolution. Metaverse Basic and Applied Research, 2(56), 1-10. https://doi.org/10.56294/mr202356

Guangul, F. M., Suhail, A. H., Khalit, M. I., & Khidhir, B. A. (2020). Challenges of remote assessment in higher education in the context of COVID-19: a case study of Middle East College. Educational Assessment, Evaluation and Accountability, 32(4), 519–535. https://doi.org/10.1007/s11092-020-09340-w

Guoyan, S., Khaskheli, A., Raza, S. A., Khan, K. A., & Hakim, F. (2021). Teachers’ self-efficacy, mental well-being and continuance commitment of using learning management system during COVID-19 pandemic: a comparative study of Pakistan and Malaysia. Interactive Learning Environments, 31(7), 4652–4674. https://doi.org/10.1080/10494820.2021.1978503

Habib, M. N., Jamal, W., Khalil, U., & Khan, Z. (2020). Transforming universities in interactive digital platform: case of city university of science and information technology. Education and Information Technologies, 26(1), 517–541. https://doi.org/10.1007/s10639-020-10237-w

Haleem, A., Javaid, M., Qadri, M. A., & Suman, R. (2022). Understanding the role of digital technologies in education: A review. Sustainable Operations and Computers, 3, 275–285. https://doi.org/10.1016/j.susoc.2022.05.004

Hezam, A. M. M., & Mahyoub, R. A. M. (2022). Saudi university students’ perceptions towards language learning via blackboard during COVID-19 pandemic: a case study of Department of Languages and Translation, Taibah University, Al-Ula Campus. Advances in Social Sciences Research Journal, 9(11), 360–372. https://doi.org/10.14738/assrj.911.12730

Hosseini, M. M., Egodawatte, G., & Ruzgar, N. S. (2021). Online assessment in a business department during COVID-19: Challenges and practices. The International Journal of Management Education, 19(3), 100556. https://doi.org/10.1016/j.ijme.2021.100556

Huang, J., Saleh, S., & Liu, Y. (2021). A review on artificial intelligence in education. Academic Journal of Interdisciplinary Studies, 10(3), 206. https://doi.org/10.36941/ajis-2021-0077

Iqbal, S. A., Ashiq, M., Rehman, S. U., Rashid, S., & Tayyab, N. (2022). Students’ perceptions and experiences of online education in Pakistani universities and higher education institutes during COVID-19. Education Sciences, 12(3), e166. https://doi.org/10.3390/educsci12030166

Kashyap, A. M., Sailaja, S. V., Srinivas, K. V. R., & Raju, S. S. (2021). Challenges in online teaching amidst COVID crisis: Impact on engineering educators of different levels. Journal of Engineering Education Transformations, 34(1), 38–43. https://doi.org/10.16920/jeet/2021/v34i0/157103

Kiryakova, G. (2021). E-assessment – beyond the traditional assessment in digital environment. IOP Conference Series: Materials Science and Engineering, 1031(1), 012063. https://doi.org/10.1088/1757-899x/1031/1/012063

Lai, J. W., & Bower, M. (2019). How is the use of technology in education evaluated? A systematic review. Computers & Education, 133, 27–42. https://doi.org/10.1016/j.compedu.2019.01.010

Liu, Z. Y., Lomovtseva, N., & Korobeynikova, E. (2020). Online learning platforms: Reconstructing modern higher education. International Journal of Emerging Technologies in Learning (iJET), 15(13), 4–21. https://doi.org/10.3991/ijet.v15i13.14645

Malik, G., Tayal, D. K., & Vij, S. (2019). An analysis of the role of artificial intelligence in education and teaching. In P. Sa, S. Bakshi, I. Hatzilygeroudis & M. Sahoo (Eds.), Recent Findings in Intelligent Computing Techniques. Advances in Intelligent Systems and Computing (Vol. 707, pp. 407–417). Springer. https://doi.org/10.1007/978-981-10-8639-7_42

Mampota, S., Mokhets’engoane, S. J., & Kurata, L. (2023). Connectivism theory: Exploring its relevance in informing Lesotho’s integrated curriculum for effective learning in the digital age. European Journal of Education and Pedagogy, 4(4), 6–12. https://doi.org/10.24018/ejedu.2023.4.4.705

Marevci, F., & Salihu, A. (2023). The effect of strengths/opportunities and weaknesses/challenges on online learning during the COVID-19 pandemic. International Journal of Emerging Technologies in Learning (iJET), 18(17), 168–183. https://doi.org/10.3991/ijet.v18i17.39517

Martin, F., & Bolliger, D. U. (2018). Engagement matters: student perceptions on the importance of engagement strategies in the online learning environment. Online Learning, 22(1), 205–222. https://doi.org/10.24059/olj.v22i1.1092

Meirbekov, A., Maslova, I., & Gallyamova, Z. (2022). Digital education tools for critical thinking development. Thinking Skills and Creativity, 44, 101023. https://doi.org/10.1016/j.tsc.2022.101023

Munir, H., Vogel, B., & Jacobsson, A. (2022). Artificial intelligence and machine learning approaches in digital education: A systematic revision. Information, 13(4), e203. https://doi.org/10.3390/info13040203

Onyekwere, J., & Enamul Hoque, K. (2023). Relationship between technological change, digitization, and students’ attitudes toward distance learning in Lagos Higher Education institutes. Innoeduca. International Journal of Technology and Educational Innovation, 9(1), 126–142. https://doi.org/10.24310/innoeduca.2023.v9i1.15286

Padilla-Hernández, A. L., Gámiz-Sánchez, V. M., & Romero-López, M. A. (2019). Proficiency levels of teachers’ digital competence: A review of recent international frameworks. Innoeduca. International Journal of Technology and Educational Innovation, 5(2), 140–150. https://doi.org/10.24310/innoeduca.2019.v5i2.5600

Rajesh, P. K., & Sethuraman, K. R. (2020). Strengths, weaknesses, opportunities and challenges (SWOC) of online teaching learning and assessment in a medical faculty. Asian Journal of Medicine and Health Sciences, 3(2), 68–71.

Rakha, A. H. (2023). The impact of Blackboard Collaborate breakout groups on the cognitive achievement of physical education teaching styles during the COVID-19 pandemic. PLOS ONE, 18(1), e0279921. https://doi.org/10.1371/journal.pone.0279921

Ratten, V. (2023). The post COVID-19 pandemic era: Changes in teaching and learning methods for management educators. The International Journal of Management Education, 21(2), 100777. https://doi.org/10.1016/j.ijme.2023.100777

Saadati, Z., Zeki, C. P., & Vatankhah Barenji, R. (2021). On the development of blockchain-based learning management system as a metacognitive tool to support self-regulation learning in online higher education. Interactive Learning Environments, 31(5), 3148–3171. https://doi.org/10.1080/10494820.2021.1920429

Slattery, E. L., Voelker, C. C., Nussenbaum, B., Rich, J. T., Paniello, R. C., & Neely, J. G. (2011). A practical guide to surveys and questionnaires. Otolaryngology–Head and Neck Surgery, 144(6), 831–837.

Tang, T. T., Nguyen, T. N., & Tran, H. T. T. (2022). Vietnamese teachers’ acceptance to use e-assessment tools in teaching: An empirical study using PLS-SEM. Contemporary Educational Technology, 14(3), ep375. https://doi.org/10.30935/cedtech/12106

Therisa Beena, K. K., & Sony, M. (2022). Student workload assessment for online learning: An empirical analysis during Covid-19. Cogent Engineering, 9(1), e2010509. https://doi.org/10.1080/23311916.2021.2010509

Tseng, H. (2020). An exploratory study of students’ perceptions of learning management system utilisation and learning community. Research in Learning Technology, 28(0). https://doi.org/10.25304/rlt.v28.2423

Veluvali, P., & Surisetti, J. (2022). Learning management system for greater learner engagement in higher education—A review. Higher Education for the Future, 9(1), 107-121. https://doi.org/10.1177/23476311211049855