of Technology and Educational Innovation

Vol. 10. No. 1. June 2024 - pp. 65-80 - ISSN: 2444-2925

DOI: https://doi.org/10.24310/ijtei.101.2024.17672

An Effect of Assessment Delivery Methods on Accounting Students’ Grades in an E-learning Environment

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International license.

1. INTRODUCTION

Assessment is defined in the literature as the process of gathering, describing, or quantifying information about student performance (e.g., diagnosing students’ strengths and weaknesses and grading student performance for certification purposes) (Marriott, 2009; Rovai, 2000). Assessment is a critical part of the education process (Jimaa, 2011). General assessment theory distinguishes two types of assessment by their timing: summative and formative. Summative assessment, also known as traditional assessment, is usually used as evidence for grading students at the end of a subject. Formative assessment aims to improve education and learning and is conducted over the course of teaching to monitor student learning (Rovai, 2000).

Identifying appropriate assessment tasks in an online learning environment is an overriding concern for universities that want to discover what students learn more systematically and reliably (White, 2021). This concern has grown as universities have sought the best assessment activities for integrating online assessment into the educational system, and it has become essential for them to identify the most effective online practices for assessing learning outcomes. The issue came to the fore again with the abrupt closure of universities and the shift to distance education, when online assessment was their only viable option.

Rovai (2000) noted that assessments themselves do not change in an online learning setting; what changes is the manner in which they are implemented. The online learning environment and its digital tools offer an excellent opportunity to implement numerous innovative assessment methods that promote student learning outcomes (Rogerson-Revell, 2015). Various delivery methods are available for assessing student progress in an e-learning environment. They take three distinct forms: fully online assessment, traditional face-to-face assessment, or a combination of both. The fully online assessment modality involves three forms of assessment: (a) an unsupervised online assessment, (b) a supervised online assessment, and (c) a face-to-face assessment conducted in a classroom within the system (Guangul et al., 2020).

The COVID-19 pandemic compelled many universities to shift toward online learning and assessment, and they had to adopt distinct assessment practices and means of delivery and submission to ensure that student progress and learning outcomes could still be measured. In Saudi Arabia, the rapid transition to distance education led universities to use a range of alternative online assessments, especially in the first year of this sudden shift, when fully online assessment replaced traditional on-campus paper assessment in the second semester and summer semester of 2019/2020. These alternatives included both summative and formative activities and were usually un-invigilated, taking the form of timed LMS exams, take-home exams, or assignments.

Most studies conducted in Saudi Arabia show that instructors used summative assessments most often, while formative assessments were used less often during the pandemic (Almossa, & Alzahrani, 2022; Guangul et al., 2020). The implementation of online assessment activities contributes to promoting undergraduates’ academic performance (Oguguo et al., 2023; Thathsarani et al., 2023).

Although these alternative assessment methods were adopted, their implementation has been the main challenge for lecturers and students. Guangul et al. (2020) noted that the main challenges identified in online assessment during the shift towards online learning were academic misconduct, infrastructure, coverage of learning outcomes, and students’ commitment to submitting assessment activities. Further, delivering efficient and reliable methods for assessing student learning achievement through an online learning system is a complicated process, particularly when lecturers and students are not prepared and assessment security software is lacking (Gamage et al., 2020; Paz Saavedra, 2022; Sharadgah, & Sa’di, 2020).

Nevertheless, while the pandemic was still raging in the academic year 2020-2021, Saudi universities reconsidered alternative assessment delivery methods and decided to return partially to traditional assessment by adopting on-campus mid-term and final exams, while continuing online learning delivery and some online assessment activities. This shift to a hybrid assessment, designed as a mix of online and pen-and-paper assessment activities, could be attributed primarily to preserving academic honesty and maintaining educational standards (Bilen, 2021; White, 2021). Newton (2020) indicated that many universities reported widespread cheating in the second semester of 2019/20, during the first year of the COVID-19 pandemic.

The landscape of education is rapidly changing, with advancements in technology and evolving pedagogical approaches leading to innovative methods of delivering assessments. In the context of accounting education, it is essential to explore the impact of various assessment delivery methods on students’ academic performance. Moreover, the experience described above led to the adoption of two forms of assessment delivery in a synchronous online teaching environment during the pandemic: 1) fully online assessment and 2) hybrid assessment. In this study, hybrid assessment is defined as a mixed assessment practice that combines online and traditional assessment modalities. Hence, two research questions arose. First, does the change in assessment delivery, from a fully online assessment to a hybrid assessment, affect students’ academic achievement in an online learning environment during the COVID-19 pandemic? Second, do we observe a gender gap in the final course score across different assessment delivery methods? The aim of this study is therefore to investigate whether a change in assessment delivery, from a fully online assessment to a hybrid assessment, affected accounting students’ grades during the COVID-19 pandemic, and to examine the gender difference in the final course score across different assessment delivery methods. This study also considers whether this change in assessment methods enabled universities to achieve their targets of improving assessment practices and ensuring academic integrity.

To reach the goal of this study, the effect of assessment delivery methods on the final course grade of accounting students was compared between a fully online assessment and a mix of online and traditional pen-and-paper assessments. Grades were compiled from two courses during the summer semester of 2019/2020 and the second semester of 2020/2021. The courses selected for this study were the Internal Audit and the Financial Reports Analysis courses. At present, there seem to be no studies that have explored how learning outcomes may be affected by the delivery methods of alternative assessments used during COVID-19. Thus, this study could contribute to the emerging literature on how differences in assessment delivery methods affect student performance in an online learning environment in an accounting context.

The rest of this paper is organized as follows. The next section reviews the literature related to this research. Section 3 describes the research methodology. Section 4 presents the results of this research. Finally, a discussion is conducted in Section 5, and the conclusion is revealed in Section 6.

2. LITERATURE REVIEW AND HYPOTHESES DEVELOPMENT

The use of e-learning systems and their digital tools in assessment is appreciated by accounting students compared with traditional on-campus paper assessment (Helfaya, 2019; Marriott, 2009), and their performance improves with online assessments (Aisbitt, & Sangster, 2005; Duncan et al., 2012; Haidar, 2022). There is a positive and significant relationship between students’ use of e-learning systems and their performance in the final assessments in accounting courses (Perera, & Richardson, 2010).

However, assessment methods have caused major anxiety for students with the sudden shift to online studying during the COVID-19 Pandemic (Bautista Flores et al., 2022). These students had often become entirely preoccupied with assessment tasks and their influence on their assigned grades and they might have reservations about the type of online assessment practices. Perhaps most troubling was the negative impact that assessment methods can have on the overall satisfaction with the online learning system adoption. Almossa (2021) found that the challenges of the sudden shift in learning mode and alterations in assessment methods highly impacted students’ engagement with learning and assessment. Overall, some studies indicate that assessment methods had the general effect of altering student behavior (Chen et al., 2022; Schinske, & Tanner, 2014) and that students may hold fairly positive, and at least not overly negative, attitudes towards online assessment methods (Hewson, 2012).

2.1. The delivery of assessment methods and student’s grade

Most studies have used the final grade as an indicator of students’ learning results and performance from several angles: 1) the effects of different learning styles (Hsiao, 2021); 2) the comparison of online courses to traditional courses (Bunn et al., 2014; Cater et al., 2012; Hafeez et al., 2022; Idrizi et al., 2021); and 3) the effects of testing (Aisbitt, & Sangster, 2005; Brallier et al., 2015; Gomaa et al., 2021; Perera, & Richardson, 2010; Pierce, 2011; Yates & Beaudrie, 2009). All these studies demonstrated that differences in the delivery of learning and assessment methods affected performance, learning outcomes, and grades.

Studies of students in different subject areas, at different levels, and over different time periods have produced mixed results. Some found that students’ grades on online and traditional face-to-face assessments did not differ substantially (e.g., Da Silva et al., 2015; Yates and Beaudrie, 2009). Others found that students’ grades on online assessments were higher than those on traditional face-to-face assessments (Soffer, & Nachmias, 2018). In contrast, other studies found that students in traditional learning had more successful outcomes overall than those in online learning (Cater et al., 2012; Glazier et al., 2020).

Nevertheless, very few studies have discussed the effect of both online and traditional face-to-face assessment delivery methods used by lecturers on students’ overall scores in a synchronous online course in the accounting context (e.g., Da Silva et al., 2015; Gomaa et al., 2021). Furthermore, there is a lack of recent research directly studying the effect of those methods on student grades during the COVID-19 pandemic; most of the noted studies focused on comparing students’ learning outcomes and their perceptions of online learning delivery modes. To our knowledge, no study has discussed the effect of fully online and hybrid assessment delivery on students’ achievement outcomes in a synchronous online learning environment. However, studies that have discussed certain forms of assessment delivery methods can be used to support this study and its results.

For example, Da Silva et al. (2015) investigated the effect of the delivery of online assessment methods based on learning styles on final course grades. They found that the grades obtained by students in unsupervised online assessment activities were higher than those obtained in supervised online assessment activities. Likewise, Gomaa et al. (2021) found that the average student grade in unproctored online exams was higher than that in proctored in-class online exams in an intermediate accounting course. On the other hand, Bunn et al. (2014) found that students in the traditional classroom (Intermediate Accounting I) had higher mean scores than those online. Cater et al. (2012) also demonstrated that students in the traditional classroom outperformed students in the online classroom on tests, with a higher average final course grade for traditional students.

A recent study concluded that student performance in completely online classes during the COVID-19 Pandemic was as effective as the pre-pandemic traditional learning classes, and students’ final course marks showed that the participants in the online classes performed as effectively as participants in the conventional learning classes (Hew et al., 2020). On the other hand, Eurboonyanun et al. (2020) found that medical students who took the online assessment had significantly higher mean scores than those who took traditional assessments (the traditional written examinations).

In a different educational context, Yates and Beaudrie (2009) compared the impact of unsupervised and supervised assessments on students’ grades in mathematics courses in a distance education environment. Their findings revealed no significant difference between the grades of students who took exams in the classroom and those of students who took unsupervised online exams. Pierce (2011) also found no differences in students’ grades on unsupervised online assessment versus supervised face-to-face assessment for three of four exams in a pharmacy program, while a significant difference in grades was found for the remaining exam. In contrast, Brallier et al. (2015) found a significant difference in grades between un-proctored online assessment activities and traditional proctored paper-and-pencil assessments in a psychology course.

Although some findings on differences in students’ grades were contradictory, this study proposes the following two alternative hypotheses: first, accounting students who took a fully online assessment earn scores that differ from those of students who took a hybrid assessment in the same synchronous e-learning environment during the COVID-19 pandemic; and second, the change in assessment delivery method, from fully online to hybrid assessment, is reflected in students’ learning results with respect to the assessment targets and academic integrity.

2.2. Student’s gender

In recent decades, it has been noted that female students perform better on a range of indicators, the most important of which are grades: female students outperform male students in learning results not only in higher education but also in school education (e.g., Voyer, & Voyer, 2014; Workman, & Heyder, 2020). The female advantage in learning outcomes is pronounced when online and traditional learning modes are compared. For instance, female students earn higher grades than their male peers in online learning mode versus conventional learning mode (Harvey et al., 2017; Hsiao, 2021; Idrizi et al., 2021; Noroozi et al., 2020). A review of the related literature before 2010 reveals no difference between males and females in their grades in e-learning environments (e.g., Astleitner, & Steinberg, 2005; Cuadrado-García et al., 2010; James et al., 2016), but conventional views of gender comparison may not be in keeping with the digital era of learning, which involves wide use of e-learning, online sources, and other multimedia tools to enhance learning abilities.

Overall, findings on gender’s impact on student outcomes are mixed, whether in the regular classroom or online. Given these inconsistent findings, this study proposed an alternative hypothesis that students’ scores differ by gender, which is attributable to the difference in assessment delivery methods in the same e-learning environment.

3. MATERIAL AND METHOD

3.1. Procedure

The current study focused mainly on assessment activities delivered as online and hybrid assessments (a mix of online and traditional pen-and-paper assessments) during two semesters. Ethical approval to conduct this study was obtained from the Research Ethics Committee (Ethics Number: MUREC-jun.5/com-2022/7-3). Students’ grades for the Internal Audit (IAC) and Financial Reports Analysis (FRAC) courses were obtained from the Edugate system. The final course grade, the measure used in this study, is regarded by Tucker (2000) and Soffer and Nachmias (2018) as a better indicator for determining whether there is a significant difference between groups in the education system.

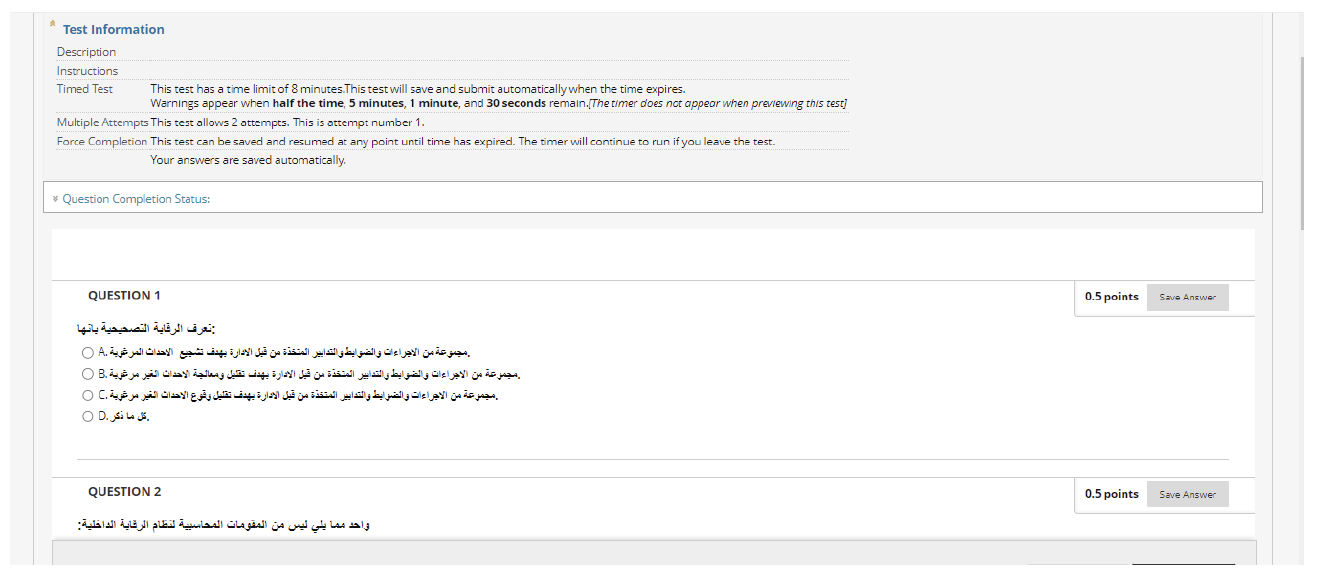

These two courses were selected because they covered two different assessment delivery methods (a fully online and a hybrid assessment). In the first method, the fully online assessment activities accounted for the total course grade (100%) over the summer semester of 2019/20. The online assessment activities comprised: 1) the online final exam at the end of the course (20% of the course score), composed of multiple-choice, true/false, and matching questions drawn from the question bank, and 2) multiple asynchronous activities over the semester (80% of the course score), composed of discussion forums, assignments, quizzes that were also drawn from the question bank, student exhibits, and lecturer observation. In all cases, students were required to take the unproctored tests online within Blackboard. Figure 1 presents a sample screenshot of the online quizzes.

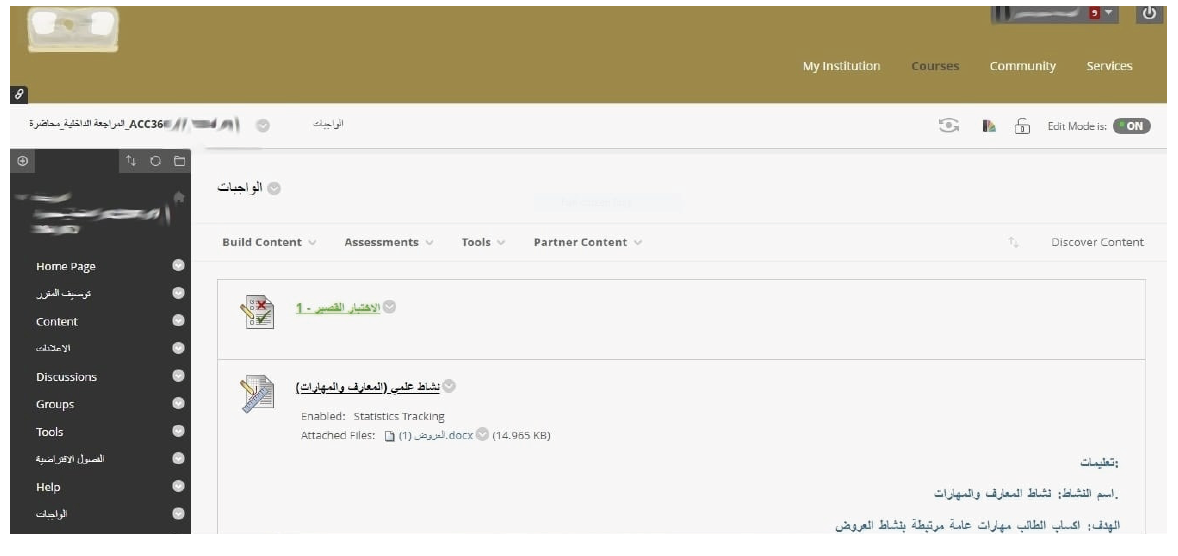

In the second method, the hybrid assessment depended mainly on traditional proctored face-to-face exams (paper-based tests) at the mid-term and the end of the course (80% of the course score). The rest of the assessment (20% of the course score) was composed of online assessment activities over the second semester of 2020/21. Figure 2 presents a sample screenshot of the assessment activities page of the Internal Audit course. All online assessment activities were asynchronous; that is, students completed activities within specified time frames and deadlines but were not required to be online at any specific time.

Thus, the final course grade is the dependent variable of the study. The independent variable is the assessment delivery method. Also, gender is considered as the moderator variable that may affect the relationship between independent and dependent variables.

FIGURE 1. A sample screenshot of the online quizzes.

FIGURE 2. A sample screenshot of assessment activities page of Internal Audit course.

3.2. Data collection

Grade data for 473 students enrolled in two courses of the accounting program, the Internal Audit course and the Financial Reports Analysis course, were collected when the COVID-19 pandemic required a sudden change to online distance learning. The data were collected across two semesters for both courses (summer semester 2019/20 and second semester 2020/21). The first category of data comprises the scores of 224 students (47.4%) earned from the fully online assessment activities during the summer semester of 2019/20. The second category comprises the scores of 249 students (52.6%) earned from the hybrid assessment in the second semester of 2020/21, as shown in Table 1.

3.3. Data analysis

The data were analyzed statistically using SPSS 24.0. First, a t-test and a chi-square test were performed at a significance level of 0.05 to examine whether any statistically significant differences in final scores existed between the fully online and the hybrid assessments. The assessment delivery method and students’ grades were used as the independent and dependent variables, respectively. Second, a two-way ANOVA at a significance level of 0.05 was used to determine whether the final grade differed significantly by assessment delivery method and gender, and to examine the effect of gender on mean scores. Gender and assessment delivery method were the independent variables, and the final course score was the dependent variable.

TABLE 1. Descriptive statistics and sample characterization.

| Characteristic | N | % | Minimum | Maximum | Mean | SD |
|---|---|---|---|---|---|---|
| Online course | | | | | | |
| Internal Audit | 258 | 54.5 | 10.00 | 98.00 | 76.20 | 12.63 |
| Financial Reports Analysis | 215 | 45.5 | 16.00 | 99.00 | 79.27 | 14.11 |
| Type of assessment | | | | | | |
| Fully online assessment (summer 2019/20) | 224 | 47.4 | 16.00 | 98.00 | 81.81 | 10.87 |
| Hybrid assessment (second semester 2020/21) | 249 | 52.6 | 10.00 | 99.00 | 73.80 | 14.31 |
| Gender | | | | | | |
| Male | 320 | 67.7 | 10.00 | 98.00 | 74.27 | 13.66 |
| Female | 153 | 32.3 | 56.00 | 99.00 | 84.55 | 9.68 |

SD: standard deviation.

4. RESULTS

The final grades were first examined using descriptive statistics, as shown in Table 1. The mean scores of the two methods differed: the mean score of students assessed fully online (M = 81.81, N = 224, SD = 10.87) was higher than that of students assessed by the hybrid method (M = 73.80, N = 249, SD = 14.31), and the two methods had different SDs. Regarding gender, female students had a higher mean score (M = 84.55, N = 153, SD = 9.68) than male students (M = 74.27, N = 320, SD = 13.66), and the SDs of the two groups also differed. Moreover, a smaller difference was observed between the mean scores in the Internal Audit course (M = 76.20, N = 258, SD = 12.63) and the Financial Reports Analysis course (M = 79.27, N = 215, SD = 14.11), but it was small compared with the difference between assessment delivery methods, as will be noted later in this section.

4.1. The effect of assessment delivery methods on student’s final course grade

First, an independent-samples t-test was performed to determine whether there was a statistically significant difference between the final grades of students who were assessed entirely online and those assessed by the hybrid method (a mix of online and pen-and-paper assessments), for both the Internal Audit course (IA course) and the Financial Reports Analysis course (FRA course). The results indicated that grades in each course were significantly higher with fully online than with hybrid assessment. For the IA course, the difference was statistically significant (t = 4.72, p < .05): the mean score of students assessed fully online (M = 79.73) was significantly higher than that of students assessed by the hybrid method (M = 72.57). In the FRA course, students receiving fully online assessment also had significantly higher course scores than those receiving hybrid assessment (t = 5.47, p < .05), with an average grade of 84.74 versus 75.09. Overall, students who received fully online assessment activities tended to achieve grades well above those of students who received a hybrid assessment (see Table 2).
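As a rough illustration of how an independent-samples t statistic follows from group means, standard deviations, and sizes, the Python sketch below applies the standard formulas to the two delivery-method rows of Table 1. Per-course group sizes are not listed in this paper, so the sketch uses the pooled two-course figures; its result is therefore not one of the per-course t values reported here. The function name `t_from_summary` is an assumption made for this example, and the original analysis was run in SPSS rather than with this code.

```python
import math

def t_from_summary(m1, s1, n1, m2, s2, n2, equal_var=True):
    """Return (t, df) for an independent two-sample t-test from summary statistics."""
    if equal_var:
        # Student's t-test: pool the variances, weighted by degrees of freedom.
        df = n1 + n2 - 2
        pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df
        se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    else:
        # Welch's t-test: no equal-variance assumption (Welch-Satterthwaite df).
        v1, v2 = s1**2 / n1, s2**2 / n2
        se = math.sqrt(v1 + v2)
        df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return (m1 - m2) / se, df

# (mean, SD, N) taken from the "Type of assessment" rows of Table 1.
online = (81.81, 10.87, 224)
hybrid = (73.80, 14.31, 249)

t_pooled, df_pooled = t_from_summary(*online, *hybrid, equal_var=True)
t_welch, df_welch = t_from_summary(*online, *hybrid, equal_var=False)
print(f"pooled-variance t = {t_pooled:.2f}, df = {df_pooled}")
print(f"Welch t = {t_welch:.2f}, df = {df_welch:.1f}")
```

Because the two groups have visibly different SDs, the Welch variant is shown alongside the pooled-variance version; which of the two SPSS outputs the reported values correspond to is not stated in the text.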

Table 2 also shows the effect size corresponding to each t value. Cohen (1992, p. 157) provided rough guidelines for effect size, wherein values of 0.20 represent small effects, 0.50 medium effects, and 0.80 large effects. The effect size fell in the small category for both comparisons (Cohen’s d = .081 and .115, respectively; Cohen, 1992).

TABLE 2. T-test results and the effect size results.

| Course | Fully online assessment (M ± SD) | Hybrid assessment (M ± SD) | t value | p value | Diff. (online - hybrid)* | Cohen’s d | Effect size |
|---|---|---|---|---|---|---|---|
| Internal Audit | 79.73 ± 10.24 | 72.57 ± 13.82 | 4.72 | <.001 | Sig. diff. | .081 | Small |
| Financial Reports Analysis | 84.74 ± 11.11 | 75.09 ± 14.75 | 5.47 | <.001 | Sig. diff. | .115 | Small |

* Differences between groups are significant at a 5% level (p<0.05)
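For reference, Cohen’s d is conventionally defined as the difference between two group means divided by a pooled standard deviation. The Python sketch below applies that definition to the means and SDs listed in Table 2 and labels the result with Cohen’s (1992) rough benchmarks. Per-course group sizes are not reported, so the sketch assumes equal group sizes; the helper names `cohens_d_equal_n` and `label_d` are likewise assumptions made for this example. Its output is illustrative only and is not expected to reproduce the effect-size column of Table 2, whose computation is not described in the text.

```python
import math

def cohens_d_equal_n(m1, s1, m2, s2):
    """Cohen's d with the root-mean-square of the two SDs as the pooled SD.

    ASSUMPTION: equal group sizes, because per-course Ns are not reported.
    """
    pooled_sd = math.sqrt((s1**2 + s2**2) / 2)
    return (m1 - m2) / pooled_sd

def label_d(d):
    """Label |d| using Cohen's (1992) benchmarks of 0.20, 0.50, and 0.80."""
    size = abs(d)
    if size >= 0.80:
        return "large"
    if size >= 0.50:
        return "medium"
    if size >= 0.20:
        return "small"
    return "below small"

# (online mean, online SD, hybrid mean, hybrid SD) from Table 2.
courses = {
    "Internal Audit": (79.73, 10.24, 72.57, 13.82),
    "Financial Reports Analysis": (84.74, 11.11, 75.09, 14.75),
}
results = {name: cohens_d_equal_n(*stats) for name, stats in courses.items()}
for name, d in results.items():
    print(f"{name}: d = {d:.2f} ({label_d(d)})")
```

With the actual group sizes, the pooled SD would weight each variance by its degrees of freedom, so the values could shift somewhat.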

Table 3 displays the chi-square results for the grade distribution according to the assessment delivery method. The chi-square tests indicated statistically significant differences in students’ grades between the two modalities in each course: χ2 (41, N = 258) = 67.726, p = .005 for the IA course and χ2 (51, N = 215) = 107.364, p < .001 for the FRA course. Both p-values were smaller than the significance level of 0.05. We therefore accepted the alternative hypothesis.

TABLE 3. Chi-square results.

| Course | Chi-square value | df | p value* | Diff. (online - hybrid) |
|---|---|---|---|---|
| Internal Audit | 67.726 | 41 | .005 | Sig. diff. |
| Financial Reports Analysis | 107.364 | 51 | <.001 | Sig. diff. |

*Significant difference at 0.05 level
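To make the chi-square test of independence concrete, the Python sketch below computes the Pearson statistic and its degrees of freedom, df = (rows - 1) × (columns - 1), for a small contingency table of delivery method by grade band. The counts are hypothetical and are not the study’s data; the grade bands, the function name `chi_square_independence`, and the constant name are assumptions made for this example. The larger df values in Table 3 suggest that the original analysis cross-tabulated many more grade categories than the five used here.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and df for an r x c table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df

# HYPOTHETICAL counts, not the study's data.
# Rows: delivery method; columns: five grade bands from highest to lowest.
counts = [
    [40, 35, 15, 7, 3],    # fully online
    [20, 30, 25, 15, 10],  # hybrid
]
stat, df = chi_square_independence(counts)

# Chi-square critical value for alpha = 0.05 with 4 degrees of freedom.
CRITICAL_05_DF4 = 9.488
significant = stat > CRITICAL_05_DF4
print(f"chi-square = {stat:.3f}, df = {df}, significant at 0.05: {significant}")
```

Comparing the statistic with a tabulated critical value keeps the sketch dependency-free; a statistics package would report the exact p-value instead.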

4.2. The effect of gender on student’s grade

Second, a two-way ANOVA was conducted to investigate whether the effect of the assessment delivery method (online/hybrid assessment) on students’ grades differs by gender (male/female). The ANOVA showed a significant difference in the effect of the assessment delivery method on students’ grades by gender (see Table 4). The difference was significantly higher among male students in the Financial Reports Analysis course (F = 44.35, p < .001). Table 4 also shows the effect size (η2) corresponding to each F value. Cohen (1992, p. 157) provided rough guidelines for this effect size, where values of 0.01 represent small effects, 0.06 medium effects, and 0.14 large effects. The effect sizes fell in the medium and large categories: η2 = 0.073 for the IA course (p < .001), indicating a medium effect, and η2 = 0.172 for the FRA course (p < .001), indicating a large effect.

TABLE 4. ANOVA and eta-squared results: final grade by assessment delivery method and gender.

| Course | Gender | Fully online assessment (M ± SD) | Hybrid assessment (M ± SD) | F value | p value* | Result | η2 | Effect size |
|---|---|---|---|---|---|---|---|---|
| Internal Audit | Male | 77.553 ± 9.77 | 70.75 ± 14.09 | 20.197 | <.001 | Sig. diff. | .073 | Medium |
| | Female | 87.714 ± 7.75 | 77.76 ± 11.74 | | | | | |
| Financial Reports Analysis | Male | 82.582 ± 13.37 | 67.44 ± 5.39 | 44.35 | <.001 | Sig. diff. | .172 | Large |
| | Female | 87.868 ± 13.61 | 84.72 ± 9.64 | | | | | |

*Statistically significant at p < 0.05
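The effect-size labels used above can be traced with a short Python sketch. Eta squared is the share of total variance attributable to an effect (the between-group sum of squares divided by the total sum of squares), and the thresholds 0.01, 0.06, and 0.14 are the guidelines quoted in the text. The function names and the small score lists are assumptions for illustration; the score lists are hypothetical and are not the study’s data. The one-way calculation shown is a simplification of the two-way design reported in Table 4.

```python
def eta_squared(groups):
    """Eta squared = SS_between / SS_total for a list of groups of scores."""
    all_scores = [x for group in groups for x in group]
    grand_mean = sum(all_scores) / len(all_scores)
    ss_total = sum((x - grand_mean) ** 2 for x in all_scores)
    ss_between = sum(
        len(group) * (sum(group) / len(group) - grand_mean) ** 2
        for group in groups
    )
    return ss_between / ss_total

def label_eta_squared(eta2):
    """Label eta squared with the 0.01 / 0.06 / 0.14 guidelines quoted in the text."""
    if eta2 >= 0.14:
        return "large"
    if eta2 >= 0.06:
        return "medium"
    if eta2 >= 0.01:
        return "small"
    return "below small"

# Values reported in Table 4.
ia_label = label_eta_squared(0.073)
fra_label = label_eta_squared(0.172)
print(f"IA course: {ia_label}; FRA course: {fra_label}")

# HYPOTHETICAL scores for two groups, only to show the calculation.
demo = eta_squared([[80, 85, 90], [70, 75, 80]])
print(f"demo eta squared = {demo:.2f}")
```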

5. DISCUSSION

This study aimed to investigate whether the type of assessment method delivery has an effect on students’ academic achievement. In this study, the final course grades on two accounting courses were used to identify the impact of the difference in the delivery of assessment methods on academic achievement in an e-learning environment (an LMS in the form of Blackboard) during the COVID-19 Pandemic period.

Results indicate a significant difference between the average grade on online assessments and the average grade on hybrid assessments. Students’ final course scores under fully online assessment (comprising a final online examination worth 20% and synchronous or asynchronous activities throughout the course worth 80%) were higher than those under hybrid assessment (consisting of pen-and-paper mid-term and final exams worth 80% and synchronous or asynchronous activities throughout the course worth 20%). This finding is consistent with previous studies demonstrating that students receive higher grades through online assessment activities in an e-learning environment than through any other form of assessment, whether face-to-face or traditional pen-and-paper assessment (Da Silva et al., 2015; Gomaa et al., 2021; Hew et al., 2020; Soffer, & Nachmias, 2018). In most cases, online assessments have been considered effective in e-learning environments in developed countries, where internet services, assessment tools, and proctoring functions are highly sophisticated (White, 2021).

Due to the closure of educational institutions, universities faced uncertainty about conducting online assessments in an uncontrolled environment during the COVID-19 period, especially in the first year of the pandemic, and this was reflected in students’ grades in the form of high success rates. Noroozi et al. (2020) showed that all students benefited from online assignments during this period, which fostered their learning outcomes. There were discussions on the importance of changing the assessment delivery approach in the second year of the pandemic by adopting a mix of online and pen-and-paper assessment activities, in pursuit of a more objective assessment. The finding in the present study has shown the positive effect of the hybrid assessment delivery method on student achievement outcomes. Further, the students scored significantly lower than expected in the online assessment activities. The study by Ladyshewsky (2015) suggests that unsupervised online assessment can be a viable tool for assessing students’ knowledge, provided that it meets best practice standards for online assessment; in that study, concerns about increased cheating in unsupervised online testing were not supported. This change may require Saudi universities to invest substantial technological resources to improve the quality of assessment processes.

On the other hand, the findings in the present study have shown the effect of the online assessment delivery method on increasing student achievement outcomes. With online assessment, students are more comfortable and less stressed, whereas on-campus assessment puts them under pressure, and this may be one differentiator between the two assessment methods. This effect may also be mainly due to the variety of asynchronous and/or synchronous assessment components that contributed to course grades in the summer semester: student achievement outcomes were based not only on exams and quizzes but also on scores awarded for effort and participation, for completing assigned tasks on time (for example, case studies, presentations, discussion forums, and other take-home assignments), and for engaging with the subjects in synchronous online learning. Ward and Lindshield (2020) observed a positive correlation between performance on synchronous assessment tools and final grades; students who did better on this type of assessment did better in the course. Further, synchronous and asynchronous online learning environments play a positive role in achieving better final grades in an online accounting course (Duncan et al., 2012).

Concerning the effect of gender, the results showed a significant difference in the effect of the assessment delivery method on students’ grades by gender, with female students scoring considerably better than male students. Because the assessment delivery methods were the same for male and female students, the reasons for this difference need to be identified in future work. The results are generally in line with the studies of gender differences in student achievement outcomes in online learning by Idrizi et al. (2021) and Hsiao (2021), in which female students scored considerably higher. This difference may be due to female students relying more on course subjects to learn autonomously than male students (Harvey et al., 2017); female students are more inclined to self-learning, which yields better academic achievement (Moore et al., 2011), and they are often more engaged in the e-learning environment than male students and thus achieve better final grades (Cuadrado-García et al., 2010). Pasion et al. (2021) indicated that the COVID-19 pandemic had a different impact on females and males in terms of engagement. Results of the study by Eltahir et al. (2022) confirmed that female students showed greater acceptance of the implementation of online assessment during the spread of the COVID-19 pandemic than male students. Furthermore, female students have generally been more successful in tertiary education than their male counterparts worldwide, especially in recent decades (Verbree et al., 2022).

Online assessment techniques were an indispensable solution for educational institutions during the COVID-19 pandemic. A closer look at the findings of this research suggests that adopting a hybrid assessment approach would help provide a unique educational environment. Moreover, this approach of varied assessment proved to be more effective than the use of online assessment alone. It is expected that this study will contribute evidence to support the use of both online and face-to-face assessment approaches in online learning to determine student abilities in accounting courses and maintain academic integrity.

6. CONCLUSIONS

The sudden shift to distance education was an interesting experience, providing delivery and assessment experiences distinct from planned online assessment. Online assessment shows promise for reproducing traditional assessment through e-tools. Technology and online learning systems enable online assessment tools to be used more extensively to support traditional assessment delivery methods such as pen-and-paper tasks and written homework assignments, especially formative assessment tasks. Additionally, these tools can provide a variety of online assessment practices to evaluate student performance (formative assessment) (Al-Maqbali & Al-Shamsi, 2023; Thathsarani et al., 2023).

In this study, online assessments (such as discussion forums, quizzes, and take-home assignments) together with traditional pen-and-paper assessments have been referred to as a hybrid assessment approach. By contrast, a purely online assessment approach is not as effective a delivery option as one that also uses face-to-face assessment. This study concludes that a hybrid assessment delivery approach may be efficient, even as universities return to regular classes. Therefore, this research recommends a hybrid assessment approach, which would help provide a unique educational environment; such varied assessment can enable lecturers to determine student abilities in accounting courses and maintain academic integrity. The type of assessment can influence ethical considerations, particularly in accounting, where honesty and accuracy are crucial. Encouraging ethical behavior in assessments can have a positive impact on the culture of honesty and integrity within the academic community, setting the foundation for responsible professional practices in the future.

A shift towards a combination of online and traditional assessment (hybrid assessment) may present a solution with many benefits, as it provides a gradual transition toward technology-enabled assessment. Perhaps the greatest benefit provided by online forms of assessment (compared with traditional pen-and-paper modes of delivery) is the saving of lecturers’ time through automated delivery, especially for mid-term summative assessments, which shifts the focus to learning and teaching activities in class. This matters all the more because tertiary education in Saudi Arabia is moving to a three-semester system instead of two semesters, with each semester consisting of 13 weeks instead of 17. A further benefit is enhanced validity, because automating the marking process can reduce the scope for human error. These findings are therefore considered useful for expanding knowledge of the assessments that best enrich the learning experience and enhance learning effectiveness when strongly supported by e-tools, especially in subjects requiring high analytical and numerical capacity, such as accounting.

Although this research offers valuable findings regarding the effect of the assessment delivery method on learning outcomes in online class environments at the tertiary level during the COVID-19 pandemic, it has certain limitations. First, the findings are limited to two semesters at one university in Saudi Arabia. Second, the findings are specific to the context in which this study was conducted. Hence, generalizing the results to broader contexts may not be appropriate. Future research can build on this study by measuring which assessment activities affect academic achievement in tertiary education.

7. ACKNOWLEDGMENTS

The author would like to thank the Deanship of Scientific Research at Majmaah University for supporting this work under Project Number R-2023-8588.

8. REFERENCES

Aisbitt, S., & Sangster, A. (2005). Using internet-based on-line assessment: A case study. Accounting Education, 14(4), 383-394. https://doi.org/10.1080/06939280500346011

Al-Maqbali, A. H., & Al-Shamsi, A. (2023). Assessment Strategies in Online Learning Environments During the COVID-19 Pandemic in Oman. Journal of University Teaching & Learning Practice, 20(5), 1-21. https://doi.org/10.53761/1.20.5.08

Almossa, S. Y. (2021). University students’ perspectives toward learning and assessment during COVID-19. Education and Information Technologies, 26(6), 7163-7181. https://doi.org/10.1007/s10639-021-10554-8

Almossa, S.Y., & Alzahrani, S.M. (2022). Assessment practices in Saudi higher education during the COVID-19 pandemic. Humanities and Social Sciences Communications, 9(5), 1-9. https://doi.org/10.1057/s41599-021-01025-z

Astleitner, H., & Steinberg, R. (2005). Are there gender differences in web-based learning? An integrated model and related effect sizes. AACE Journal, 13(1), 47-63.

Bautista Flores, E., Quintana, N. L., & Sánchez Carlos, O. A. (2022). Distance education with university students quarantined by COVID-19. Innoeduca. International Journal of Technology and Educational Innovation, 8(2), 5-13. https://doi.org/10.24310/innoeduca.2022.v8i2.12257

Bilen, E., & Matros, A. (2021). Online cheating amid COVID-19. Journal of Economic Behavior and Organization, 182, 196-211. https://doi.org/10.1016/j.jebo.2020.12.004

Brallier, A. S., Schwanz, A. K., Palm, J. L., & Irwin, N. L. (2015). Online Testing: Comparison of Online and Classroom Exams in an Upper-Level Psychology Course. American Journal of Educational Research, 3(2), 255-258. https://doi.org/10.12691/education-3-2-20

Bunn, E., Fischer, M., & Marsh, T. (2014). Does the Classroom Delivery Method Make a Difference? American Journal of Business Education, 7(2), 143-150.

Cater, J. J., Michel, N., & Varela, O. E. (2012). Challenges of Online Learning in Management Education: An Empirical Study. Journal of Applied Management & Entrepreneurship, 17(4), 76-96.

Chen, I. Y. L., Yang, A. C. M., Flanagan, B., & Ogata, H. (2022). How students’ self-assessment behavior affects their online learning performance. Computers and Education: Artificial Intelligence, 3, e100058. https://doi.org/10.1016/j.caeai.2022.100058

Cohen, J. (1992). Statistical Power Analysis. Current Directions in Psychological Science, 1(3), 98-101. https://doi.org/10.1111/1467-8721.ep10768783

Cuadrado-García, M., Ruiz-Molina, M.-E., & Montoro-Pons, J. D. (2010). Are there gender differences in e-learning use and assessment? Evidence from an interuniversity online project in Europe. Procedia - Social and Behavioral Sciences, 2(2), 367-371. https://doi.org/10.1016/j.sbspro.2010.03.027

Da Silva, D. M., Leal, E. A., Pereira, J. M., & de Oliveira Neto, J. D. (2015). Learning styles and academic performance in Distance Education: a research in specialization courses. Revista Brasileira de Gestão de Negócios, 17(57), 1300-1316. https://doi.org/10.7819/rbgn.v17i57.1852

Duncan, K., Kenworthy, A., & McNamara, R. (2012). The Effect of Synchronous and Asynchronous Participation on Students’ Performance in Online Accounting Courses. Accounting Education, 21(4), 431-449. https://doi.org/10.1080/09639284.2012.673387

Eltahir, M. E., Alsalhi, N. R., & Al-Qatawneh, S. S. (2022). Implementation of E-exams during the COVID-19 pandemic: A quantitative study in higher education. PLoS ONE, 17(5), 1-20. https://doi.org/10.1371/journal.pone.0266940

Eurboonyanun, C., Wittayapairoch, J., Aphinives, P., Petrusa, E., Gee, D. W., & Phitayakorn, R. (2020). Adaptation to Open-Book Online Examination During the COVID-19 Pandemic. Journal of Surgical Education, 78(3), 737-739. https://doi.org/10.1016/j.jsurg.2020.08.046

Gamage, K. A. A., Silva, E. K. de, & Gunawardhana, N. (2020). Online Delivery and Assessment during COVID-19: Safeguarding Academic Integrity. Education Sciences, 10(11), e301. https://doi.org/10.3390/educsci10110301

Glazier, R. A., Hamann, K., Pollock, P. H., & Wilson, B. M. (2020). Age, Gender, and Student Success: Mixing Face-to-Face and Online Courses in Political Science. Journal of Political Science Education, 16(2), 142-157. https://doi.org/10.1080/15512169.2018.1515636

Gomaa, M., Kang, F., & Pak, H. (2021). Student Performance in Online Accounting Tests: In-class vs. Take-home. Pan-Pacific Journal of Business Research, 12(1), 49-60.

Guangul, F. M., Suhail, A. H., Khalit, M. I., & Khidhir, B. A. (2020). Challenges of remote assessment in higher education in the context of COVID-19: a case study of Middle East College. Educational Assessment, Evaluation and Accountability: International Journal of Policy, Practice and Research, 32(4), 519. https://doi.org/10.1007/s11092-020-09340-w

Hafeez, M., Ajmal, F., & Zulfiqar, Z. (2022). Assessment of Students’ Academic Achievements in Online and Face-to-Face Learning in Higher Education. Journal of Technology and Science Education, 12(1), 259-273. https://doi.org/10.3926/jotse.1326

Haidar, F. T. (2022). Accounting Students’ Perceptions on A Role of Distance Education in Their Soft Skills Development. Journal of Organizational Behavior Research, 7(2), 188-202. https://doi.org/10.51847/8dK1WcPfHd

Harvey, H. L., Parahoo, S., & Santally, M. (2017). Should Gender Differences be Considered When Assessing Student Satisfaction in the Online Learning Environment for Millennials? Higher Education Quarterly, 71(2), 141-158. https://doi.org/10.1111/hequ.12116

Helfaya, A. (2019). Assessing the use of computer-based assessment-feedback in teaching digital accountants. Accounting Education, 28(1), 69-99. https://doi.org/10.1080/09639284.2018.1501716

Hew, K. F., Jia, C., Gonda, D. E., & Bai, S. (2020). Transitioning to the “new normal” of learning in unpredictable times: pedagogical practices and learning performance in fully online flipped classrooms. International Journal of Educational Technology in Higher Education, 17(1). https://doi.org/10.1186/s41239-020-00234-x

Hewson, C. (2012). Can online course-based assessment methods be fair and equitable? Relationships between students’ preferences and performance within online and offline assessments. Journal of Computer Assisted Learning, 28(5), 488-498. https://doi.org/10.1111/j.1365-2729.2011.00473.x

Hsiao, Y. C. (2021). Impacts of course type and student gender on distance learning performance: A case study in Taiwan. Education and Information Technologies, 26, 6807-6822. https://doi.org/10.1007/s10639-021-10538-8

Idrizi, E., Filiposka, S., & Trajkovik, V. (2021). Analysis of Success Indicators in Online Learning. International Review of Research in Open and Distributed Learning, 22(2), 205-223. https://doi.org/10.19173/irrodl.v22i2.5243

James, S., Swan, K., & Daston, C. (2016). Retention, Progression and the Taking of Online Courses. Online Learning, 20(2), 75-96. https://doi.org/10.24059/olj.v20i2.780

Jimaa, S. (2011). The impact of assessment on students learning. Procedia - Social and Behavioral Sciences, 28, 718-721. https://doi.org/10.1016/j.sbspro.2011.11.133

Ladyshewsky, R. K. (2015). Post-graduate student performance in ‘supervised in-class’ vs. ‘unsupervised online’ multiple choice tests: implications for cheating and test security. Assessment and Evaluation in Higher Education, 40(7), 883-897. https://doi.org/10.1080/02602938.2014.956683

Marriott, P. (2009). Students’ Evaluation of the Use of Online Summative Assessment on an Undergraduate Financial Accounting Module. British Journal of Educational Technology, 40(2), 237-254. https://doi.org/10.1111/j.1467-8535.2008.00924.x

Moore, J. L., Dickson-Deane, C., & Galyen, K. (2011). e-Learning, online learning, and distance learning environments: Are they the same? The Internet and Higher Education, 14(2), 129-135. https://doi.org/10.1016/j.iheduc.2010.10.001

Newton, D. (2020). Another problem with shifting education online: A rise in cheating. The Washington Post. https://www.washingtonpost.com/local/education/another-problem-with-shifting-education-online-a-rise-in-cheating/2020/08/07/1284c9f6-d762-11ea-aff6-220dd3a14741_story.html

Noroozi, O., Hatami, J., Bayat, A., van Ginkel, S., Biemans, H. J. A., & Mulder, M. (2020). Students’ Online Argumentative Peer Feedback, Essay Writing, and Content Learning: Does Gender Matter? Interactive Learning Environments, 28(6), 698-712. https://doi.org/10.1080/10494820.2018.1543200

Oguguo, B., Ezechukwu, R., Nannim, F., & Offor, K. (2023). Analysis of teachers in the use of digital resources in online teaching and assessment in COVID times. Innoeduca. International Journal of Technology and Educational Innovation, 9(1), 81-96. https://doi.org/10.24310/innoeduca.2023.v9i1.15419

Pasion, R., Dias-Oliveira, E., Camacho, A., Morais, C., & Campos Franco, R. (2021). Impact of COVID-19 on undergraduate business students: a longitudinal study on academic motivation, engagement and attachment to university. Accounting Research Journal, 34(2), 246-257. https://doi.org/10.1108/ARJ-09-2020-0286

Paz Saavedra, L. E., Gisbert Cervera, M., & Usart Rodríguez, M. (2022). Teaching digital competence, attitude and use of digital technologies by university professors. Pixel-Bit. Revista de Medios y Educación, (63), 93-130. https://doi.org/10.12795/pixelbit.91652

Perera, L., & Richardson, P. (2010). Students’ Use of Online Academic Resources within a Course Web Site and Its Relationship with Their Course Performance: An Exploratory Study. Accounting Education, 19(6), 587-600. https://doi.org/10.1080/09639284.2010.529639

Pierce, R. J. (2011). Web-based Assessment Settings and Student Achievement. Journal of Applied Learning Technology, 1(4), 28-31.

Rogerson-Revell, P. (2015). Constructively aligning technologies with learning and assessment in a distance education master’s programme. Distance Education, 36(1), 129-147. https://doi.org/10.1080/01587919.2015.1019972

Rovai, A. P. (2000). Online and traditional assessments: what is the difference? The Internet and Higher Education, 3(3), 141-151. https://doi.org/10.1016/S1096-7516(01)00028-8

Schinske, J., & Tanner, K. (2014). Teaching More by Grading Less (or Differently). CBE Life Sciences Education, 13(2), 159-166. https://doi.org/10.1187/cbe.CBE-14-03-0054

Sharadgah, T. A., & Sa’di, R. A. (2020). Preparedness of Institutions of Higher Education for Assessment in Virtual Learning Environments during the COVID-19 Lockdown: Evidence of Bona Fide Challenges and Pragmatic Solutions. Journal of Information Technology Education: Research, 19, 755-774. https://doi.org/10.28945/4615

Soffer, T., & Nachmias, R. (2018). Effectiveness of Learning in Online Academic Courses Compared with Face-to-Face Courses in Higher Education. Journal of Computer Assisted Learning, 34(5), 534-543. https://doi.org/10.1111/jcal.12258

Thathsarani, H., Ariyananda, D. K., Jayakody, C., Manoharan, K., Munasinghe, A. A. S. N., & Rathnayake, N. (2023). How successful the online assessment techniques in distance learning have been, in contributing to academic achievements of management undergraduates? Education and Information Technologies, 28, 14091-14115. https://doi.org/10.1007/s10639-023-11715-7

Tucker, S. Y. (2000, April 24-28). Assessing the Effectiveness of Distance Education versus Traditional On-Campus Education. Annual Meeting of the American Educational Research Association. New Orleans, USA.

Verbree, A.-R., Hornstra, L., Maas, L., & Wijngaards-de Meij, L. (2023). Conscientiousness as a Predictor of the Gender Gap in Academic Achievement. Research in Higher Education, 64(3), 451-472. https://doi.org/10.1007/s11162-022-09716-5

Voyer, D., & Voyer, S. D. (2014). Gender Differences in Scholastic Achievement: A Meta-Analysis. Psychological Bulletin, 140(4), 1174-1204. https://doi.org/10.1037/a0036620

Ward, E. J., & Lindshield, B. L. (2020). Performance, behaviour and perceptions of an open educational resource-derived interactive educational resource by online and campus university students. Research in Learning Technology, 28. https://doi.org/10.25304/rlt.v28.2386

White, A. (2021). May you live in interesting times: a reflection on academic integrity and accounting assessment during COVID19 and online learning? Accounting Research Journal, 34(3), 304-312. https://doi.org/10.1108/ARJ-09-2020-0317

Workman, J., & Heyder, A. (2020). Gender achievement gaps: the role of social costs to trying hard in high school. Social Psychology of Education, 23, 1407-1427. https://doi.org/10.1007/s11218-020-09588-6

Yates, R. W., & Beaudrie, B. (2009). The Impact of Online Assessment on Grades in Community College Distance Education Mathematics Courses. American Journal of Distance Education, 23(2), 62-70. https://doi.org/10.1080/08923640902850601