International Journal of Technology and Educational Innovation

Vol. 10. No. 1. June 2024 - pp. 5-28 - ISSN: 2444-2925

DOI: https://doi.org/10.24310/ijtei.101.2024.16820

Preparing instructors to transition to online distance learning: A pandemic panacea?

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

1. INTRODUCTION

The COVID-19 pandemic has had a significant impact on the educational field (Tiwari et al., 2020), forcing institutions and educators to rapidly adopt online distance learning as a contingency measure (Abou-Khalil et al., 2021; Lassoued et al., 2020; Sabet et al., 2022; Sidpra et al., 2020). However, the transition to online learning poses challenges for students and instructors, especially in hospitality and tourism. Hospitality and tourism courses traditionally rely heavily on hands-on experiences, such as culinary labs, hotel operations, travel planning, and customer service simulations. The sudden shift to online distance platforms disrupted this traditional, practical, hands-on approach to learning (Munoz et al., 2021). As a result, it is difficult to acquire the knowledge needed for online teaching in a short period while maintaining the structural integrity of the courses taught, especially practical classes (Dumford, & Miller, 2018; Eseadi, 2023; Prause et al., 2019). Consequently, there is a critical need to examine instructor preparedness and readiness for online distance learning during this unprecedented crisis.

This study advances a fundamental argument: understanding instructors’ online teaching preparation and their preferred class type is pivotal to assessing the success of online distance learning (ODL) in hospitality and tourism courses. Furthermore, examining instructors’ specific needs and concerns can provide valuable insights for designing effective support and training initiatives to enhance online teaching in this specialized domain. Given that hospitality and tourism courses rely heavily on practical, experiential learning, it is crucial to understand the specific needs and challenges instructors face in adapting their teaching approaches to an online format. The urgency and limited preparation time during the initial stages of the COVID-19 pandemic further highlight the importance of investigating instructors’ readiness, attitudes, and strategies in online teaching.

In this context, this study is important for several reasons. First, instructors play a pivotal role in shaping the online learning experience. Their familiarity with online tools, adaptability to new teaching methods, and overall attitude towards online education can greatly influence the effectiveness of the transition, so investigating instructor preparedness and attitudes towards online teaching is essential. Second, well-prepared instructors with a positive attitude towards online teaching are more likely to design engaging and effective online courses, which in turn influences student engagement, learning outcomes, and overall satisfaction. Understanding the factors that contribute to the successful adoption of ODL is therefore critical. Third, comparing practical and theoretical classes is crucial to understanding the ODL phenomenon. Because of their hands-on nature, practical classes present unique challenges in an online format. Hence, exploring how instructor readiness and attitudes interact with class type can provide insights into effective strategies for delivering practical components remotely. As such, the outcomes of this research can inform the design of future hospitality and tourism curricula.

This study’s objectives are twofold. First, it examines the inter-relationships between online teaching readiness, adoption, attitude, and behavioural intention among hospitality and tourism instructors. Second, it tests whether class type (practical or theoretical) moderates the inter-relationships between online teaching readiness, adoption, and behavioural intention. The outcomes of this study can deepen understanding of ODL in hospitality and tourism. By shedding light on instructors’ readiness, attitudes, and strategies in the context of ODL, the study enriches the discourse on effective pedagogical approaches in these fields. The findings also highlight the potential to create resilient and adaptable educational programs that equip future learners to thrive in the face of uncertainty.

2. LITERATURE REVIEW

2.1. Instructor technology readiness

Instructor online teaching readiness refers to how well prepared and suited instructors are to engage effectively with online education and to design and deliver online courses. It encompasses technical skills, familiarity with online teaching tools, and the pedagogical knowledge required to create interactive and engaging learning experiences (Brinkley-Etzkorn, 2018; McGee et al., 2017). Since instructors are responsible for course design and delivery, their technological readiness is crucial in establishing a supportive and engaging online learning environment (Alea et al., 2020; Cutri et al., 2020; Tiwari et al., 2020). Past studies have highlighted the importance of instructor readiness for effective instructional delivery (Eseadi, 2023; Hung, 2016; Junus, 2021; Tang et al., 2021; Wei, & Chou, 2020). Moreover, the level of instructor readiness greatly influences the quality of the online learning experience (Cutri et al., 2020; Tiwari et al., 2020).

Online learning environments introduce unique challenges and demands for instructors. Technical readiness, which encompasses an instructor’s proficiency with online tools and technologies, is crucial for the effective delivery of online courses (Jung, & Lee, 2020). Lifestyle readiness, on the other hand, refers to an instructor’s personal suitability for remote work and flexibility, and has become particularly relevant when teaching online (König et al., 2020). In addition, pedagogical readiness, which involves an instructor’s familiarity with effective online teaching methods, is essential for designing engaging and interactive online learning experiences (Selvaraj et al., 2021). Integrating these readiness factors acknowledges the contextual nuances of online teaching.

The level of online teaching readiness can be evaluated using three scales: technical readiness, lifestyle readiness, and pedagogical readiness (Boettcher, & Conrad, 2021; First, & Bozkurt, 2020). Technical readiness assesses the instructor’s perception of technology integration to provide an optimal online learning and teaching environment (Phan, & Dang, 2017). Lifestyle readiness assesses the instructor’s online teaching environment, including self-managed learning and time management skills (First, & Bozkurt, 2020). Pedagogical readiness evaluates instructors’ online teaching experience, confidence levels, and attitudes toward the online teaching environment (Alea et al., 2020).

Many studies have evaluated instructor readiness for online teaching based on technology skills, lifestyle, and pedagogical training (Brinkley-Etzkorn, 2018; McGee et al., 2017; Oguguo et al., 2023). Oguguo et al. (2023) and König et al. (2020) empirically validated that instructors’ competence and opportunities to acquire digital competence are significant factors in adopting online teaching. However, recent studies have reported inconsistent findings on the impact of readiness on the use and application of online teaching in developing nations (Ayodele et al., 2018; Owen et al., 2020). This is pertinent because developing nations have had more limited exposure to technology than developed countries. Hence, further research is required to understand how these readiness factors contribute to instructors’ attitudes toward adopting online teaching and to the challenges they face with it.

2.2. Attitudes towards online learning

Instructor attitude is one of the key factors in the use of online teaching in any educational institution. Kaplan (1972) defined attitude as a tendency to respond to an event favourably or unfavourably. Meanwhile, Semerci and Aydin (2018) described attitude as an element that guides an individual’s behaviour in line with their feelings and thoughts. Triandis (1971) further stated that attitudes consist of three components: affective, cognitive, and behavioural. The affective component comprises statements of likes and dislikes about certain objects (Stangor et al., 2014). The cognitive component refers to an instructor’s statements that provide the rationale for the value of an object, while the behavioural component describes what an instructor does or intends to do (Koet, & Abdul Aziz, 2021). Together, these three components form an instructor’s general attitude toward online teaching.

Many studies on online teaching acceptance have shown that attitude significantly predicts behavioural intention to use online teaching. Instructors who received continued training and support were highly optimistic about online teaching (Karen, & Etzkorn, 2020). However, Guðmundsdóttir and Hathaway (2020) found that although most instructors had adequate experience teaching online before the COVID-19 pandemic, they had to operate in “triage mode” during its early stage, with limited time to prepare, learn, and build online course content. Such urgency is likely to affect attitudes, especially among instructors who had never taught (or even learned) online (Davis et al., 2019; Iyer, & Chapman, 2021). These instructors may have faced greater challenges and uncertainties, which could have shaped their attitudes towards, perceptions of, and approaches to online teaching. Therefore, a better understanding of the nature of instructors’ attitudes is needed to explain their behavioural intention in online teaching.

2.3. Online learning behavioural intention

Mailizar et al. (2020) defined behavioural intention in online teaching as the behavioural tendency and willingness to conduct classes online; it therefore determines acceptance of the technology. Notably, instructors’ behavioural intentions are influenced by their attitudes in both online and face-to-face classes (Mokhtar et al., 2018). In addition, Chao (2019) found that behavioural intention to adopt new technology was related to instructors’ satisfaction, trust, performance expectancy, and effort expectancy regarding the use of technology in online teaching. Instructors’ behaviour can also differ across class types, such as face-to-face and online teaching (Maheshwari, 2021).

The main difference between face-to-face and online classes lies in the experience and pattern of engagement between instructors and students. Face-to-face classes involve many activities, practicals, and lectures (Kemp, & Grieve, 2014). Online classes, in contrast, demand a significant shift in communication style, summative assessment, and subject delivery between lecturers and students (Junus et al., 2021; Oguguo et al., 2023), and they require students to be more self-directed in their studies, with less direction from lecturers. Hence, transitioning from face-to-face to online classes often poses many challenges.

From the perspective of hospitality and tourism education, the transition from face-to-face to online classes brings about significant differences in the experience and pattern of engagement between instructors and students. Face-to-face classes typically involve various activities, practicals, and lectures that facilitate hands-on learning experiences. Online classes, in contrast, require a shift in communication style, assessment methods, and subject delivery, placing more responsibility on students for self-directed learning. This transition presents challenges for instructors and students alike.

2.4. Hypotheses development

This study combines the Unified Theory of Acceptance and Use of Technology (UTAUT) model of Venkatesh et al. (2003) with technology readiness attributes as proposed by Dwivedi et al. (2019). Effort expectancy (EE), performance expectancy (PE), social influence (SI), facilitating conditions (FC), behavioural intention (BI) to adopt the online learning system, and usage behaviour are the six fundamental constructs of the UTAUT model. Several educational and pedagogical studies have adopted and extended the UTAUT model to examine technology adoption behaviour (Akinnuwesi et al., 2022; Chao, 2019; Dwivedi et al., 2017; Nikou, & Economides, 2019; Mei et al., 2018). However, few studies have integrated the UTAUT model with technology readiness attributes, leaving the impact of different class types on the operationalisation of ODL poorly understood.

The UTAUT framework primarily focuses on end users’ acceptance and use of technology. However, in an online learning context, instructors are active users and facilitators of technology. By integrating instructor readiness factors, the framework can provide a more holistic understanding of the technology adoption process, accounting for the unique perspective of educators (Al-Fraihat et al., 2020; Cutri et al., 2020). Besides, integrating instructor readiness factors can enhance the predictive power of the UTAUT framework when applied to online learning contexts (Ayodele et al., 2018; Owen et al., 2020). These factors can explain additional variance in instructor attitudes and behaviors beyond the core UTAUT constructs, offering a more accurate model for understanding technology adoption in online teaching.

There are various predictors of users’ attitudes towards technology. Notably, effort expectancy is positively linked to attitude towards technology. Studies have found that lecturers show a positive attitude towards technology if it offers ease of search, ease of use, and time savings (Md Yunus et al., 2021). Similarly, Chao (2019) confirmed that effort expectancy influences instructors’ attitudes toward technology adoption. In addition, users are more open to and confident in using a new technology if they feel it will save them time and effort compared with the traditional platform (Nikolopoulou et al., 2021). Sewadono et al. (2023) have also demonstrated the significant influence of performance expectancy in elevating instructors’ intention to use e-learning platforms.

Social influence is also one of the most important factors shaping attitude towards technology adoption. Given that ODL is still a foreign concept among instructors, their attitude towards ODL may be influenced by their social circle, such as peers, family members, authority figures, relatives, and co-workers. These people exert significant influence on instructors’ thoughts, ideas, opinions, and attitudes towards a new technology. Studies have found that instructors tend to consult their social circles for relevant ideas about online learning (Kim et al., 2020; Selvaraj et al., 2021). Studies have also found that facilitating conditions are the strongest determinant of, and vital for, technology adoption (Jung, & Lee, 2020; Sangeeta, & Tandon, 2021). Similarly, Mazman Akar’s (2019) study found that facilitating conditions positively influence teachers’ technology adoption.

Hence, this study proposes the following hypotheses:

- H1a: Effort expectancy positively influences the instructor’s attitude.

- H1b: Performance expectancy positively influences the instructor’s attitude.

- H1c: Social influence positively influences the instructor’s attitude.

- H1d: Facilitating conditions positively influence the instructor’s attitude.

Dwivedi et al. (2017) found that attitude played a critical role in the acceptance of, and behavioural intention to adopt, technology. Another study by Khechine et al. (2020) found that attitudes were the main determinants of behavioural intention to adopt technology. Similarly, a positive attitude towards e-learning tools helps instructors maintain the quality of learning and is an important instructor characteristic (Al-Fraihat et al., 2020). In addition, Jung and Lee (2020) and Selvaraj et al. (2021) found that attitude towards e-learning is a key factor in technology development and in overcoming instructors’ resistance to using technology applications in the teaching process.

From another perspective, past literature indicates that instructors’ readiness level could influence their use and application of online teaching, affecting course outcomes and student satisfaction (Alea et al., 2020; Cutri et al., 2020). However, related studies have reported inconsistent findings on the impact of readiness on using and applying online teaching in developing nations (Ayodele et al., 2018; Owen et al., 2020). König et al. (2020) empirically validated that instructors’ competence and opportunities to acquire digital competence are significant factors in adopting online teaching. Meanwhile, Prause et al. (2019) highlight the importance of readiness to teach online, described as “the state of faculty preparation” to teach online. Specifically, technical readiness has been identified as one of the most crucial factors influencing online learning behaviour (Gay, 2016). Besides technical readiness, lifestyle readiness may also affect online learning behaviour among instructors (Loomis, 2000; Pillay et al., 2007). Similarly, Geng et al. (2019) found that pedagogical readiness can influence instructors’ behavioural intention in online teaching. Hence, this study proposes the following hypotheses:

- H2: Instructors’ attitude influences their behavioural intention in online teaching.

- H3a: Technical readiness positively influences instructors’ behavioural intention in online teaching.

- H3b: Pedagogical readiness positively influences instructors’ behavioural intention in online teaching.

- H3c: Lifestyle readiness positively influences instructors’ behavioural intention in online teaching.

Peattie (2001) found that an attitude-behaviour gap, that is, a mismatch between individuals’ revealed preferences and their actual behaviours, usually exists. To investigate the attitude-behaviour connection, Alfy (2016) used Behavioural Reasoning Theory (BRT) to examine the reasons for and against individuals’ behaviours. That study helps explain gaps between instructors’ attitudes and behavioural intention in online teaching, where attitudes might differ with the nature and environment of different class types. Practical classes, where hands-on activities are integral, depend on real-time interactions that can be challenging to replicate virtually (Estriengana et al., 2019; Schlenz et al., 2020). In contrast, theoretical classes might be perceived as requiring less effort to transition online, as they primarily involve content delivery and discussion. It is therefore assumed that instructors might exhibit different attitudes depending on the effort they perceive in adapting practical and theoretical classes to online teaching.

Meanwhile, hands-on experience and skill development are paramount in practical classes. Hence, instructors may be concerned about whether online teaching methods can replicate the same learning outcomes (Simamora, 2020). They might be more skeptical about the efficacy of online methods in practical classes, potentially leading to differences in their attitudes based on perceived performance outcomes compared with theoretical classes (Gopal et al., 2021). Similarly, in practical classes, instructors might be more influenced by the experiences of peers who have effectively used online methods for hands-on activities. In contrast, theoretical classes might be perceived as having a more straightforward transition, leading to different influences on attitude formation depending on class type (Coman et al., 2020; Khalil et al., 2020). The specific resources and support required for practical and theoretical classes could also differ significantly, and differences in the availability and adequacy of these facilitating conditions could lead to varying attitudes towards online teaching depending on class type (Gamage et al., 2020; Ramos-Morcillo et al., 2020).

Technical readiness pertains to individuals’ perception of their preparedness to use technical tools and platforms effectively. In practical classes, the technical requirements for replicating hands-on experiences online might be more complex, which could require a higher level of technical readiness than in theoretical classes (Aditya, 2021; Geng et al., 2019). Besides, instructors teaching practical classes might need to redesign their teaching strategies significantly, affecting their pedagogical readiness differently from that of theoretical class instructors. Consequently, the effect of pedagogical readiness on behavioural intention could vary with class type (Ersin et al., 2020; Kaushik, & Agrawal, 2021). Similarly, practical and theoretical classes might necessitate different adjustments to instructors’ lifestyles. For instance, practical classes might demand real-time availability for online labs or simulations, while theoretical classes could offer more flexibility in scheduling. These differing lifestyle demands could lead to variations in the impact of lifestyle readiness on behavioural intention (Aditya, 2021; Asghar et al., 2021; Mathew, & Chung, 2020). For these reasons, class type is treated as a moderating variable in this study, and the following hypotheses were proposed:

- H4a: The effect of effort expectancy on the instructor’s attitude is significantly different for theoretical and practical classes.

- H4b: The effect of performance expectancy on the instructor’s attitude is significantly different for theoretical and practical classes.

- H4c: The effect of social influence on the instructor’s attitude is significantly different for theoretical and practical classes.

- H4d: The effect of facilitating conditions on the instructor’s attitude is significantly different for theoretical and practical classes.

- H4e: The effect of technical readiness on the instructor’s behavioural intention is significantly different for theoretical and practical classes.

- H4f: The effect of pedagogical readiness on the instructor’s behavioural intention is significantly different for theoretical and practical classes.

- H4g: The effect of lifestyle readiness on the instructor’s behavioural intention is significantly different for theoretical and practical classes.
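Because H1a to H4g mix direct paths with moderated paths, it can help to write the hypothesised model down as data. The following is an illustrative sketch only: the dictionary and helper names are hypothetical, and only the construct abbreviations (EE, PE, SI, FC, ATT, BI, TR, PR, LR) come from the study. It makes explicit that the ATT -> BI path (H2) is the one direct path not tested for moderation by class type.

```python
# Illustrative encoding of the hypothesised research model.
# DIRECT_PATHS, MODERATED_BY_CLASS_TYPE and the helper functions are
# hypothetical names; only the construct abbreviations come from the study.

# Direct paths: hypothesis -> (predictor construct, outcome construct)
DIRECT_PATHS = {
    "H1a": ("EE", "ATT"),
    "H1b": ("PE", "ATT"),
    "H1c": ("SI", "ATT"),
    "H1d": ("FC", "ATT"),
    "H2": ("ATT", "BI"),
    "H3a": ("TR", "BI"),
    "H3b": ("PR", "BI"),
    "H3c": ("LR", "BI"),
}

# Moderation hypotheses: each H4 tests whether one direct path differs
# between theoretical and practical classes. H2 (ATT -> BI) is not moderated.
MODERATED_BY_CLASS_TYPE = {
    "H4a": "H1a",
    "H4b": "H1b",
    "H4c": "H1c",
    "H4d": "H1d",
    "H4e": "H3a",
    "H4f": "H3b",
    "H4g": "H3c",
}


def predictors_of(outcome):
    """List the constructs hypothesised to predict `outcome`, in hypothesis order."""
    return [src for src, dst in DIRECT_PATHS.values() if dst == outcome]


def moderated_paths():
    """List the (predictor, outcome) paths whose strength may vary by class type."""
    return [DIRECT_PATHS[h] for h in MODERATED_BY_CLASS_TYPE.values()]


print(predictors_of("ATT"))  # ['EE', 'PE', 'SI', 'FC']
print(predictors_of("BI"))   # ['ATT', 'TR', 'PR', 'LR']
```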

3. METHOD

3.1. Research design and population

A cross-sectional survey design was used for this study, as data on all variables were collected at a single point in time from pre-determined samples (Wilson, 2021). The population investigated consisted of lecturers in Higher Education Institutions (HEIs) that offer hospitality and tourism courses. The inclusion criteria were as follows: i) the respondents are hospitality and tourism educators in higher education institutions; ii) they conducted online teaching during COVID-19; iii) they teach either practical or theoretical classes. The study focused on instructors from Malaysia, the Philippines, Indonesia, and Thailand. These countries share similar characteristics: they offer numerous hospitality and tourism educational courses and have a comparable technological landscape, albeit with variations in infrastructure, access to resources, and levels of digital literacy among their populations.

3.2. Research instruments

The questionnaire was divided into three main sections. Section A measures the adoption level among online teaching instructors, while Section B measures the readiness and behaviour of instructors regarding online teaching. The instructor adoption items (see Appendix 1) were adopted from Venkatesh et al. (2003), while the attitude items were adopted from Mosunmola et al. (2018). In addition, the technical, lifestyle, and pedagogical readiness items were adopted from Gay’s (2016) study. A five-point Likert scale was used for all survey items. Lastly, a nominal scale was used to obtain the instructors’ demographic profiles. Prior to data collection, the face validity of the survey was established through review by a panel of experts. All comments and suggestions obtained from this validity check were recorded and evaluated for use in subsequent methodological analyses. Next, the items were tested to ensure the reliability of the survey measures (Coakes et al., 2009); the Cronbach’s alpha for each construct exceeded the minimum threshold of .70. Purposive sampling was used to collect primary data from the specified samples (Zikmund et al., 2013). The minimum sample size was determined using G*Power software (N > 138).
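The reliability check above compares each construct’s Cronbach’s alpha with the .70 threshold. As a minimal sketch, assuming responses are stored with one row per respondent and one column per item, alpha is k/(k − 1) × (1 − sum of item variances / variance of the total score). The function name and the five-respondent data set are hypothetical and are not the study’s data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one construct.

    responses: one row per respondent, one column per item
    (e.g. five-point Likert scores for EE1-EE5).
    """
    k = len(responses[0])  # number of items in the construct
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    sum_item_variances = sum(variance(item) for item in items)
    total_scores = [sum(row) for row in responses]  # each respondent's scale total
    return (k / (k - 1)) * (1 - sum_item_variances / variance(total_scores))


# Hypothetical responses from five instructors to a three-item scale
data = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 3],
]
alpha = cronbach_alpha(data)
print(round(alpha, 3), "meets .70" if alpha > 0.70 else "below .70")  # 0.913 meets .70
```

Sample variances are used for both the items and the total score; since the same denominator appears in both, the ratio (and therefore alpha) is the same as with population variances.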

3.3. Data collection

An online survey was chosen because of the COVID-19 movement control restrictions, and a snowball approach was used to gather the data: the first group of respondents shared the survey link with their colleagues (Dragan, & Isaic-Maniu, 2013). Online surveys also offer a convenient way for respondents to engage with the research from their own devices and at their preferred time (Geldsetzer, 2020). This accessibility is particularly relevant during periods of movement control, when physical interactions and traditional data collection methods are restricted.

A structured, close-ended online questionnaire in English was administered via the Google Forms platform. To reach the first group of respondents, the researcher identified universities that offer hospitality and tourism courses and gathered the educators’ names and contact details from the university websites. Their written consent was obtained before the online survey link was emailed.

3.4. Data analysis

The Partial Least Squares Structural Equation Modelling (PLS-SEM) method was chosen for its advantages over the covariance-based approach. These advantages relate to theoretical and measurement conditions as well as distributional and practical considerations (Hanafiah, 2020). PLS-SEM can also efficiently assess data with complex hierarchical models such as this study’s framework (Hair et al., 2020; Wang et al., 2019).

The PLS-SEM path model was assessed in two steps: the measurement model was evaluated first, followed by the structural model. Next, multi-group analysis (MGA) was used to test the moderating effect of class type, as proposed by MacKinnon (2011). Two software applications were used for data analysis: IBM SPSS Statistics (Version 26) and SmartPLS version 3.1.1.
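The text does not state which MGA procedure was run in SmartPLS. One common option is a permutation test on the between-group difference in a path coefficient: group labels are shuffled many times, and the observed difference is compared with the differences produced by chance. The minimal sketch below illustrates only that logic. It substitutes a bivariate standardized coefficient (Pearson’s r) for a full PLS path estimate, and the function names, seed, and permutation count are hypothetical choices.

```python
import random
from statistics import mean


def standardized_slope(x, y):
    """Standardized coefficient for a single predictor (equals Pearson's r)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def permutation_mga(group_a, group_b, n_perm=2000, seed=42):
    """Two-sided permutation test for a group difference in one path.

    Each group is a list of (x, y) pairs, e.g. (EE score, ATT score) for
    theory-class vs practical-class instructors.
    Returns (observed difference, permutation p-value).
    """
    def coef(pairs):
        xs, ys = zip(*pairs)
        return standardized_slope(xs, ys)

    observed = coef(group_a) - coef(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # randomly reassign respondents to the two groups
        diff = coef(pooled[:n_a]) - coef(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # add-one correction keeps the p-value strictly positive
    return observed, (extreme + 1) / (n_perm + 1)
```

A small permutation p-value would suggest the path differs between theoretical and practical classes; in the full analysis the coefficient would come from re-estimating the PLS model for each permuted split rather than from a single correlation.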

4. RESULTS

A total of 248 respondents took part in this study. Most were aged 31 to 40 years (n=128, 51.6%). The largest group came from the Philippines (n=108, 43.5%), followed by Malaysia (n=101, 40.7%), Indonesia (n=23, 9.3%), and Thailand (n=16, 6.5%). Of the 248 respondents, 133 (53.6%) were from private universities and the remaining 115 (46.4%) from public universities. The most common area of expertise was tourism (n=103, 41.5%), followed by hospitality (n=86, 34.7%), culinary and food (n=53, 21.4%), and event management (n=6, 2.4%). This study compared two types of classes: theory-based (lecture/mass lecture) and practical-based (kitchen/lab, small group of students). A total of 165 respondents (66.5%) conducted theory-based classes in higher education institutions, while the remaining 83 (33.5%) conducted practical-based classes. The largest group of respondents had five years or less of teaching experience (n=83, 33.5%), and 75 (30.2%) had six to ten years of teaching experience in higher education institutions. Regarding online teaching experience, 98.4% of respondents had less than one year of basic online teaching experience before COVID-19.

4.1. Measurement model assessment

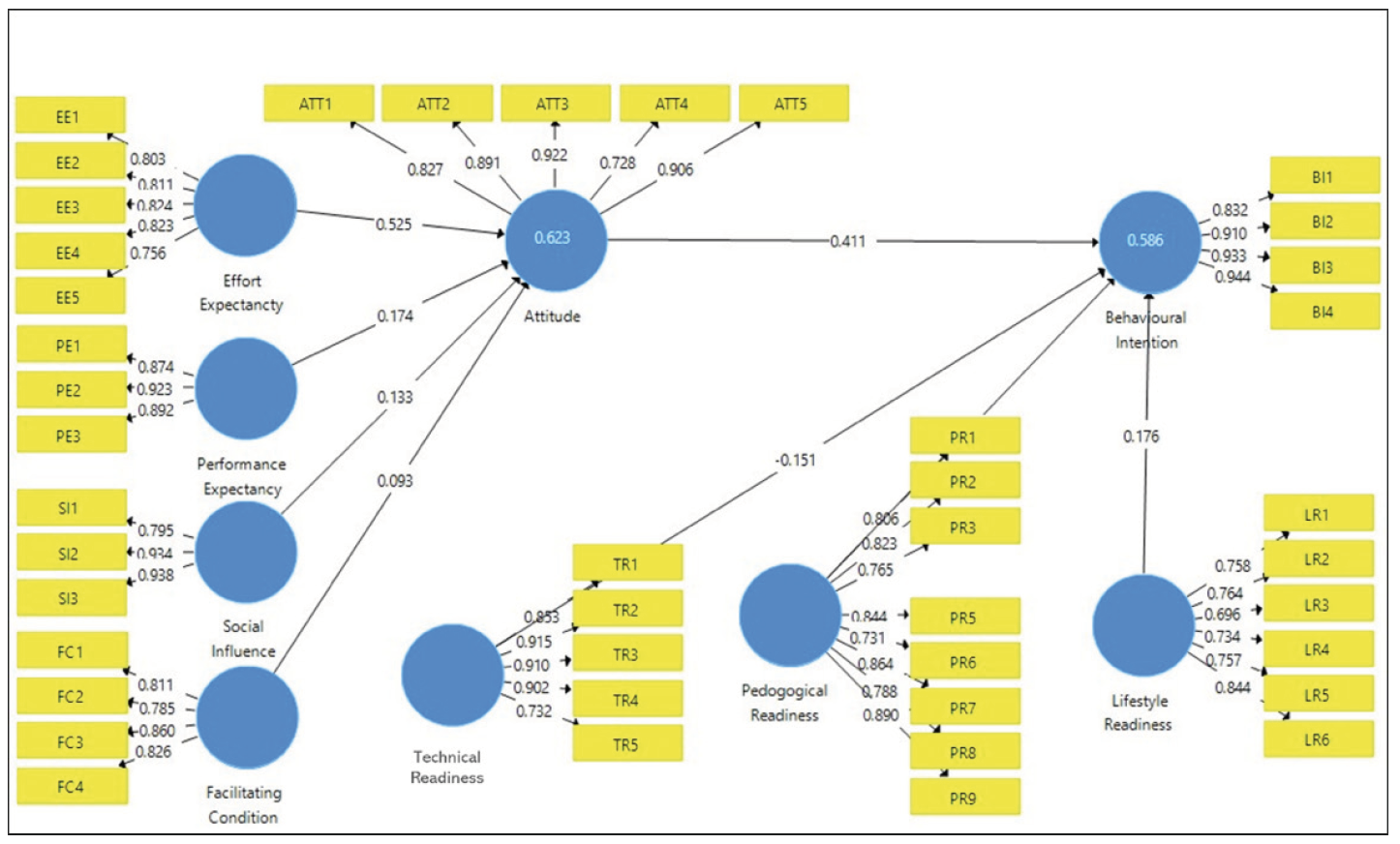

The measurement model (outer model) was assessed first (Hanafiah, 2020; Hair et al., 2014). Four criteria must be established to assess a reflective measurement model: (i) indicator reliability, (ii) internal consistency reliability, (iii) convergent validity, and (iv) discriminant validity. Figure 1 and Table 1 present the outer loadings, Cronbach’s alpha, composite reliability, and average variance extracted (AVE) for the reflective measurement model assessment.

FIGURE 1. Measurement Model.

TABLE 1. Measurement Model Assessment.

| Code | Outer Loading | Cronbach Alpha | Composite Reliability | Average Variance Extracted (AVE) |
|---|---|---|---|---|
| Performance Expectancy | | 0.878 | 0.925 | 0.804 |
| PE1 | 0.874 | | | |
| PE2 | 0.923 | | | |
| PE3 | 0.892 | | | |
| Effort Expectancy | | 0.863 | 0.901 | 0.646 |
| EE1 | 0.803 | | | |
| EE2 | 0.811 | | | |
| EE3 | 0.824 | | | |
| EE4 | 0.823 | | | |
| EE5 | 0.756 | | | |
| Facilitating Conditions | | 0.839 | 0.892 | 0.674 |
| FC1 | 0.811 | | | |
| FC2 | 0.785 | | | |
| FC3 | 0.860 | | | |
| FC4 | 0.826 | | | |
| Social Influence | | 0.867 | 0.920 | 0.794 |
| SI1 | 0.795 | | | |
| SI2 | 0.934 | | | |
| SI3 | 0.938 | | | |
| Attitude | | 0.909 | 0.932 | 0.736 |
| ATT1 | 0.827 | | | |
| ATT2 | 0.891 | | | |
| ATT3 | 0.922 | | | |
| ATT4 | 0.728 | | | |
| ATT5 | 0.906 | | | |
| Behavioural Intention | | 0.926 | 0.948 | 0.821 |
| BI1 | 0.832 | | | |
| BI2 | 0.910 | | | |
| BI3 | 0.933 | | | |
| BI4 | 0.944 | | | |
| Technical Readiness | | 0.915 | 0.937 | 0.749 |
| TR1 | 0.853 | | | |
| TR2 | 0.915 | | | |
| TR3 | 0.910 | | | |
| TR4 | 0.902 | | | |
| TR5 | 0.732 | | | |
| Lifestyle Readiness | | 0.855 | 0.891 | 0.578 |
| LR1 | 0.758 | | | |
| LR2 | 0.764 | | | |
| LR4 | 0.734 | | | |
| LR5 | 0.757 | | | |
| LR6 | 0.844 | | | |
| Pedagogical Readiness | | 0.928 | 0.940 | 0.665 |
| PR1 | 0.806 | | | |
| PR2 | 0.823 | | | |
| PR3 | 0.765 | | | |
| PR5 | 0.844 | | | |
| PR6 | 0.731 | | | |
| PR7 | 0.864 | | | |
| PR8 | 0.788 | | | |
| PR9 | 0.890 | | | |

*N = 248. Items removed (outer loadings below 0.70): TR6, LR3, and PR4.

Table 1 shows that the retained indicator loadings range from 0.728 to 0.944, exceeding the recommended value of 0.708 (Hair et al., 2014). Items TR6, LR3, and PR4 were removed because their loadings fell below this threshold. The Cronbach’s alpha values of the nine constructs range from 0.839 to 0.928 and their composite reliability values from 0.891 to 0.948, all exceeding the acceptable value of 0.70 and indicating adequate internal consistency of the items in each construct. Meanwhile, the AVE values range from 0.578 to 0.821, which indicates satisfactory convergent validity. The Heterotrait-Monotrait Ratio of Correlations (HTMT) was used to confirm the discriminant validity of the model; HTMT values should be below 0.90 (Henseler et al., 2015). As all HTMT values were below 0.90, no discriminant validity problems were found among the latent constructs.
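The composite reliability and AVE values in Table 1 follow from the standardized outer loadings, and HTMT follows from the item correlation matrix. The sketch below uses the standard formulas: composite reliability = (Σλ)² / ((Σλ)² + Σ(1 − λ²)), AVE = mean of λ², and HTMT = mean heterotrait correlation / √(mean monotrait correlation of construct i × that of construct j). The function names and the dictionary layout for correlations are hypothetical, and the HTMT helper assumes positively correlated indicators. Feeding in the three Performance Expectancy loadings reproduces the values reported in Table 1.

```python
from itertools import combinations, product
from math import sqrt
from statistics import mean


def composite_reliability(loadings):
    """Composite reliability (rho_c) from standardized outer loadings."""
    total = sum(loadings)
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error_variance)


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized outer loadings."""
    return mean(lam ** 2 for lam in loadings)


def htmt(corr, items_i, items_j):
    """Heterotrait-monotrait ratio for two constructs.

    corr maps frozenset({item_a, item_b}) to the correlation between
    the two indicators (assumed positive here).
    """
    def r(a, b):
        return corr[frozenset((a, b))]

    hetero = mean(r(a, b) for a, b in product(items_i, items_j))
    mono_i = mean(r(a, b) for a, b in combinations(items_i, 2))
    mono_j = mean(r(a, b) for a, b in combinations(items_j, 2))
    return hetero / sqrt(mono_i * mono_j)


pe_loadings = [0.874, 0.923, 0.892]  # PE1-PE3 from Table 1
print(round(composite_reliability(pe_loadings), 3))       # 0.925
print(round(average_variance_extracted(pe_loadings), 3))  # 0.804
```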

4.2. Structural model assessment

The next step in the PLS-SEM analysis is to evaluate the structural model by testing the proposed hypotheses and identifying the effects of the exogenous variables on the endogenous variables. Four aspects were considered: i) path coefficients (β), ii) the coefficient of determination (R²), iii) effect size (f²), and iv) predictive relevance (Q²) (Hair et al., 2019). Table 2 reports the path coefficients, T statistics, and significance levels for all hypothesised paths, together with the R², Q², and f² values.

TABLE 2. Path Coefficients.

| Path | Path coefficient (β) | T Statistics | P-Values | R² | Q² | f² | Hypothesis |
|---|---|---|---|---|---|---|---|
| H1a: EE -> ATT | 0.066*** | 7.977 | 0.000 | 0.623 | 0.424 | 0.351 | Accept |
| H1b: PE -> ATT | 0.049*** | 3.562 | 0.000 | | | 0.013 | Accept |
| H1c: SI -> ATT | 0.045** | 2.985 | 0.003 | | | 0.043 | Accept |
| H1d: FC -> ATT | 0.056 | 1.658 | 0.098 | | | 0.030 | Reject |
| H2: ATT -> BI | 0.062*** | 6.664 | 0.000 | 0.586 | 0.448 | 0.200 | Accept |
| H3a: TR -> BI | 0.052** | 2.906 | 0.004 | | | 0.033 | Accept |
| H3b: PR -> BI | 0.070*** | 5.383 | 0.000 | | | 0.027 | Accept |
| H3c: LR -> BI | 0.058** | 3.014 | 0.003 | | | 0.108 | Accept |

Notes: **p<.05, ***p<.001
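In PLS-SEM software, T statistics such as those in Table 2 are typically obtained by bootstrapping: the original path estimate is divided by the standard deviation of its estimates across bootstrap resamples. The sketch below assumes a standard normal reference distribution to convert the reported T statistics into two-tailed p-values, and applies the significance markers defined in the table notes. It reproduces the reported markers for all eight paths; small third-decimal differences (about 0.097 versus 0.098 for FC -> ATT) would be expected if the software used a t rather than a normal distribution. The bootstrap estimates in the last line are hypothetical.

```python
from math import erfc, sqrt
from statistics import stdev


def bootstrap_t(original_estimate, bootstrap_estimates):
    """T statistic: original path estimate divided by its bootstrap standard error."""
    return original_estimate / stdev(bootstrap_estimates)


def two_tailed_p(t):
    """Two-tailed p-value for t under a standard normal approximation."""
    return erfc(abs(t) / sqrt(2))


def stars(p):
    """Significance markers as defined in the notes to Table 2."""
    if p < 0.001:
        return "***"
    if p < 0.05:
        return "**"
    return ""


# T statistics as reported in Table 2.
t_stats = {
    "EE -> ATT": 7.977, "PE -> ATT": 3.562, "SI -> ATT": 2.985, "FC -> ATT": 1.658,
    "ATT -> BI": 6.664, "TR -> BI": 2.906, "PR -> BI": 5.383, "LR -> BI": 3.014,
}
for path, t in t_stats.items():
    p = two_tailed_p(t)
    print(f"{path:10s} t = {t:5.3f}  p = {p:.3f} {stars(p)}")

# HYPOTHETICAL bootstrap estimates for one path, only to show the ratio.
print(f"t = {bootstrap_t(0.50, [0.45, 0.50, 0.55, 0.48, 0.52]):.2f}")
```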

The path coefficients show that effort expectancy in online teaching (β=0.066***; t=7.977) has a significant relationship with the instructor's attitude toward online teaching. Second, this study confirms a significant relationship between performance expectancy in online teaching (β=0.049***; t=3.562) and the instructor's attitude. Third, the relationship between social influence toward online teaching (β=0.045**; t=2.985) and the instructor's attitude is also significant. However, the relationship between facilitating condition (β=0.056; t=1.658; p=0.098) and the instructor's attitude toward online teaching is not significant. Hence, only effort expectancy, performance expectancy, and social influence are significant determinants of the instructor's attitude toward online teaching.

The path coefficients also show that the relationship between the instructor's attitude (β=0.062***; t=6.664) and the behavioural intention to continue online teaching is significant, confirming that attitude is a significant predictor of instructors' behavioural intention in online teaching. The relationship between technical readiness in online teaching (β=0.052**; t=2.906) and behavioural intention is also significant, as is the relationship between pedagogical readiness (β=0.070***; t=5.383) and behavioural intention. In addition, lifestyle readiness (β=0.058**; t=3.014) is significantly related to behavioural intention. These results confirm that technical, pedagogical, and lifestyle readiness significantly influence instructors' behavioural intention to opt for online teaching.

The R² values (0.586 to 0.623) show that the framework explains a considerable share of the variance in its endogenous constructs. Effort expectancy, performance expectancy, social influence, and facilitating condition together explain 62.3 percent of the variance in attitude, while the instructor's attitude and readiness explain 58.6 percent of the variance in behavioural intention. Following Chin (1998), the effect size f² was used to assess how much R² changes when a given exogenous construct is omitted from the model; f² values of 0.02, 0.15, and 0.35 represent weak, moderate, and substantial effects, respectively. The effect of effort expectancy on attitude (f² = 0.351) is substantial, whereas social influence (0.043) and facilitating condition (0.030) have weak effects, and performance expectancy (0.013) falls below the 0.02 threshold. For behavioural intention, attitude has a moderate effect (0.200); lifestyle readiness has the largest effect among the readiness constructs (0.108), although it remains in the weak range, and technical (0.033) and pedagogical (0.027) readiness have weak effects. Finally, the blindfolding procedure yielded Q² values greater than zero (0.424 for attitude and 0.448 for behavioural intention), indicating that the model has predictive relevance (Chin, 1998).
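The effect size and predictive relevance measures can be written out directly. In this sketch, f² is computed as (R²_included - R²_excluded) / (1 - R²_included), where R²_excluded is the R² of the endogenous construct re-estimated without the predictor in question, and Q² as 1 - SSE/SSO from blindfolding. The classification uses the 0.02, 0.15, and 0.35 cut-offs quoted above and is applied to the f² values reported in Table 2; the R², SSE, and SSO inputs in the last line are hypothetical.

```python
def f_squared(r2_included, r2_excluded):
    """Cohen's f^2: change in R^2 when one predictor is dropped, over unexplained variance."""
    return (r2_included - r2_excluded) / (1 - r2_included)


def label_effect(f2):
    """Classify f^2 with the 0.02 / 0.15 / 0.35 cut-offs quoted in the text."""
    if f2 >= 0.35:
        return "substantial"
    if f2 >= 0.15:
        return "moderate"
    if f2 >= 0.02:
        return "weak"
    return "below 0.02"


def q_squared(sse, sso):
    """Stone-Geisser Q^2 from blindfolding; values above zero indicate predictive relevance."""
    return 1 - sse / sso


# f^2 values as reported in Table 2.
reported_f2 = {
    "EE -> ATT": 0.351, "PE -> ATT": 0.013, "SI -> ATT": 0.043, "FC -> ATT": 0.030,
    "ATT -> BI": 0.200, "TR -> BI": 0.033, "PR -> BI": 0.027, "LR -> BI": 0.108,
}
for path, f2 in reported_f2.items():
    print(f"{path:10s} f2 = {f2:.3f} -> {label_effect(f2)}")

# HYPOTHETICAL inputs, only to illustrate the two formulas.
print(round(f_squared(0.60, 0.50), 3), round(q_squared(40.0, 100.0), 3))
```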

4.3. Multi-group analysis

This study distinguishes between theoretical and practical classes and uses Multi-Group Analysis (MGA) to test whether class type moderates the influence of the independent variables on instructors' attitude and behavioural intention. This approach is recommended when the moderating variable is categorical (Henseler, 2012). Following the MGA approach proposed by Afthanorhan et al. (2014), the sample was first split into groups (subsamples), and the paths from the exogenous (independent) variables to the endogenous (dependent) variables were estimated separately for each subsample. This allowed the measurement model to be judged acceptable (or unacceptable) within each group. Next, bootstrapping with 500 resamples was applied to obtain the standard errors of the structural paths in each subsample. Finally, the differences between the groups' path estimates were tested for significance using t-tests.
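The group comparison step can be sketched with the parametric test widely used in PLS multi-group analysis, which pools the bootstrap standard errors of the two groups. We assume this is the form of t-test used in the approach of Afthanorhan et al. (2014), which the text cites without giving the formula. The subsample sizes (165 and 83) and the SI -> ATT coefficients come from the study; the standard errors are hypothetical placeholders, because subsample standard errors are not reported. The resulting t would be compared against a t distribution with n1 + n2 - 2 degrees of freedom.

```python
from math import sqrt


def mga_t(beta1, se1, n1, beta2, se2, n2):
    """Parametric t-test for H0: the path coefficient is equal in both groups.

    se1 and se2 are bootstrap standard errors of the path in each subsample.
    Returns (t, degrees_of_freedom).
    """
    df = n1 + n2 - 2
    # Pooled standard error, weighting each group's squared SE by (n - 1)^2 / df.
    pooled = sqrt(((n1 - 1) ** 2 / df) * se1 ** 2 + ((n2 - 1) ** 2 / df) * se2 ** 2)
    t = (beta1 - beta2) / (pooled * sqrt(1 / n1 + 1 / n2))
    return t, df


# SI -> ATT coefficients (Table 3) and subsample sizes from the text;
# se1 and se2 are HYPOTHETICAL because subsample standard errors are not reported.
t, df = mga_t(beta1=0.119, se1=0.050, n1=165, beta2=0.092, se2=0.070, n2=83)
print(f"t = {t:.3f} with {df} degrees of freedom")
```

With these placeholder standard errors the difference between the two groups' coefficients would be far from significant, which illustrates why a path can be significant in one group and not the other without the two coefficients differing significantly.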

Of the 248 respondents, 66.5 percent (n=165) taught theory-based classes (lecture/mass lecture) in higher education institutions, while the remaining 33.5 percent (n=83) taught practical-based classes (kitchen/lab with small groups of students). Table 3 presents the estimated structural paths for the two subsamples.

TABLE 3. Multi-Group Analysis.

| Path | β (Theoretical Class) | p-value | β (Practical Class) | p-value | Result |
|---|---|---|---|---|---|
| H4a: EE -> ATT*Mod | 0.472*** | 0.000 | 0.640*** | 0.000 | Reject |
| H4b: PE -> ATT*Mod | 0.217** | 0.004 | 0.137* | 0.011 | Reject |
| H4c: SI -> ATT*Mod | 0.119** | 0.022 | 0.092 | 0.187 | Reject |
| H4d: FC -> ATT*Mod | 0.060 | 0.328 | 0.151 | 0.067 | Reject |
| H4e: TR -> BI*Mod | -0.180** | 0.001 | -0.143 | 0.119 | Accept |
| H4f: PR -> BI*Mod | 0.343*** | 0.000 | 0.465*** | 0.000 | Reject |
| H4g: LR -> BI*Mod | 0.322*** | 0.000 | -0.136 | 0.087 | Accept |

Notes: **p<.05, ***p<.001

According to the Multi-Group Analysis (MGA), the effects of effort expectancy and performance expectancy on attitude are significant among instructors of both theoretical and practical classes, while the effect of facilitating condition is non-significant in both groups. Hence, for these paths, the level of significance does not differ between the two class types. However, social influence significantly affects attitude among instructors of theoretical classes (βT = 0.119; p = 0.022) but not among practical class instructors (βP = 0.092; p = 0.187). Hence, this study concludes that class type moderates the relationship between social influence and attitude. The relationship between attitude and behavioural intention shows no difference in significance level between the two class types. However, the relationships of technical and lifestyle readiness with behavioural intention differ between theoretical and practical classes: both are significant for theoretical classes (TR: βT = -0.180, p = 0.001; LR: βT = 0.322, p = 0.000) but not for practical classes (TR: βP = -0.143, p = 0.119; LR: βP = -0.136, p = 0.087). Likewise, class type makes no significant difference to the relationship between pedagogical readiness and behavioural intention, which is significant in both groups.
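The comparison of significance levels described above can be expressed as a simple rule: a path is flagged when it is significant (p < 0.05) in one class type but not in the other. Applied to the p-values in Table 3, the sketch below flags social influence, technical readiness, and lifestyle readiness, the three paths singled out in the paragraph. This rule compares significance levels only; it is not itself a test of whether the two coefficients differ, which is what the parametric MGA t-test addresses. The 0.05 threshold is an assumption taken from the table notes.

```python
ALPHA = 0.05  # threshold assumed from the table notes (**p<.05)

# p-values (theoretical class, practical class) as reported in Table 3.
table3_p = {
    "EE -> ATT": (0.000, 0.000),
    "PE -> ATT": (0.004, 0.011),
    "SI -> ATT": (0.022, 0.187),
    "FC -> ATT": (0.328, 0.067),
    "TR -> BI": (0.001, 0.119),
    "PR -> BI": (0.000, 0.000),
    "LR -> BI": (0.000, 0.087),
}


def differs_in_significance(p_theory, p_practice, alpha=ALPHA):
    """True when the path is significant in exactly one of the two groups."""
    return (p_theory < alpha) != (p_practice < alpha)


flagged = [path for path, (pt, pp) in table3_p.items() if differs_in_significance(pt, pp)]
print("Significant in only one class type:", flagged)
```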

5. DISCUSSION AND IMPLICATIONS

5.1. Study Discussion

This study investigates the factors that encourage instructors to adopt online teaching, given the challenges of moving learning from offline to online platforms. The findings showed that effort expectancy (EE), performance expectancy (PE), and social influence (SI) had a direct effect on the instructor's attitude toward online teaching, consistent with prior studies (Jung, & Lee, 2020; Md Yunus et al., 2021; Nikolopoulou et al., 2021; Sangeeta, & Tandon, 2021; Sewadono et al., 2023). However, facilitating condition (FC) did not directly affect the instructor's attitude toward adopting online teaching. This result departs from mainstream research findings (see Khechine et al., 2020; Mei et al., 2018; Nikou, & Economides, 2019; Wong, 2016), which consider facilitating conditions the strongest determinant of technology adoption and a positive influence on it. The absence of a direct effect may be due to contextual factors specific to this study: online teaching environments vary widely, and factors such as institutional policies, technological infrastructure, and instructor training programs can shape how FC affects attitudes.

In addition, the instructor's attitude directly and significantly influences their behavioural intention to engage in online teaching, consistent with the findings of Khechine et al. (2020). A positive attitude among instructors can therefore substantially increase their inclination to embrace online teaching practices. Previous research consistently supports this relationship: Estriegana et al. (2019), Keong et al. (2014), and Schlenz et al. (2020) all found that a favourable attitude acts as a catalyst for instructors' behavioural intention to adopt online teaching methods. The link between attitude and behavioural intention lies in instructors' tendency to align their actions with their attitudes, which fosters the integration of online teaching methods into their pedagogical practice.

This research underscores the role of technology readiness in predicting instructors' intentions to continue online teaching in the future, in line with studies by Alea et al. (2020), Cutri et al. (2020), Omotayo and Adekunle (2021), Rafique et al. (2018), and Tsourela and Roumeliotis (2015). Specifically, the results show that technical, pedagogical, and lifestyle readiness each significantly affect instructors' behavioural intention to sustain online teaching, consistent with Ayodele et al. (2018), Brinkley-Etzkorn (2018), McGee et al. (2017), Owen et al. (2020), and Prause et al. (2019). Taken together, this evidence indicates that instructors' preparedness in terms of technical competence, pedagogical skill, and adaptability to remote work shapes their intention to adopt online teaching practices in the future.

The multi-group analysis was employed to examine the potential moderating effect of class type on the relationships in the research model. Among the eight hypotheses tested, significant differences emerged for technical readiness and lifestyle readiness, consistent with the works of Aditya (2021), Asghar et al. (2021), and Geng et al. (2019). This divergence may be attributed to inherent differences between theoretical and practical classes, as evidenced by Alea et al. (2020) and Mathew and Chung (2020). Furthermore, instructors leading practical classes online face notable challenges, often stemming from the constraints of the virtual learning environment, as discussed by Goh and King (2020). These findings underscore the interplay between class type, instructor adaptability, and technological readiness in shaping technology adoption across educational contexts.

In contrast, the effects of effort expectancy, performance expectancy, social influence, and facilitating conditions on the instructor's attitude, and the effect of pedagogical readiness on behavioural intention, did not differ significantly between theoretical and practical classes. This suggests that instructors' attitudes may be driven by their perception of the benefits and outcomes of their teaching efforts in both theoretical and practical classes. Similarly, the social dynamics shaping instructors' attitudes might not differ significantly between the two class types; instructors in both may be influenced by similar factors, such as colleagues' opinions, institutional culture, and peer recognition, leading to the rejection of this hypothesis. In addition, the role of facilitating conditions, which relate to the availability of resources and support for teaching, does not appear to depend on class type. Likewise, instructors' preparedness in terms of teaching methodologies might be important regardless of class type.

5.2. Study Implications

This study highlights the central role of attitude in determining instructors' behavioural intention to conduct online teaching. An instructor's attitude is influenced by performance expectancy, social influence, and effort expectancy, which underscores the importance of the online learning system's ease of use and usefulness. Of the four technology adoption attributes, only facilitating condition (FC) did not influence the instructor's attitude toward online teaching, whereas the other attributes (performance expectancy, social influence, and effort expectancy) directly affected attitude (Rana et al., 2017; Weerakkody et al., 2017). Therefore, in addition to ongoing training and support for instructors and students, the online system must be easy to navigate, with clear instructions and accessible features that facilitate seamless interaction between instructors and students.

In addition, this study identifies technical, pedagogical, and lifestyle readiness as direct factors influencing instructors' behavioural intentions. Higher education institutions need to provide instructors with the technical support and resources required to ensure they have the skills and tools for online teaching. Providing pedagogical training and resources can also boost instructors' confidence and competence in delivering engaging and effective online instruction. Finally, supporting instructors in achieving a healthy work-life balance can contribute to their overall job satisfaction and motivation in online teaching.

An important implication of this study is that higher education institutions should develop comprehensive training programs and support mechanisms for instructors to enhance their technology readiness in online teaching. The type of class has been found to moderate the influence of technical and lifestyle readiness on instructors’ behavioural intentions, highlighting the need for tailored training based on the specific requirements of different classes. Higher education institutions should design training programs specifically tailored to the different class types (theoretical vs practical) within the Hospitality and Tourism curriculum. These training programs should focus on providing instructors with the necessary skills and knowledge to effectively integrate technology into their respective class types. Practical classes may require additional training on virtual simulations, case studies, and other interactive tools. In contrast, theoretical classes may benefit from training on online discussion facilitation and innovative content delivery methods.

6. CONCLUSION

The shift to online learning platforms during COVID-19 was revolutionary and timely, particularly in the context of the Fourth Industrial Revolution. However, there are still concerns that online learning may have been a sub-optimal substitute for conventional teaching and learning activities. Moreover, although online teaching and learning were deemed more dynamic, it is evident that they could not fully replace face-to-face classes. The findings of this study can improve understanding of online educational delivery, evaluation, and interaction between students and instructors, specifically in hospitality and tourism settings. They can also guide higher education institutions in deciding which program content to focus on, and help them develop a hospitality curriculum that is flexible and well adapted to a dynamic future environment. Since online teaching requires different skills and competencies from face-to-face teaching, instructors must adapt their pedagogical approach and learn to use technological tools to deliver classes effectively. In addition, they must consider the additional challenges students face when studying from home, such as a lack of social interaction and the need for self-discipline.

6.1. Limitations and future lines of research

One of the limitations of this study is the limited geographical scope of the respondents. The study participants came from only four ASEAN countries: Malaysia, Indonesia, Thailand, and the Philippines. As different countries may have unique educational systems, teaching practices, and student expectations, the study’s findings may not fully represent the global or international context, limiting the generalizability of the results. Future research could consider expanding the participant pool to include educators and students from a more diverse range of countries, regions, and educational contexts to improve the study’s validity and applicability. This would provide a broader perspective on online teaching readiness and preferences, allowing for a more comprehensive understanding of the subject matter.

Additionally, while this study extended the UTAUT model with technology readiness attributes, each country is unique, and this integrated framework may not be directly applicable to other contexts or countries. Future research could test the extended model and readiness attributes in different cultural, educational, or regional contexts to assess their generalizability. Future research could also examine the specific factors underlying these constructs, such as the challenges affecting instructors' readiness and intention to continue online teaching. Meanwhile, given the sudden and unexpected transition to online teaching, the primary focus of this study was to explore instructors' readiness, attitudes, and strategies in adapting to online teaching. These aspects were therefore prioritised over demographic factors to better understand the nuances of online teaching adoption in this context. According to Venkatesh et al. (2003), the main emphasis of UTAUT is on the key constructs and their direct impact on technology adoption and use, regardless of demographic differences. Nonetheless, this study acknowledges that, by excluding demographic variables, the findings might not capture the full range of potential moderating effects in the study framework.

7. FUNDING

Universiti Teknologi MARA supports the research work under the Visibility Research Grant (VRGS). The authors wish to express their gratitude to the Faculty of Hotel and Tourism Management, Universiti Teknologi MARA, for supporting the research and development of this manuscript.

8. COMPETING INTEREST

The authors declare that they have no known competing financial interests or personal relationships that could have influenced the work reported in this paper.

9. REFERENCES

Abou-Khalil, V., Helou, S., Khalifé, E., Chen, M. A., Majumdar, R., & Ogata, H. (2021). Emergency online learning in low-resource settings: Effective student engagement strategies. Education Sciences, 11(1), e24. https://doi.org/10.3390/educsci11010024

Aditya, D. S. (2021). Embarking Digital Learning Due to COVID-19: Are Teachers Ready? Journal of Technology and Science Education, 11(1), 104-116. https://doi.org/10.3926/jotse.1109

Afthanorhan, A., Nazim, A., & Ahmad, S. (2014). A parametric approach to partial least square structural equation modeling of multigroup analysis (PLS-MGA). International Journal of Economic, Commerce, and Management, 2(10), 15-24.

Akinnuwesi, B. A., Uzoka, F. M. E., Fashoto, S. G., Mbunge, E., Odumabo, A., Amusa, O. O., Okpeku, M., & Owolabi, O. (2022). A modified UTAUT model for the acceptance and use of digital technology for tackling Covid-19. Sustainable Operations and Computers, 3, 118-135. https://doi.org/10.1016/j.susoc.2021.12.001

Al-Fraihat, D., Joy, M., Masa’deh, R., & Sinclair, J. (2020). Evaluating e-learning systems success: An empirical study. Computers in Human Behavior, 102, 67-86. https://doi.org/10.1016/j.chb.2019.08.004

Alea, L. A., Fabrea, M. F., Roldan, R. D. A., & Farooqi, A. Z. (2020). Teachers' COVID-19 awareness, distance learning education experiences and perceptions towards institutional readiness and challenges. International Journal of Learning, Teaching and Educational Research, 19(6), 127-144. https://doi.org/10.26803/ijlter.19.6.8

Alfy, S., Gómez, J. M., & Ivanov, D. (2016). Exploring instructors’ technology readiness, attitudes and behavioral intentions towards e-learning technologies in Egypt and United Arab Emirates. Education and Information Technologies, 22(5), 2605-2627. https://doi.org/10.1007/s10639-016-9562-1

Asghar, M. Z., Barberà, E., & Younas, I. (2021). Mobile Learning Technology Readiness and Acceptance among Pre-Service Teachers in Pakistan during the COVID-19 Pandemic. Knowledge Management & E-Learning, 13(1), 83-101. https://doi.org/10.34105/j.kmel.2021.13.005

Ayodele, S., Endozo, A., & Ogbari, M. E. (2018, October). A study on factors hindering online learning acceptance in developing countries. In ICETC (Ed.), Proceedings of the 10th International Conference on Education Technology and Computers (pp. 254-258). Association for Computing Machinery. https://dl.acm.org/doi/abs/10.1145/3290511.3290533

Boettcher, J. V., & Conrad, R.-M. (2021). The online teaching survival guide: Simple and practical pedagogical tips. John Wiley & Sons.

Brinkley-Etzkorn, K. E. (2018). Learning to teach online: Measuring the influence of faculty development training on teaching effectiveness through a TPACK lens. The Internet and Higher Education, 38, 28-35. https://doi.org/10.1016/j.iheduc.2018.04.004

Chao, C. M. (2019). Factors determining the behavioral intention to use mobile learning: An application and extension of the UTAUT model. Frontiers in Psychology, 10, 1-14. https://doi.org/10.3389/fpsyg.2019.01652

Chin, W. W. (1998). The partial least squares approach to structural equation modelling. Advances in Hospitality and Leisure, 8(2), 295-336.

Coakes, S. J., Steed, L., & Ong, C. (2009). Analysis without anguish: SPSS version 16.0 for windows. John Wiley and Sons Ltd.

Coman, C., Țîru, L. G., Meseșan-Schmitz, L., Stanciu, C., & Bularca, M. C. (2020). Online teaching and learning in higher education during the coronavirus pandemic: Students’ perspective. Sustainability, 12(24), e10367. https://doi.org/10.3390/su122410367

Cutri, R. M., Mena, J., & Whiting, E. F. (2020). Faculty readiness for online crisis teaching: Transitioning to online teaching during the COVID-19 pandemic. European Journal of Teacher Education, 43(4), 523-541. https://doi.org/10.1080/02619768.2020.1815702

Davis, N. L., Gough, M., & Taylor, L. L. (2019). Online teaching: advantages, obstacles and tools for getting it right. Journal of Teaching in Travel & Tourism, 19(3), 256-263. https://doi.org/10.1080/15313220.2019.1612313

Dragan, I. M., & Isaic-Maniu, A. (2013). Snowball sampling completion. Journal of Studies in Social Science, 5(2), 160-177.

Dumford, A. D., & Miller, A. L. (2018). Online learning in higher education: exploring advantages and disadvantages for engagement. Journal of Computing in Higher Education, 30(3), 452-465. https://doi.org/10.1007/s12528-018-9179-z

Dwivedi, Y. K., Rana, N. P., Jeyaraj, A., Clement, M., & Williams, M. D. (2019). Re-examining the unified theory of acceptance and use of technology (UTAUT): Towards a revised theoretical model. Information Systems Frontiers, 21(3), 719-734. https://doi.org/10.1007/s10796-017-9774-y

Dwivedi, Y. K., Rana, N. P., Janssen, M., Lal, B., Williams, M. D., & Clement, M. (2017). An empirical validation of a unified model of electronic government adoption (UMEGA). Government Information Quarterly, 34(2), 211-230. https://doi.org/10.1016/j.giq.2017.03.001

Ersin, P., Atay, D., & Mede, E. (2020). Boosting preservice teachers’ competence and online teaching readiness through e-practicum during the COVID-19 outbreak. International Journal of TESOL Studies, 2(2), 112-124.

Eseadi, C. (2023). Online Learning Attitude and Readiness of Students in Nigeria during the Covid-19 pandemic: A Case of Undergraduate Accounting Students. Innoeduca. International Journal of Technology and Educational Innovation, 9(1), 27-38. https://doi.org/10.24310/innoeduca.2023.v9i1.15503

Estriegana, R., Medina-Merodio, J. A., & Barchino, R. (2019). Student acceptance of virtual laboratory and practical work: An extension of the technology acceptance model. Computers & Education, 135, 1-14. https://doi.org/10.1016/j.compedu.2019.02.010

Firat, M., & Bozkurt, A. (2020). Variables affecting online learning readiness in an open and distance learning university. Educational Media International, 57(2), 112-127. https://doi.org/10.1080/09523987.2020.1786772

Gamage, K. A., Wijesuriya, D. I., Ekanayake, S. Y., Rennie, A. E., Lambert, C. G., & Gunawardhana, N. (2020). Online delivery of teaching and laboratory practices: Continuity of university programmes during COVID-19 pandemic. Education Sciences, 10(10), e291. https://doi.org/10.3390/educsci10100291

Gay, G. H. (2016). An assessment of online instructor e-learning readiness before, during, and after course delivery. Journal of Computing in Higher Education, 28(2), 199-220. https://doi.org/10.1007/s12528-016-9115-z

Geldsetzer, P. (2020). Use of rapid online surveys to assess people’s perceptions during infectious disease outbreaks: a cross-sectional survey on COVID-19. Journal of Medical Internet Research, 22(4), e18790. https://doi.org/10.2196/18790

Geng, S., Law, K. M., & Niu, B. (2019). Investigating self-directed learning and technology readiness in blending learning environment. International Journal of Educational Technology in Higher Education, 16, e17. https://doi.org/10.1186/s41239-019-0147-0

Goh, E., & King, B. (2020). Four decades (1980–2020) of hospitality and tourism higher education in Australia: Developments and future prospects. Journal of Hospitality and Tourism Education, 32(4), 266-272. https://doi.org/10.1080/10963758.2019.1685892

Gopal, R., Singh, V., & Aggarwal, A. (2021). Impact of online classes on the satisfaction and performance of students during the pandemic period of COVID 19. Education and Information Technologies, 26(6), 6923-6947. https://doi.org/10.1007/s10639-021-10523-1

Guðmundsdóttir, G., & Hathaway, D. (2020). “We always make it work”: Teachers’ agency in the time of crisis. Journal of Technology and Teacher Education, 28(2), 239-250.

Hair, J. F., Risher, J. J., Sarstedt, M., & Ringle, C. M. (2019). When to use and how to report the results of PLS-SEM. European Business Review, 31(1), 2-24. https://doi.org/10.1108/EBR-11-2018-0203

Hair Jr, J. F., Sarstedt, M., Hopkins, L., & Kuppelwieser, V. G. (2014). Partial least squares structural equation modeling (PLS-SEM). European Business Review, 26(2), 106-121. https://doi.org/10.1108/ebr-10-2013-0128

Hanafiah, M. (2020). Formative vs reflective measurement model: Guidelines for structural equation modelling research. International Journal of Analysis and Applications, 18(5), 876-889. https://doi.org/10.28924/2291-8639-18-2020-876

Henseler, J. (2012, July 21-23). PLS-MGA: A non-parametric approach to partial least squares-based multi-group analysis. In W. A. Gaul, A. Geyer-Schulz, L. Schmidt-Thieme, & J. Kunze (Eds.), Challenges at the Interface of Data Analysis, Computer Science, and Optimization (pp. 495-501). Springer Berlin Heidelberg.

Henseler, J., Ringle, C. M., & Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science, 43, 115-135. https://doi.org/10.1007/s11747-014-0403-8

Hung, M. L. (2016). Teacher readiness for online learning: Scale development and teacher perceptions. Computers & Education, 94, 120-133. https://doi.org/10.1016/j.compedu.2015.11.012

Iyer, D. G., & Chapman, T. A. (2021). Overcoming technological inequity in synchronous online learning. Communications of the Association for Information Systems, 48(1), 205-212. https://doi.org/10.17705/1CAIS.04826

Jung, I., & Lee, J. (2020). A cross-cultural approach to the adoption of open educational resources in higher education. British Journal of Educational Technology, 51(1), 263-280. https://doi.org/10.1111/bjet.12820

Junus, K., Santoso, H. B., Putra, P. O. H., Gandhi, A., & Siswantining, T. (2021). Lecturer readiness for online classes during the pandemic: A survey research. Education Sciences, 11(3), e139. https://doi.org/10.3390/educsci11030139

Kaplan, K. J. (1972). On the ambivalence-indifference problem in attitude theory and measurement: A suggested modification of the semantic differential technique. Psychological Bulletin, 77(5), 361-372. https://doi.org/10.1037/h0032590

Karen, E., & Etzkorn, B. (2020). The effects of training on instructor beliefs about and attitudes toward online teaching. American Journal of Distance Education, 34(1), 19-35. https://doi.org/10.1080/08923647.2020.1692553

Kaushik, M. K., & Agrawal, D. (2021). Influence of technology readiness in adoption of e-learning. International Journal of Educational Management, 35(2), 483-495. https://doi.org/10.1108/IJEM-04-2020-0216

Kemp, N., & Grieve, R. (2014). Face-to-face or face-to-screen? Undergraduates’ opinions and test performance in classroom vs. online learning. Frontiers in Psychology, 5, 1-11. https://doi.org/10.3389/fpsyg.2014.01278

Keong, Y. C., Albadry, O., & Raad, W. (2014). Behavioral intention of EFL teachers to apply e-learning. Journal of Applied Sciences, 14(20), 2561-2569. https://doi.org/10.3923/jas.2014.2561.2569

Khalil, R., Mansour, A. E., Fadda, W. A., Almisnid, K., Aldamegh, M., Al-Nafeesah, A., Alkhalifah, A., & Al-Wutayd, O. (2020). The sudden transition to synchronized online learning during the COVID-19 pandemic in Saudi Arabia: A qualitative study exploring medical students’ perspectives. BMC Medical Education, 20, e285. https://doi.org/10.1186/s12909-020-02208-z

Khechine, H., Raymond, B., & Augier, M. (2020). The adoption of a social learning system: Intrinsic value in the UTAUT model. British Journal of Educational Technology, 51(6), 2306–2325. https://doi.org/10.1111/bjet.12905

Kim, D., Lee, Y., Leite, W. L., & Huggins-Manley, A. C. (2020). Exploring student and teacher usage patterns associated with student attrition in an open educational resource-supported online learning platform. Computers & Education, 156, 103961. https://doi.org/10.1016/j.compedu.2020.103961

Koet, T. W., & Abdul Aziz, A. (2021). Teachers' and students' perceptions towards distance learning during the COVID-19 pandemic: A systematic review. International Journal of Academic Research in Progressive Education and Development, 10(3), 531-562. https://doi.org/10.6007/ijarped/v10-i3/11005

König, J., Jäger-Biela, D. J., & Glutsch, N. (2020). Adapting to online teaching during COVID-19 school closure: Teacher education and teacher competence effects among early career teachers in Germany. European Journal of Teacher Education, 43(4), 608-622. https://doi.org/10.1080/02619768.2020.1809650

Lassoued, Z., Alhendawi, M., & Bashitialshaaer, R. (2020). An exploratory study of the obstacles for achieving quality in distance learning during the COVID-19 pandemic. Education Sciences, 10(9), e232. https://doi.org/10.3390/educsci10090232

Loomis, K. D. (2000). Learning styles and asynchronous learning: Comparing the LASSI model to class performance. Online Learning, 4(1), 23-32. https://doi.org/10.24059/olj.v4i1.1908

MacKinnon, D. P. (2011). Integrating mediators and moderators in research design. Research on Social Work Practice, 21(6), 675-681. https://doi.org/10.1177/1049731511414148

Maheshwari, G. (2021). Factors affecting students’ intentions to undertake online learning: An empirical study in Vietnam. Education and Information Technologies, 26(6), 6629–6649. https://doi.org/10.1007/s10639-021-10465-8

Mailizar, M., Almanthari, A., & Maulina, S. (2021). Examining teachers’ behavioral intention to use e-learning in teaching of mathematics: An extended TAM model. Contemporary Educational Technology, 13(2), e298. https://doi.org/10.30935/cedtech/9709

Mathew, V. N., & Chung, E. (2020). University students’ perspectives on open and distance learning (ODL) implementation amidst COVID-19. Asian Journal of University Education, 16(4), 152-160. https://doi.org/10.24191/ajue.v16i4.11964

Mazman Akar, S. G. (2019). Does it matter being innovative: Teachers’ technology acceptance. Education and Information Technologies, 24(6), 3415-3432. https://doi.org/10.1007/s10639-019-09933-z

McGee, P., Windes, D., & Torres, M. (2017). Experienced online instructors: Beliefs and preferred supports regarding online teaching. Journal of Computing in Higher Education, 29(2), 331-352. https://doi.org/10.1007/s12528-017-9140-6

Md Yunus, M., Ang, W. S., & Hashim, H. (2021). Factors affecting teaching English as a Second Language (TESL) postgraduate students’ behavioural intention for online learning during the COVID-19 pandemic. Sustainability, 13(6), 3524. https://doi.org/10.3390/su13063524

Mei, B., Brown, G. T., & Teo, T. (2018). Toward an understanding of preservice English as a Foreign language teachers’ acceptance of computer-assisted language learning 2.0 in the People’s Republic of China. Journal of Educational Computing Research, 56(1), 74-104. https://doi.org/10.1177/0735633117700144

Mokhtar, S., Katan, H., & Rehman, H. (2018). Instructors’ behavioural intention to use learning management system: An integrated TAM perspective. Technology, Education and Management Journal, 7(3), 513-525. https://doi.org/10.18421/TEM73-07

Mosunmola, A., Mayowa, A., Okuboyejo, S., & Adeniji, C. (2018, January). Adoption and use of mobile learning in higher education: The UTAUT model. In Proceedings of the 9th International Conference on E-Education, E-Business, E-Management and E-Learning (IC4E ’18) (pp. 20-25). Association for Computing Machinery. https://doi.org/10.1145/3183586.3183595

Munoz, K. E., Wang, M.-J., & Tham, A. (2021). Enhancing online learning environments using social presence: Evidence from hospitality online courses during COVID-19. Journal of Teaching in Travel & Tourism, 21(4), 339-357. https://doi.org/10.1080/15313220.2021.1908871

Nikolopoulou, K., Gialamas, V., & Lavidas, K. (2021). Habit, hedonic motivation, performance expectancy and technological pedagogical knowledge affect teachers’ intention to use mobile internet. Computers and Education Open, 2, 100041. https://doi.org/10.1016/j.caeo.2021.100041

Nikou, S. A., & Economides, A. A. (2018). Factors that influence behavioral intention to use mobile-based assessment: A STEM teachers’ perspective. British Journal of Educational Technology, 50(2), 587–600. https://doi.org/10.1111/bjet.12609

Oguguo, B., Ezechukwu, R., Nannim, F., & Offor, K. (2023). Analysis of teachers in the use of digital resources in online teaching and assessment in COVID times. Innoeduca. International Journal of Technology and Educational Innovation, 9(1), 81-96. https://doi.org/10.24310/innoeduca.2022.v8i1.11144

Omotayo, F. O., & Adekunle, O. A. (2021). Adoption and use of electronic voting system as an option towards credible elections in Nigeria. International Journal of Development Issues, 20(1), 38-61. https://doi.org/10.1108/IJDI-03-2020-0035

Owen, S. M., White, G., Palekahelu, D. T., Sumakul, D. T., & Sediyono, E. (2020). Integrating online learning in schools: Issues and ways forward for developing countries. Journal of Information Technology Education: Research, 19, 571-614. https://doi.org/10.28945/4625

Peattie, K. (2001). Golden goose or wild goose? The hunt for the green consumer. Business Strategy and the Environment, 10(4), 187–199. https://doi.org/10.1002/bse.292

Phan, T. T. N., & Dang, L. T. T. (2017). Teacher readiness for online teaching: A critical review. International Journal on Open and Distance E-Learning, 3(1), 1-16.

Pillay, H., Irving, K., & Tones, M. (2007). Validation of the diagnostic tool for assessing tertiary students’ readiness for online learning. Higher Education Research and Development, 26(2), 217-234. https://doi.org/10.1080/07294360701310821

Prause, M. (2019). Challenges of Industry 4.0 technology adoption for SMEs: The case of Japan. Sustainability, 11(20), e5807. https://doi.org/10.3390/su11205807

Rafique, G. M., Mahmood, K., Warraich, N. F., & Rehman, S. U. (2021). Readiness for online learning during COVID-19 pandemic: A survey of Pakistani students. The Journal of Academic Librarianship, 47(3), 1-10. https://doi.org/10.1016/j.acalib.2021.102346

Ramos-Morcillo, A. J., Leal-Costa, C., Moral-García, J. E., & Ruzafa-Martínez, M. (2020). Experiences of nursing students during the abrupt change from face-to-face to e-learning education during the first month of confinement due to COVID-19 in Spain. International Journal of Environmental Research and Public Health, 17(15), 5519. https://doi.org/10.3390/ijerph17155519

Rana, N. P., Dwivedi, Y. K., Lal, B., Williams, M. D., & Clement, M. (2017). Citizens’ adoption of an electronic government system: Towards a unified view. Information Systems Frontiers, 19(3), 549-568. https://doi.org/10.1007/s10796-015-9613-y

Sabet, S. A., Moradi, F., & Soufi, S. (2022). Predicting students’ satisfaction with virtual education based on health-oriented lifestyle behaviors. Innoeduca: International Journal of Technology and Educational Innovation, 8(2), 43-57. https://doi.org/10.24310/innoeduca.2022.v8i2.13079

Sangeeta & Tandon, U. (2021). Factors influencing adoption of online teaching by school teachers: A study during COVID-19 pandemic. Journal of Public Affairs, 21(4), e2503. https://doi.org/10.1002/pa.2503

Schlenz, M. A., Schmidt, A., Wöstmann, B., Krämer, N., & Schulz-Weidner, N. (2020). Students’ and lecturers’ perspective on the implementation of online learning in dental education due to SARS-CoV-2 (COVID-19): A cross-sectional study. BMC Medical Education, 20, e354. https://doi.org/10.1186/s12909-020-02266-3

Selvaraj, A., Radhin, V., Nithin, K. A., Benson, N., & Mathew, A. J. (2021). Effect of pandemic based online education on teaching and learning system. International Journal of Educational Development, 85, e102444. https://doi.org/10.1016/j.ijedudev.2021.102444

Semerci, A., & Aydın, M. K. (2018). Examining high school teachers’ attitudes towards ICT use in education. International Journal of Progressive Education, 14(2), 93-105. https://doi.org/10.29329/ijpe.2018.139.7

Sewandono, R. E., Thoyib, A., Hadiwidjojo, D., & Rofiq, A. (2023). Performance expectancy of E-learning on higher institutions of education under uncertain conditions: Indonesia context. Education and Information Technologies, 28(4), 4041-4068. https://doi.org/10.1007/s10639-022-11074-9

Sidpra, J., Gaier, C., Reddy, N., Kumar, N., Mirsky, D., & Mankad, K. (2020). Sustaining education in the age of COVID-19: A survey of synchronous web-based platforms. Quantitative Imaging in Medicine and Surgery, 10(7), 1422-1427. https://doi.org/10.21037/qims-20-714

Simamora, R. M. (2020). The challenges of online learning during the COVID-19 pandemic: An essay analysis of performing arts education students. Studies in Learning and Teaching, 1(2), 86-103. https://doi.org/10.46627/silet.v1i2.38

Stangor, C., Tarry, H., & Jhangiani, R. (2014). Principles of Social Psychology – 1st International Edition. Pressbooks by BCcampus.

Tang, Y. M., Chen, P. C., Law, K. M., Wu, C. H., Lau, Y.-y., Guan, J., & Ho, G. T. (2021). Comparative analysis of student’s live online learning readiness during the coronavirus (COVID-19) pandemic in the higher education sector. Computers & Education, 168, e104211. https://doi.org/10.1016/j.compedu.2021.104211

Tiwari, P., Séraphin, H., & Chowdhary, N. R. (2020). Impacts of COVID-19 on tourism education: Analysis and perspectives. Journal of Teaching in Travel & Tourism, 21(4), 313-338. https://doi.org/10.1080/15313220.2020.1850392

Triandis, H. C. (1971). Attitude and attitude change (foundations of social psychology). John Wiley & Sons.

Tsourela, M., & Roumeliotis, M. (2015). The moderating role of technology readiness, gender, and sex in consumer acceptance and actual use of technology-based services. The Journal of High Technology Management Research, 26(2), 124-136. https://doi.org/10.1016/j.hitech.2015.09.003

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425-478. https://doi.org/10.2307/30036540

Wang, Y., Gu, J., Wang, S., & Wang, J. (2019). Understanding consumers’ willingness to use ride-sharing services: The roles of perceived value and perceived risk. Transportation Research Part C: Emerging Technologies, 105, 504-519. https://doi.org/10.1016/j.trc.2019.05.044

Weerakkody, V., Irani, Z., Kapoor, K., Sivarajah, U., & Dwivedi, Y. K. (2017). Open data and its usability: An empirical view from the citizen’s perspective. Information Systems Frontiers, 19(2), 285–300. https://doi.org/10.1007/s10796-016-9679-1

Wei, H.-C., & Chou, C. (2020). Online learning performance and satisfaction: Do perceptions and readiness matter? Distance Education, 41(1), 48-69. https://doi.org/10.1080/01587919.2020.1724768

Wilson, B. J., Deckert, A., Shah, R., Kyei, N., Copeland Dahn, L., Doe-Rogers, R., Hinneh, A. B., Johnson, L. W., Natt, G. D., Verdier, J. A., Vosper, A., Louis, V. R., Dambach, P., & Thomas-Connor, I. (2021). COVID-19-related knowledge, attitudes and practices: A mixed-mode cross-sectional survey in Liberia. BMJ Open, 11(7), 1-14. https://doi.org/10.1136/bmjopen-2021-049494

Wong, G. K. (2016). The behavioral intentions of Hong Kong primary teachers in adopting educational technology. Educational Technology Research and Development, 64(2), 313–338. https://doi.org/10.1007/s11423-016-9426-9

Zikmund, W. G., Carr, B. J., Griffin, M., & Babin, B. J. (2013). Business research methods. Dryden Press.

APPENDIX I

PERFORMANCE EXPECTANCY

- I teach online during the outbreak of COVID-19 because I can have access to students at distant locations.

- I teach online during the outbreak of COVID-19 because it helps me to reach students within the shortest time frame.

- I teach online during the outbreak of COVID-19 because students can continue participating in discussion sections and lectures.

EFFORT EXPECTANCY

- It is easy for me to deliver online lectures.

- The students’ feedback during online class is easy to understand.

- I can solve the problems of students easily during an online class.

- It is easy to customise the lectures online.

- It is easy to participate in discussions during an online class.

FACILITATING CONDITIONS

- My university has provided me with the resources necessary to deliver online classes.

- I have the necessary knowledge to deliver the online lecture.

- Delivering lectures online is compatible with other technologies I use.

- I get help from my university when I face difficulties while delivering classes online.

SOCIAL INFLUENCE

- People whose opinions I value prefer that I should teach online during the COVID-19 pandemic.

- My colleagues and peers think that I should adopt the online mode of teaching during the COVID-19 pandemic.

- People who are important to me think that I should adopt online teaching during the COVID-19 pandemic.

ATTITUDE

- The use of online teaching is a good idea.

- Online teaching is engaging for me.

- Online teaching is fun for me.

- Online teaching makes learning more interesting for students.

- I enjoy teaching online.

BEHAVIOURAL INTENTION

- I intend to teach online throughout the COVID-19 pandemic.

- I intend to teach online after the COVID-19 pandemic.

- I intend to continue adopting online teaching in the future.

- I intend to encourage my peers and colleagues to adopt online teaching in the future.

TECHNICAL READINESS

- I own a computer/laptop/smartphone.

- My computer setup is sufficient for online learning.

- I can access software such as word processor, spreadsheet, or browser.

- I have access to a dedicated network connection.

- I have access to high-speed internet.

LIFESTYLE READINESS

- I have a private place in my home that I can use for my teaching activities.

- I have adequate uninterrupted time in which I can work on my online courses.

- I have resources/experts nearby who will assist me with any technical problems.

- I am an active social media user.

- I am comfortable working online.

PEDAGOGICAL READINESS

- I am always eager to try new technology in education.

- I am a self-motivated, independent learner.

- I don’t need to be in a traditional classroom environment to teach.

- I communicate comfortably online.

- I efficiently use the internet to find additional teaching resources.

- I can work independently without the traditional class arrangement.

- I always experiment with new pedagogical approaches.

- I feel confident delivering online instruction.